Your practice runs on a simple truth: every hour spent wrestling with documentation, patient intake forms, insurance runarounds, and admin chores is an hour stolen from patients—and revenue.

AI isn’t another tech headache waiting to happen. It’s already making life easier for private practices: cutting documentation workloads by up to 97%, spotting the missed billing codes that quietly drain 10-15% of revenue, and more.

No enterprise budgets. No IT departments. Just smart automation that turns your biggest time-sucks into background processes. Here’s exactly how practices like yours are doing it—and why waiting another year means leaving money and sanity on the table.

Key Takeaways

- AI that prints money (and time) already exists, if you pick the boring, proven use cases first: Ambient scribes are cutting documentation time by up to 97% and have banked 15,700 clinician-hours for early adopters; coding-assist tools routinely pull back the 10-15% of revenue lost to under-coding. That’s up to ~$21 extra per visit for a solo therapist before you touch anything grandiose. Skip the shiny demo and chase the line items that move the P&L today.

- Compliance isn’t a checkbox; it’s the tripwire that can bankrupt a small clinic overnight: OCR has handed six-figure fines to solo and five-doc practices for missing the basics, and the proposed 2025 Security Rule update would remove the old “addressable” loopholes. AI amplifies every existing gap 10×, so a missing BAA or sloppy risk analysis can turn your new chatbot into a HIPAA flamethrower. Build the BAA-first vendor filter and annual risk-assessment habit before you pilot the tech.

- Bias liability is now your problem—fortunately, so are the fixes: Under Section 1557 you’re on the hook for discriminatory AI outputs (ask the clinics already fighting class actions). You don’t need a data-science PhD: free toolkits like IBM AI Fairness 360 and Microsoft Fairlearn let any practice track recommendations by demographic and flag >10% disparities in an hour a month. Document those overrides and you’ve just dodged the lawsuit while doing right by patients.

Table of Contents

- Real-Life AI Applications for Private Practices

- Navigating Compliance: Small Practice Edition

- Ethical Implications and Mitigating AI Bias

- How to Choose an AI Tool Without Setting Your Practice on Fire

- Step-by-Step Guide to Implementing AI in Your Practice

Real-Life AI Applications for Private Practices

You don’t need to be Mayo Clinic to make AI work for your practice. In fact, the real AI revolution is happening far from the ivory towers—inside small, scrappy clinics and solo practices where every staff hour and billing line counts.

In 2024, the American Medical Association reported that 66% of physicians are already using some form of AI, with administrative tasks like documentation and coding topping the list. Medical groups are jumping in too: a 2024 MGMA poll showed that 43% of private practices were actively implementing or expanding AI tools, double the adoption from the year before.

The reason? AI isn’t a shiny distraction—it’s quietly becoming the operating system behind practices that run smoother, bill cleaner, and scale without burning out their teams. The following sections break down exactly how that’s playing out, from revenue cycle upgrades to always-on patient support.

1. From Insurance Nightmare to Revenue Machine

Tired of playing “guess that denial code” at 11 p.m.? Let AI do the grunt work while you sleep.

| Pain-point | What AI does now | Proof it actually works |
| --- | --- | --- |
| Automated medical coding | Flags missing CPT/ICD codes, suggests modifiers, and auto-assembles clean claims. | GaleAI pilots show a 10-15% revenue lift from catching under-coding before claims go out (see full case study in our portfolio). |
| Clinical-note overload | Ambient scribes turn exam-room chatter into finished SOAP notes, no template-mashing required. | Small-practice survey of Suki AI users: 41% drop in note-taking time and 60% fewer burnout reports among primary-care docs. |
| Claims optimization | Predicts denials pre-submission, scrubs errors, and learns payer quirks on the fly. | Schneck Medical Center cut average monthly denials by 4.6% using an AI denial-prevention tool. |
| Revenue-cycle analytics | Forecasts cash flow, flags slow-pay patients, and recommends follow-up tactics. | A network using Jorie AI’s predictive analytics saw a 30% jump in patient-payment compliance and sizable A/R shrinkage. |

Why it matters: Stack these gains together and you move from scrambling to resubmit claims to watching a cleaner, faster revenue stream roll in—no extra FTEs, no midnight coding marathons.

2. Patient Journey on Autopilot

Your patients might not even realize there’s a digital autopilot behind their experience—but here’s what’s quietly happening behind the scenes:

Smart Intake Forms

- Adaptive, pre-filled, insurance-verified—no more redundant data entry.

Real-world stat: Cydoc’s smart intake tool cut note-writing time from ~17 min to just 6 min in a controlled study—while maintaining quality.

Intelligent Scheduling

AI predicts who’s likely to no-show, optimizes daily slots, fills cancellations, and sends reminders.

- A meta-analysis of AI scheduling models found they improved attendance by ~10% monthly and boosted capacity by 6%.

- Front-office teams leveraging predictive reminders report no-show reductions of up to 30%.

Prior Authorization Automation

AI fills pre-auth forms, tracks carrier responses, flags expiring authorizations—and does it without human drama.

Example: Dr. Azlan Tariq (who runs a rehabilitation medicine practice in Illinois) saw approvals jump from 10% to 90% using Doximity GPT for writing claims.

Remote Patient Monitoring (RPM)

Think smart alerts for vitals—via patient-submitted imagery or wearables—not just Rx refills.

- HealthSnap’s 2025 breakdown: top RPM use cases include real-time anomaly detection and patient engagement, all rising fast.

- AiCure’s vision-based adherence tool verifies meds taken via smartphone and improved compliance during a trial run.

Our case study: Allheartz uses computer vision to guide at-home recovery exams, cutting in-person visits by up to 50% while slashing clerical workload for physicians—see case in our portfolio.

Why it matters: These tools save time, reduce no-shows, improve revenue flow, and actually lighten staff workloads—all while patients get a smoother, less frustrating experience.

3. Your 24/7 Digital Staff

Think of this as your dream admin team—minus the payroll. These AI tools quietly keep the wheels turning, 24/7.

| AI-Powered Internal “Staff” | What It Actually Does | Proof It’s Working |
| --- | --- | --- |
| Internal Knowledge Base AI | Instant chat answers on protocols & policies. | Mi-Life’s chatbot gives real-time protocol lookups and policy reminders, so no more digging through 1,300-page binders. |
| Task Routing Automation | Auto-triages requests and assigns staff. | Simbo.ai’s predictive call-routing (used in ambulatory networks) reduces handoffs and wait times by routing calls to the right staff first. |
| Staff Communication Hub | Summarizes charts into bite-size briefs. | Sunwave Health reported LLMs summarizing clinical records as accurately as clinicians 81% of the time, freeing staff from info overload. |
| Inventory & Supply Management | AI predicts low-stock or expirations, auto-generates reorder requests, flags anomalies. | Clinics using predictive analytics saw waste go down and supply costs optimized, with one case reporting 20–30% less overstock. |

Why this matters: Your own staff deserve tools that work for them, not against them. When AI handles the grunt work, your team can actually focus on patient care, and maybe sneak out of the office on time sometimes.

4. Keeping Patients Engaged Between Visits

Patients don’t just vanish after discharge—they forget meds, skip check-ins, and wander off the care path.

Treatment Adherence Support

AI systems track med routines, check adherence, and nudge both patients and providers when compliance dips. A clinical study found AI-driven platforms boosted medication adherence by 67%, with over 80% of users rating the tool as “extremely good.”

Patient Education Chatbot

Round-the-clock bots answer FAQs, offer condition-specific guidance, and triage minor concerns. Research shows chatbots cut call volume and improve information access, and clinics using them report 30% higher patient satisfaction scores.

Automated Care Plans

AI tailors follow-up plans, adjusts based on progress, and dispatches timely health tips—all without staff intervention. Large language model studies confirm it’s effective at tracking conversational progress and prompting the next step.

Outcome Tracking

Through chatbots or surveys, AI captures patient-reported outcomes, identifies concerning trends, and flags when intervention is needed. In diabetic retinopathy care, LLM-based tools collected PROMs more interactively than static surveys.

Why it matters: Automated engagement isn’t just about keeping patients busy—it’s about keeping them well. That means fewer calls, more compliance, and interventions before minor issues become major headaches.

5. Growing Your Practice on Autopilot

| Growth Lever | AI on Autopilot | Proof It Works |
| --- | --- | --- |
| Review Management | Monitors ratings, drafts polite replies, nudges happy patients. | Invigo Media clients logged 35% more 5-star reviews after switching to AI review prompts. |
| Patient Reactivation | Spots dormant charts, fires off personalized win-back texts/emails. | Puppeteer AI reactivated 53 of 107 lapsed sleep-apnea leads (≈50% booking rate). |
| Referral Optimization | Tracks sources, auto-thanks top referrers, flags leakage. | Innovaccer’s AI cut referral leakage and boosted completed referrals by 12% in pilot clinics. |
| Local SEO & Content | Generates location-specific posts, updates listings, watches rankings. | Clyck Digital reported clients jumping two Google map-pack spots in 60 days using AI-generated local content. |

Why it matters: While you’re treating patients, these algorithms quietly polish your online presence, revive old leads, and keep the referral engine humming—no extra marketing headcount required.

Navigating Compliance: Small Practice Edition

The enforcement reality you need to know: OCR isn’t just chasing hospital systems anymore. They’re actively targeting small practices with six-figure fines for basic compliance failures.

A solo psychiatrist in Massachusetts: $100,000 fine. A five-physician cardiac practice in Arizona: $100,000. A small urgent care in Louisiana: $480,000 after a phishing attack. The common thread? They all failed to conduct a basic security risk analysis—the same foundational step most small practices skip.

AI amplifies your existing compliance gaps 10x. If you haven’t done a risk analysis for your current systems, adding AI is like pouring gasoline on a compliance fire. OCR’s message is clear: master the basics or pay the price.

The new rules hit small practices hardest. The proposed HIPAA Security Rule updates (January 2025) would eliminate the “addressable” specifications that gave small practices flexibility. If finalized, you’ll need:

- Annual written risk analyses with AI-specific threat assessments

- Technology asset inventories showing exactly where PHI flows

- 72-hour data restoration capabilities (nearly impossible without dedicated IT)

- Annual compliance audits—no exceptions

Your Biggest AI-specific HIPAA Risks

- PHI pasted into public chatbots: Well-meaning staff paste patient notes into ChatGPT asking for summaries. Instant breach. One practice discovered their medical assistant had been doing this for months.

- Data exhaust: That helpful chatbot on your website? Every interaction creates PHI—even anonymous symptom questions combined with IP addresses. No BAA = automatic violation.

- The re-identification trap: “De-identified” data isn’t automatically safe anymore. AI can cross-reference your “anonymous” dataset with public information to identify patients. The Dinerstein v. Google lawsuit was built on exactly this allegation, which shows the risk is already being litigated.

Selecting AI Tools: Key Security and Privacy Considerations

The BAA is your first filter—and most will fail. Before ANY other evaluation, ask: “Will you sign a HIPAA Business Associate Agreement for this specific service?” The answer tells you everything:

| Vendor Type | Examples | BAA? | Your Risk Level |
| --- | --- | --- | --- |
| General AI | ChatGPT (free), Claude.ai | NO | Instant HIPAA violation |
| Platform AI | OpenAI API, AWS Bedrock | Yes, but… | High: you configure everything |
| Healthcare AI | Hathr.AI, BastionGPT | YES | Lower: built for compliance |

Critical distinction: “HIPAA-eligible” ≠ HIPAA-compliant. AWS will sign a BAA, but YOU’RE responsible for encryption, access controls, and configuration. One misconfigured setting = publicly exposed PHI.

Your due diligence checklist (non-negotiables):

- Data usage policy: “Will our data train your AI models?” If yes, walk away.

- Security audits: Demand SOC 2 Type II or ISO 27001. “Our platform is secure” isn’t evidence.

- Data deletion: For AI scribes—audio files must be deleted immediately after transcription.

- Subcontractor BAAs: They must have BAAs with their cloud providers too.

Tool-specific Security Considerations

AI Scribes (highest risk): You’re creating audio recordings of therapy sessions—prime blackmail material. Require:

- Explicit patient consent (not buried in privacy notices)

- Contractual prohibition on using session data for AI training

- Immediate audio deletion after transcription

- Right to revoke consent mid-session

Front-desk Chatbots: Every website interaction creates PHI. Even “What are treatment options for depression?” + IP address = PHI. Most marketing chatbots won’t sign BAAs. Use only healthcare-specific tools.

Clinical Decision Support: The “black box” problem—you’re legally responsible for decisions you can’t explain. Always maintain “human in the loop” oversight.

The bottom line: Every AI tool that touches patient data needs three things: a signed BAA, documented bias testing, and ongoing monitoring. Skip any of these and you’re gambling with your practice’s future. The fines are real, the lawsuits are multiplying, and OCR is watching. But with the right approach, you can harness AI’s benefits while protecting both your patients and your practice.

Ethical Implications and Mitigating AI Bias

The bias already killing patients: This isn’t hypothetical. Real examples from your peers:

- UnitedHealth’s nH Predict: A class action alleges a 90% error rate in denials of necessary care for Medicare patients. Families spent $150,000+ out-of-pocket.

- VBAC Calculator: Assigned Black/Hispanic women 5-15% lower success rates based solely on race, leading to unnecessary C-sections.

- Epic’s Sepsis Model: Missed 67% of sepsis cases while alerting on 18% of all patients.

Why do these fiascos keep popping up?

They all spring from the same three failure points. First, garbage in: training data often bakes in old-school assumptions—like treating race as a biological variable—so the model inherits that bias. Second, wobble in the middle: once deployed in the wild, performance degrades on populations the developers never tested, so “FDA-grade” accuracy melts to coin-flip levels. Finally, carnage at the end: bad outputs translate into real-world harm—denials, misdiagnoses, and eye-watering out-of-pocket bills—exposing practices to both malpractice claims and 1557 suits.

Your legal liability is real. Section 1557 (effective July 2024) makes YOU responsible for discriminatory AI outcomes—even if you bought the tool from a vendor. Class action lawsuits are proliferating. California requires AI disclosure and human review. 250+ state bills are pending.

Why Small Practices Are Uniquely Vulnerable

- No negotiating power to demand bias testing data

- Vendors claim “bias-free” or “neutral” (immediate red flag)

- 56% of hospitals don’t assess AI bias—small practices likely worse

- Staff trust AI for majority populations while it fails minorities

Practical Bias Mitigation within Your Budget

Immediate actions (this week):

- Inventory every AI tool touching patient data

- Ask vendors: “What demographics were in your training data? How do you test across populations?”

- Red flag: Vague answers or “proprietary” excuses

Monthly monitoring (one hour):

- Track AI recommendations by patient demographics in a spreadsheet

- Flag >10% disparities between groups

- Document when clinicians override AI and why

- Example: Intake chatbot recommends immediate appointments for 60% of white patients but 45% of Black patients = investigate
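The monthly check above can be automated from the same spreadsheet export. What follows is a minimal sketch, not a validated fairness audit: the function names are hypothetical, and it reads the “>10%” rule as a gap of more than 10 percentage points between the highest and lowest group rates, which is an assumption (a relative-difference reading would give different flags).

```python
from collections import defaultdict

# Assumption: ">10% disparity" means more than 10 percentage points.
DISPARITY_THRESHOLD = 0.10


def rates_by_group(records):
    """records: iterable of (group, recommended) pairs.

    Returns {group: share of that group's records where recommended is True}.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        positives[group] += int(recommended)
    return {group: positives[group] / totals[group] for group in totals}


def flag_disparity(rates, threshold=DISPARITY_THRESHOLD):
    """Return (gap, flagged), where gap is the max minus min group rate."""
    gap = max(rates.values()) - min(rates.values())
    return gap, gap > threshold


# The example from the text: 60% vs 45% immediate-appointment recommendations.
records = (
    [("white", True)] * 60 + [("white", False)] * 40
    + [("Black", True)] * 45 + [("Black", False)] * 55
)
rates = rates_by_group(records)
gap, flagged = flag_disparity(rates)
print(rates, flagged)
```

With small monthly volumes a gap can appear by chance, so a flag here is a prompt to investigate and document, not proof of bias.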

Free tools that compete with expensive consultants:

- IBM’s AI Fairness 360 toolkit

- Microsoft’s Fairlearn (minimal tech knowledge required)

- Google’s What-If Tool (works in a browser)

Collaborative approaches:

- Partner with other practices to share assessment costs

- Academic medical centers want real-world validation data

- Professional associations offer small-practice resources

Documentation that protects you:

- Vendor bias testing reports

- Local validation results

- Clinical override rationales

- Updated consent forms addressing AI limitations

- Corrective actions taken

Building Bias Prevention into Your Practice Is Survival

The evidence is stark: AI algorithms are systematically harming patients through discriminatory care decisions, and regulators are holding practices accountable. But here’s what matters: you don’t need a Fortune 500 budget or a PhD in machine learning to protect your patients and your practice.

Start with the basics—verify training data diversity, track outcomes by demographics, document your oversight. Every bias you catch before it harms a patient is a lawsuit avoided, a life improved, and trust maintained. The tools exist, the knowledge is here, and the time to act is now. Your patients are counting on you to get this right.

How to Choose an AI Tool Without Setting Your Practice on Fire

Before we start crunching numbers, let’s get one thing straight: “HIPAA-compliant” is just the cover charge. The real party is inside—where workflow fit, ROI, and vendor stamina decide whether you’re sipping champagne or putting out fires.

ROI or Bust

If an AI pitch can’t survive a 60-second back-of-napkin test, walk away:

- Time saved × hourly clinician rate − subscription cost = payback.

- Real math: ambient scribes trimming 5-7 minutes per visit have clocked 15,700 clinician-hours saved in a single year across early adopters. For a therapist billing $180/hour ($3 per minute), that’s $15-$21 per visit back in your pocket.

Anything that can’t beat that bar is a toy, not a tool.
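The napkin test is easy to turn into a few lines you can rerun for every vendor quote. This is a rough sketch: the function names are made up for illustration, and the 80 visits per month and $300 monthly subscription in the example are hypothetical inputs, not figures from any vendor.

```python
def per_visit_value(minutes_saved: float, hourly_rate: float) -> float:
    """Dollar value of the clinician time recovered on one visit."""
    return minutes_saved * hourly_rate / 60


def monthly_net_gain(minutes_saved: float, hourly_rate: float,
                     visits_per_month: int, subscription_cost: float) -> float:
    """Time saved x hourly clinician rate - subscription cost, for one month."""
    recovered = per_visit_value(minutes_saved, hourly_rate) * visits_per_month
    return recovered - subscription_cost


# From the text: 7 minutes saved per visit at $180/hour.
print(per_visit_value(7, 180))            # 21.0

# Hypothetical practice: 80 visits a month, $300/month subscription.
print(monthly_net_gain(7, 180, 80, 300))  # 1380.0
```

Run it with the low end of the range (5 minutes) as well. If the net gain only works at the vendor’s best-case number, treat the pitch with suspicion.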

Will It Actually Fit the Flow?

Run this five-question sanity check:

- Can it push structured notes straight into your EHR—no copy-paste gymnastics?

- Does it surface flags inside your existing inbox, not a shiny new dashboard you’ll ignore?

- Can a non-tech staffer recalibrate the model (new prompts, templates) in < 10 minutes?

- Is there an “off” switch that reverts to your legacy workflow in seconds?

- Does it keep working when the Wi-Fi hiccups? (Rural practices, looking at you.)

Score three or more “no” answers? Next vendor, please.

Vendor Reality Check

Cool demo—but will the company still exist at renewal time? A sobering cue: digital-health darlings like Pear Therapeutics flamed out into Chapter 11 just two years after a $1 billion+ IPO dream. Ask for:

- Latest funding round + cash-on-hand runway.

- At least three like-sized private-practice references you can actually phone.

- A named support engineer, not a generic “help@” inbox.

Buzzword Decoder (Speed-Read Edition)

| Sales Term | Acceptable Translation | Run-for-the-Hills Sign |
| --- | --- | --- |
| Predictive | Shows risk score and the input factors. | Black-box number, no rationale. |
| Generative | Outputs cite sources you can audit. | “Trust us, it’s GPT-4-ish.” |
| Ambient | Works at ≤ 60 dB, drafts note in < 30 sec. | Needs special mic rig or post-visit edits. |
| Self-learning | Retrains only on your approved data slice. | Updates silently overnight. |

Red Flags That Trump Any Feature List

- “HIPAA-compliant” but no Business Associate Agreement.

- ROI claims without a baseline study or control group.

- Price quotes that spike 3× after the first year (“pilot pricing”).

- Performance guarantees phrased as percentages of improvement with no hard numbers.

If a vendor can nail the math, glide into your everyday workflow, and prove they’ll be alive next year—great. If not, the cheapest move is to keep your current manual process a little longer and keep shopping.

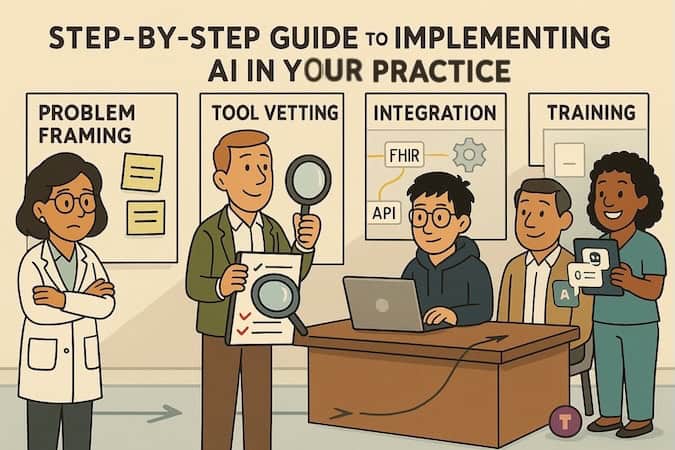

Step-by-Step Guide to Implementing AI in Your Practice

(Field-tested by real private practices—no theory, just what worked.)

AI Readiness Assessment — Brutal Honesty First

Most practices overestimate their readiness and underestimate the groundwork. Check yourself in five domains:

| Domain | Quick Reality Check | Why It Matters |
| --- | --- | --- |
| Technical Infrastructure | Stable 25 Mbps+ internet? Cloud-based EHR with open APIs? | 40% of small practices fail here. Fix it or nothing else sticks. |
| Data Quality & Governance | Clean, standardized data? 12+ months of history? | Garbage in → garbage AI out. |
| Workflow Maturity | Processes documented? Baseline metrics captured? | You can’t improve what you don’t measure. |
| Financial Capacity | Budget $5k–$15k/mo, ROI 6–12 mo break-even? | Surprise costs = stalled projects. |
| Cultural Readiness | Named AI champion? Staff trust tech? | One resistant provider can sink everything. |

Score yourself:

- 4–5 domains = green light

- 2–3 = yellow—patch gaps first

- 0–1 = red—lay foundations before anything else
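If you want the self-assessment on record (useful when you repeat it in six months), the scoring rule fits in a small helper. This is only a sketch of the thresholds listed above; the function name and the dictionary format are assumptions made for illustration.

```python
DOMAINS = (
    "Technical Infrastructure",
    "Data Quality & Governance",
    "Workflow Maturity",
    "Financial Capacity",
    "Cultural Readiness",
)


def readiness_light(passed: dict) -> str:
    """Map the number of domains passed to green / yellow / red.

    passed: {domain name: True/False}. Missing domains count as not passed.
    """
    score = sum(1 for domain in DOMAINS if passed.get(domain, False))
    if score >= 4:
        return "green"   # 4-5 domains: green light
    if score >= 2:
        return "yellow"  # 2-3 domains: patch gaps first
    return "red"         # 0-1 domains: lay foundations first


# Hypothetical practice: solid infrastructure and budget, nothing else yet.
print(readiness_light({
    "Technical Infrastructure": True,
    "Financial Capacity": True,
}))  # yellow
```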

Real-world win: GaleAI nailed these pillars up front and shipped an MVP in nine months that recovered $1.14 M/yr in missed revenue.

Custom AI Solution Design — Match Tech to Pain, Not Hype

Phase 1 (Week 1-2): Prioritize the Pain

| Pain Point | Time Lost | Revenue Hit | AI Feasibility | Priority |
| --- | --- | --- | --- | --- |
| Prior auths | 10 h/wk | $50k/yr | High | 1 |
| Clinical docs | 15 h/wk | $30k/yr | High | 2 |
| No-show mgmt | 5 h/wk | $75k/yr | Med | 3 |

Phase 2 (Week 3-4): Architect Smart, Start Narrow

- Wrong: “Let’s AI-enable everything.”

- Right: “Fix coding first, prove ROI, then tackle documentation.”

- That’s exactly how GaleAI went from pilot to platform.

Design principles that work:

- Human-in-the-loop: AI suggests, clinician signs off.

- Incremental automation: automate the routine 80% of cases, escalate the remaining 20%.

- Workflow fit: no extra clicks (see Allheartz streaming pose data straight into the doctor dashboard).

- Measurable outcomes: define success metrics before code is written.
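One common way to combine the first two principles is confidence-based routing: high-confidence suggestions go to a fast sign-off queue, everything else is escalated for a full manual review. The sketch below assumes the AI tool reports a confidence score between 0 and 1, which not every product does; the class, field, and queue names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    case_id: str
    proposed_code: str
    confidence: float  # 0.0-1.0; assumed to be reported by the AI tool


def route(suggestion: Suggestion, threshold: float = 0.90) -> str:
    """Pick a review queue. Both queues end with a clinician sign-off."""
    if suggestion.confidence >= threshold:
        return "routine_signoff"   # clinician confirms the suggestion
    return "escalated_review"      # clinician works the case manually


def routine_share(suggestions, threshold: float = 0.90) -> float:
    """Fraction of cases that land in the routine queue at this threshold."""
    routed = [route(s, threshold) for s in suggestions]
    return routed.count("routine_signoff") / len(routed)


# Hypothetical batch of coding suggestions.
batch = [
    Suggestion("c1", "99213", 0.99),
    Suggestion("c2", "99214", 0.95),
    Suggestion("c3", "90837", 0.92),
    Suggestion("c4", "99213", 0.91),
    Suggestion("c5", "99215", 0.60),
]
print(routine_share(batch))  # 0.8
```

The 0.90 threshold is a placeholder. In practice you would tune it on your own shadow-mode data until roughly 80% of cases are routine, and check that the error rate in that queue is acceptable.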

Phase 3 (Week 5-6): Vendor Scorecard

- BAA on day one (pass/fail).

- Proof of small-practice success, not just hospital pilots.

- Transparent pricing—no “integration surprises.”

- API docs you can read without coffee.

- 24/7 support (your time zone).

- Clean exit clause.
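To compare vendors side by side, the scorecard can be expressed as one hard gate plus a simple count. This is an illustrative sketch: the criterion keys are hypothetical labels for the bullets above, and equal weighting is an assumption you may want to change.

```python
CRITERIA = (
    "small_practice_proof",
    "transparent_pricing",
    "readable_api_docs",
    "support_24_7",
    "clean_exit_clause",
)


def score_vendor(answers: dict):
    """Return None if there is no BAA (automatic fail), else criteria met out of 5."""
    if not answers.get("baa", False):
        return None
    return sum(1 for criterion in CRITERIA if answers.get(criterion, False))


# Hypothetical vendors.
vendor_a = {"baa": True, "small_practice_proof": True,
            "transparent_pricing": True, "clean_exit_clause": True}
vendor_b = {"baa": False, "small_practice_proof": True,
            "transparent_pricing": True, "readable_api_docs": True,
            "support_24_7": True, "clean_exit_clause": True}

print(score_vendor(vendor_a))  # 3
print(score_vendor(vendor_b))  # None
```

Vendor B ticks every other box and still fails, which is the point of making the BAA pass/fail instead of one more line item.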

AI Implementation & Integration — The 90-Day Sprint

| Day Range | Focus | Key Moves |
| --- | --- | --- |
| 1–30 | Foundation | Wire APIs, lock security, capture baseline metrics, train 2-3 power users. |
| 31–60 | Controlled Roll-out | Run AI in parallel (“shadow mode”), dual-verify outputs, weekly tweak loops. |
| 61–90 | Scale & Optimize | Onboard skeptics, remove redundant steps, enable advanced features, start live KPI dashboard. |

Trust hack: Mi-Life uses RAG plus an “I-don’t-know” fallback, cutting hallucinations and boosting staff confidence.
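The general idea behind a retrieval step with an “I don’t know” fallback can be shown with a toy example. To be clear, this is not Mi-Life’s implementation: it swaps in simple word overlap for a real embedding search, it returns the matched passage directly where a real system would hand it to a language model, and the function names, the 0.5 cutoff, and the sample policies are all invented for illustration.

```python
import re


def tokens(text: str) -> set:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list, min_overlap: float = 0.5):
    """Return the best-matching passage, or None if nothing clears the bar."""
    q = tokens(question)
    if not q:
        return None
    best, best_score = None, 0.0
    for passage in passages:
        score = len(q & tokens(passage)) / len(q)
        if score > best_score:
            best, best_score = passage, score
    return best if best_score >= min_overlap else None


def answer(question: str, passages: list) -> str:
    passage = retrieve(question, passages)
    if passage is None:
        # Fallback: refuse instead of guessing.
        return "I don't know. Please check with the practice manager."
    return f"According to policy: {passage}"


# Hypothetical policy snippets.
policies = [
    "Sharps containers are replaced when three quarters full.",
    "Front desk verifies insurance at every visit.",
]
print(answer("When are sharps containers replaced?", policies))
print(answer("What is the parking validation policy?", policies))
```

The second question has no supporting passage, so the bot declines. That refusal path is what keeps staff from being handed a confident but invented answer.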

Integration Patterns That Prevent Disaster

- API hierarchy: Native EHR › FHIR/HL7 › Secure REST › Never CSV.

- Data flow: EHR → Secure API → AI → Human Review → EHR, with audit log + override.

- Rollback: Kill switch + monthly “revert-to-manual” drills.
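The data-flow and rollback bullets can be sketched as a thin wrapper around the human review step. This is a simplified illustration under stated assumptions: the class and field names are hypothetical, the EHR and AI calls are left out entirely, and a production audit log would live in access-controlled storage, not an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ReviewPipeline:
    ai_enabled: bool = True                      # the kill switch
    audit_log: list = field(default_factory=list)

    def process(self, note_id: str, ai_draft: str,
                reviewer: str, final_text: str) -> str:
        """Log what the AI proposed and what the human approved."""
        draft = ai_draft if self.ai_enabled else None
        self.audit_log.append({
            "note_id": note_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "ai_draft": draft,
            "reviewer": reviewer,
            "overridden": draft is not None and final_text != draft,
            "final_text": final_text,
        })
        return final_text  # only human-approved text goes back to the EHR


pipeline = ReviewPipeline()
pipeline.process("n1", "draft A", "dr_lee", "draft A")    # accepted as-is
pipeline.process("n2", "draft B", "dr_lee", "edited B")   # clinician override
pipeline.ai_enabled = False                               # revert-to-manual drill
pipeline.process("n3", "draft C", "dr_lee", "manual C")   # AI draft ignored
print([entry["overridden"] for entry in pipeline.audit_log])  # [False, True, False]
```

Flipping `ai_enabled` during the monthly drill confirms that notes still flow when the AI is off, and the override flags double as the documentation trail for bias reviews.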

Allheartz kept its ML in the cloud from day one, so scaling to new orgs meant toggling config—not refactoring code.

Common Implementation Killers (and Fast Fixes)

| Killer | Early Sign | Prevention |
| --- | --- | --- |
| Alert fatigue | Ignored AI flags | Start with high-confidence cases only |
| Shadow IT | Staff use ChatGPT on phones | Provide a vetted, compliant bot up front |
| Data decay | Accuracy drifts | Monthly data-quality audits |
| Scope creep | “Can it also…?” | Stick to original KPIs |
| API breaks | Random failures | Redundant monitoring + logging |

Success Checklist — Tick These Before You Pop Champagne

- Baseline metrics captured

- Role-based champions named

- Rollback tested

- HIPAA verified

- 30/60/90 milestones set

- 20% budget buffer reserved

- Consent policies updated

- Staff know when not to use AI

Bottom line: Follow the groundwork, pilot smart, and scale methodically, and AI will buy back clinician hours instead of burning them. Skip steps and you’ll own shelf-ware nobody trusts. Your call.

Ready to stop duct-taping your workflow and actually make AI work for your practice?

We’ve helped private clinics, therapy teams, and specialty providers go from “Where do we even start?” to “Why didn’t we do this sooner?”—without breaking budgets or trust.

If you want help figuring out what actually makes sense to automate (and what to leave alone), check out our AI consulting and development services.

Or better yet—book a quick call and let’s map it out together. We don’t do fluff. We do working AI.

Frequently Asked Questions

What's the very first step if I'm AI-curious but overwhelmed?

Start with a time-leak audit. Track where you (or staff) burn the most minutes—charting, intake triage, phone tag. If a task is high-volume, rules-based, and soul-sucking, it’s usually ripe for automation. That single exercise will narrow 200 shiny products down to 2-3 serious contenders.

How much budget should I set aside for an AI pilot?

For off-the-shelf “ambient scribe” or “smart intake” tools, expect $200–$400 per clinician per month. Factor another 10–20 hours of your PM or tech lead’s time for vetting, integration, and training. A well-scoped pilot shouldn’t cross low five figures.

Do I need a BAA with every AI vendor I touch?

If the tool ever sees PHI—yes, full stop. Even “de-identified” data can drift back to identifiable once you join it with your EHR. No BAA, no deal.

How do I keep biased recommendations from sneaking into patient care?

Require vendors to show their model’s training data provenance and any fairness audits. Internally, sample 5–10% of AI outputs monthly, stratified by age, gender, and race. If you see a >10% disparity in acceptance rates or clinical actions, pause, retrain, or swap vendors.
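Stratified sampling just means drawing the same fraction from each demographic group so small groups are not drowned out. Here is a minimal sketch using only the standard library; the function name, the record format, and the “at least one per group” rule are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict


def stratified_sample(outputs, key, fraction=0.05, seed=0):
    """Draw `fraction` of the outputs from each stratum, at least one each."""
    rng = random.Random(seed)  # fixed seed so the audit sample is reproducible
    strata = defaultdict(list)
    for item in outputs:
        strata[key(item)].append(item)
    sample = []
    for items in strata.values():
        k = max(1, math.ceil(len(items) * fraction))
        sample.extend(rng.sample(items, k))
    return sample


# Hypothetical month of AI outputs: 100 from one group, 20 from another.
outputs = (
    [{"id": i, "group": "A"} for i in range(100)]
    + [{"id": 100 + i, "group": "B"} for i in range(20)]
)
sample = stratified_sample(outputs, key=lambda o: o["group"], fraction=0.10)
print(len(sample))  # 12
```

In a real audit the key would combine the attributes you stratify on, for example an (age band, gender, race) tuple, and the sampled cases would go to a clinician for manual review.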

Our EHR already has AI add-ons - why not just click enable?

Because default settings often bypass your clinic’s custom workflows, user-permission levels, or regional privacy laws. Treat built-in AI the same as a third-party integration: sandbox it, test edge cases, and document the risk assessment.

How long before we see ROI?

Typical wins show up in 30–60 days: reduced overtime on notes, faster reimbursement cycles, fewer no-shows thanks to smart reminders. Full payback on a modest pilot often lands in quarter two—well before annual budget review.

What if the pilot flops?

Shut it down, capture the lessons learned, and move on. A failed $8K experiment beats a $180K, two-year sinkhole. Iterate like any agile project: small, measurable, reversible.