It’s 7:42 a.m. Monday. The portal is stacked, remote patient monitoring (RPM) is chirping like a slot machine, and your on-call left three “please fix this” notes. An AI triage system isn’t a moonshot here. It’s plumbing: take messy symptoms, vitals, and messages; explain the why; and route to the one queue that can actually act. Assistive first, human-in-the-loop always, with rationale your clinicians can scan in five seconds and an audit trail an auditor can follow in fifty.

We wire FHIR write-backs, practice management (PM) scheduling hooks, and conservative after-hours defaults so next steps happen, not just get labeled. And we measure the boring things that move ROI (minutes saved, re-contacts avoided, mix shift to self-care) so finance nods while clinicians keep using it.

This guide shows how to ship that, fast.

Key Takeaways

- Triage isn’t a model; it’s a conveyor belt. If the suggestion doesn’t become a routable task with EHR write-backs, PM scheduling, and a telehealth handoff, you’re labeling—not triaging. The architecture, not the algorithm, drives outcomes.

- Ship assistive-first and stay on the CDS side of the line. Human-in-the-loop, rationale before result, immutable audit trails, and a kill switch keep you compliant and trusted; LLMs summarize text, they don’t diagnose. Push autonomy only where the data and clinicians prove it.

- Prove ROI by cohort, then scale where the math clears. Track cost/triage vs minutes saved, disposition mix shift, and safety signals—channel by channel (after-hours usually wins first). Beware “task shifting,” denial leakage, and imaginary deflections; hit exit criteria over 2–3 sprints before expanding.

Table of Contents

- Why AI Triage Now (and When Not To)

- Define the Win: Outcomes, Guardrails, Scope

- Architecture at a Glance

- Data Plumbing You Can’t Skip

- Build vs Buy vs Hybrid

- Implementation Plan

- Human-in-the-Loop That Clinicians Trust

- Compliance and Risk — What Actually Matters

- Integration Patterns That Survive Production

- Model Strategy Without the Hangover

- Measuring ROI — No Spreadsheet Yoga

- How Topflight Can Help with AI Triage

Why AI Triage Now (and When Not To)

Your clinicians are drowning in portal messages and “Is this urgent?” DMs, while budgets and headcount aren’t exactly doing jumping jacks. AI triage in healthcare earns its keep by doing first-pass intake, risk stratification, and routing quickly, so humans spend time where it’s clinically (and financially) worth it. That starts with precise symptom assessment, recent medical history, and trend-aware vital signs, not more forms.

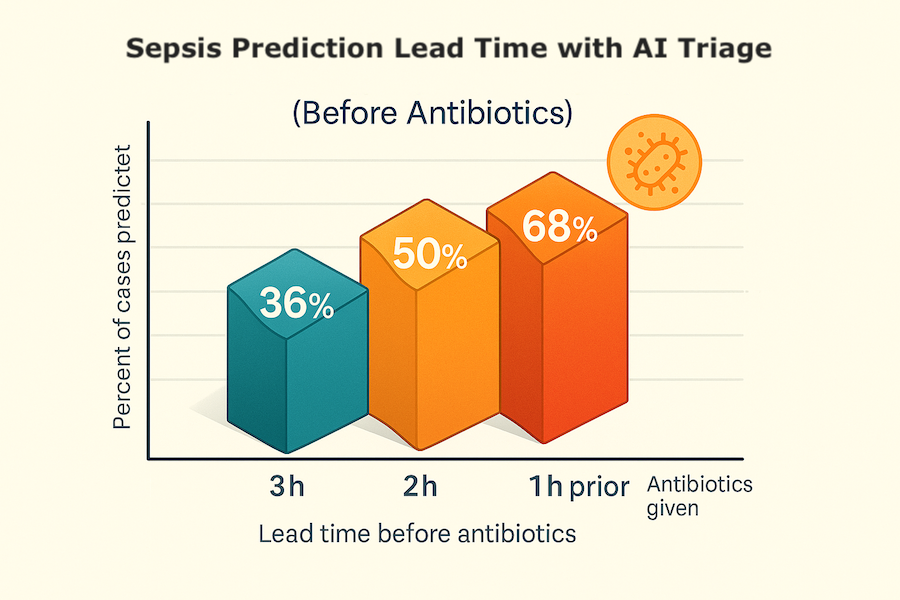

Quick reality check: at Cleveland Clinic, mean quarterly patient-initiated messages climbed from 340 to 695 between late-2019 and late-2021; every +10 messages correlated with ~12 extra minutes of after-hours EHR time per clinician.

Translation: inbox sprawl directly taxes nights and weekends. And nationally, portal traffic spiked at the pandemic onset and has stayed elevated, not returning to pre-COVID baselines.

Where AI Triage Shines

- Telehealth intake: compresses history-taking into a structured, clinician-ready summary.

- RPM escalations: combines trend-aware vitals + symptoms to avoid alert fatigue.

- After-hours coverage: safe defaults + clear handoffs by morning.

- Navigation: directs low-acuity cases to self-care, nurse line, or next-available slot.

Call this triage automation: lightweight rules plus risk scoring that turn messy narratives into actionable dispositions, each with a rationale and a route through your clinical workflows.

In the ED literature, ML/NLP approaches are often reported to improve triage accuracy and consistency compared with manual-only approaches, which is a useful signal even if you stay assistive.

Hard NOs

- High-acuity or red-flag presentations (e.g., chest pain + syncope) without immediate human review.

- No labeled data or feedback loop—your model will drift into fiction.

- Black-box vendors with no rationale/audit trail and no BAA.

- Weak governance: unclear medico-legal ownership, no kill switch, no bias monitoring.

- Fragile plumbing: EHR/PM fragmentation that blocks disposition from turning into action.

Stick to the sweet spots and respect the hard NOs, and you’ll cut time-to-disposition and nurse minutes per case without gambling on patient safety.

Define the Win for AI in Triage: Outcomes, Guardrails, Scope

Before anyone wires a model, agree on what a “good day” looks like. For AI in triage, “done” means measurable lift on speed, safety, and cost—without creating new operational debt. That definition should align with your clinical workflows, support clean EHR integration, and be expressed in metrics your finance team and clinicians both respect.

Think of this as optimizing triage efficiency: faster decisions with fewer touches, while preserving reviewability and improving patient outcomes.

Outcomes: Track These from Day One

- Time-to-disposition (median): baseline → target (e.g., −30–50%).

- Nurse minutes per case: documentable reduction (not task-shifting).

- Disposition mix shift: more self-care/async, fewer unnecessary live visits.

- After-hours pickup SLA: e.g., 08:00 local with escalation exceptions.

- Safety: false-negative rate, 72-hour re-contact, override rate (by reason).

- Patient experience: “understood next step?” yes-rate; no-show reduction for routed visits.

- Cost/unit: $ per triage (inference + platform) vs. staff time saved.

Guardrails

- Human-in-the-loop thresholds: any red flag or ≥2 amber → immediate review.

- Rationale surfaced to clinicians; confidence bands, not vibes.

- Immutable audit trail: inputs, reasoning snapshot, disposition, override + signer.

- Privacy by design: PHI scoping, least privilege, retention window (supports HIPAA compliance).

- Bias monitoring: performance by language/age/sex/race where appropriate.

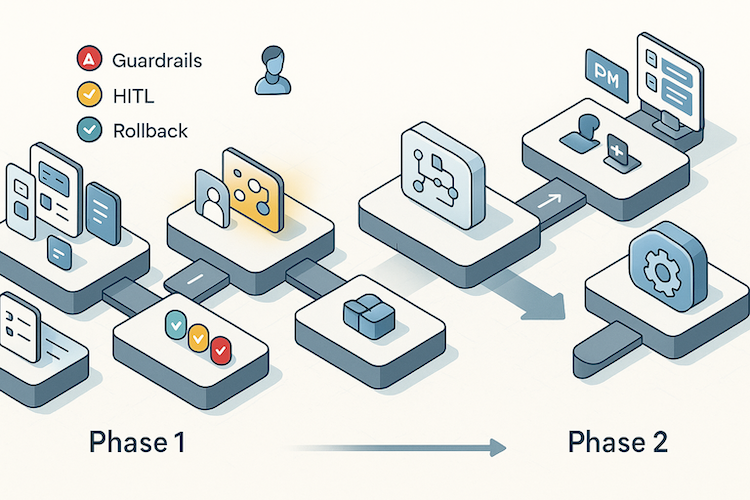

- Shadow-mode rollout before touching live routing.

- Kill switch + rollback criteria; feature flags for staged rollout.
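The human-in-the-loop threshold from the guardrails list is simple enough to pin down in code. Here is a minimal sketch, assuming upstream rules tag each case with severity labels; the function name and the `"red"`/`"amber"` strings are illustrative, not part of any standard.

```python
from collections import Counter
from typing import Iterable


def needs_immediate_review(flags: Iterable[str]) -> bool:
    """Return True when a case must go straight to a clinician.

    `flags` holds the severity labels ("red" or "amber") raised by
    upstream rules. The labels are hypothetical names for this sketch.
    """
    counts = Counter(flags)
    # Guardrail: any red flag, or two or more amber flags.
    return counts["red"] >= 1 or counts["amber"] >= 2
```

Keeping the threshold in one small, tested function means the same rule can back the intake fast-path, the orchestration layer, and the audit report.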

Scope

Scope keeps clinical triage honest—define channels, populations, data sources, and autonomy so you ship value without overreach.

- Autonomy level: assistive now; limited autonomy later (if ever).

- Channels: portal/app, SMS/IVR, telehealth waiting room.

- Populations & languages: start narrow; expand with evidence.

- Data sources: symptom form, prior history/meds, RPM vitals, recent encounters.

- Out-of-scope (for now): high-acuity autonomy, med changes, diagnostic claims.

Definition of done: outcomes trending to target for 2–3 consecutive sprints, safety stable or improving, and no net new bottlenecks downstream.

AI Triage Architecture at a Glance

A production-grade AI triage system isn’t “a model.” It’s a pipeline that ingests messy signals, reasons transparently, and turns risk into routable work your ops can actually fulfill. Think of it as the backbone of digital triage—built for uptime, explainability, and handoffs that actually happen.

1. Ingestion and Identity

- Channels: app/portal forms, secure messaging, IVR/SMS, RPM device streams.

- Identity & consent: auth, patient matching, consent scope/timebox.

- Pre-validation: required fields, red-flag fast-path to human.

2. Normalization and Feature Prep

- Map inputs to FHIR (QuestionnaireResponse, Observation, Condition, MedicationStatement).

- Unit normalization, de-dup, timestamp sanity, device noise filtering.

- Context hydrate: recent encounters, problems, meds/allergies, prior dispositions.

3. Reasoning Layer (Ensemble, Explainable)

- Rules aligned to clinical guidelines: protocol checklists/pathways for deterministic exclusions and red flags.

- Machine learning classifiers: symptom patterns, risk scoring, false-positive suppression.

- Natural language processing: converts narratives to structured features + rationale summary (with guardrails/RAG).

- Output: disposition suggestion, confidence band, rationale atoms (the why).
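One way to keep the reasoning layer honest is to make its output a typed object that refuses to exist without a rationale. This is a sketch under assumptions: the class and field names (`TriageSuggestion`, `RationaleAtom`, `model_version`) are hypothetical, and the confidence band is modeled as a low/high probability pair.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RationaleAtom:
    source: str  # which layer produced it: "rule", "model", or "nlp"
    text: str    # one short, clinician-readable reason


@dataclass(frozen=True)
class TriageSuggestion:
    disposition: str
    confidence_band: Tuple[float, float]  # (low, high), each in [0, 1]
    rationale: Tuple[RationaleAtom, ...]
    model_version: str

    def __post_init__(self) -> None:
        low, high = self.confidence_band
        if not (0.0 <= low <= high <= 1.0):
            raise ValueError("confidence_band must satisfy 0 <= low <= high <= 1")
        if not self.rationale:
            # No "why" means nothing to show a clinician.
            raise ValueError("a suggestion needs at least one rationale atom")
```

Freezing the object also makes it safe to store the exact suggestion that was shown in the audit trail.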

4. Orchestration and Disposition

- Apply guardrails (from the Guardrails list above): any red or ≥2 amber → human review.

- Create tasks/orders: FHIR Task/ServiceRequest, or note insert with routing tag.

- Scheduling handoff: PM/telehealth queue; idempotent tickets; backpressure aware.

- Notifications: patient guidance + internal alerts, all immutably logged.

5. Human-in-the-Loop UI

Clinician control by design; see Human-in-the-Loop below for rationale, overrides, and feedback patterns.

6. Safety, Privacy, Compliance

- PHI scoping/least privilege; encryption in transit/at rest; masked logs.

- Immutable audit trail (inputs, model version, prompt/context, outputs, signer).

- Feature flags, shadow mode, kill switch; deterministic fallback pathways.

- Bias surveillance: performance by cohort/language; alert on regressions.

For emergency triage or emergency department–adjacent flows, keep automation assistive only and force immediate human review on red flags.
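Bias surveillance by cohort, from the list above, can start as a plain false-negative tally per group. The sketch below assumes you already have labeled outcomes; the tuple layout and cohort keys are illustrative choices.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (cohort, predicted_urgent, actually_urgent)
Record = Tuple[str, bool, bool]


def false_negative_rate_by_cohort(records: Iterable[Record]) -> Dict[str, float]:
    """Share of truly urgent cases the system failed to flag, per cohort."""
    urgent = defaultdict(int)
    missed = defaultdict(int)
    for cohort, predicted_urgent, actually_urgent in records:
        if actually_urgent:
            urgent[cohort] += 1
            if not predicted_urgent:
                missed[cohort] += 1
    # Cohorts with no urgent cases are omitted rather than reported as 0.
    return {cohort: missed[cohort] / n for cohort, n in urgent.items()}
```

Comparing these rates release over release is what turns "alert on regressions" into a concrete check; with small cohorts, treat the numbers as a prompt for review rather than proof.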

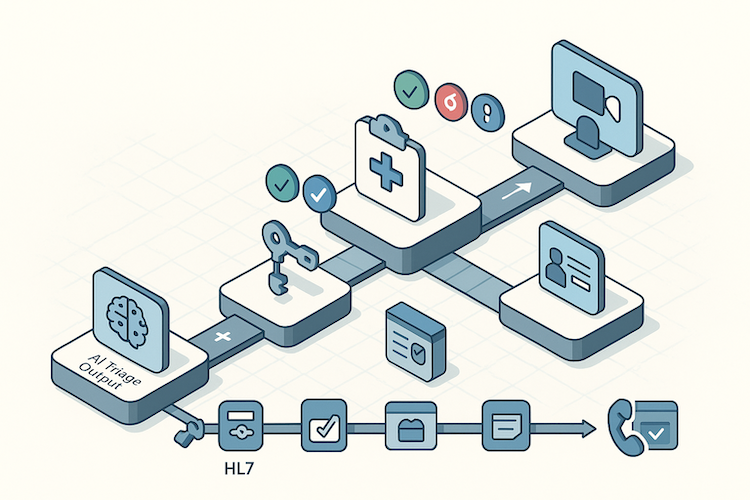

7. Integration Buses (Write-Back That Matters)

- EHR: notes/tasks/orders/inbox messages; status callbacks.

- PM/RCM: eligibility, copay, scheduling, visit types.

- Telehealth: warm handoff to waiting room or asynchronous queue.

- RPM: vitals trend ingestion; anomaly back-pressure rules.

Built-in interoperability here is what turns suggestions into care.

8. Observability and Model Ops

- Dashboards: time-to-disposition, nurse minutes saved, override rate, safety events.

- Canary releases, A/B, version pinning; automated rollback.

- Drift detection; golden-set replays; retraining cadence with approval gates.
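A golden-set replay can be as small as the function below: run curated cases through a candidate version and block the release on mismatches. The case format, the `candidate` callable, and the zero-tolerance default are assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]
GoldenItem = Tuple[Case, str]  # (input case, expected disposition)


def replay_golden_set(
    golden: List[GoldenItem],
    candidate: Callable[[Case], str],
    max_mismatch_rate: float = 0.0,
) -> Tuple[bool, List[Tuple[Case, str, str]]]:
    """Return (release_ok, mismatches) for a candidate triage function."""
    mismatches = []
    for case, expected in golden:
        got = candidate(case)
        if got != expected:
            mismatches.append((case, expected, got))
    rate = len(mismatches) / len(golden) if golden else 0.0
    return rate <= max_mismatch_rate, mismatches
```

Returning the mismatches, not just a pass/fail flag, gives the approval gate something concrete to review.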

9. Reliability Engineering

- Queues + retries with idempotency keys; rate limits; circuit breakers.

- Degraded mode playbooks (e.g., rules-only) during upstream outages.

- SLOs and on-call rotation with clear “press red button” procedures.
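The circuit-breaker and degraded-mode bullets fit together naturally: when the primary reasoning path keeps failing, stop calling it for a while and fall back to rules only. This is a deliberately simplified sketch (single-threaded, in-memory, hypothetical class name), not a production breaker.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self._opened_at is None:
            return False
        if self._clock() - self._opened_at >= self.reset_after_s:
            # Cool-down elapsed: close the breaker and let calls through again.
            self._opened_at = None
            self._failures = 0
            return False
        return True

    def call(self, primary: Callable[..., Any],
             fallback: Callable[..., Any], *args: Any) -> Any:
        if self.is_open():
            return fallback(*args)  # degraded mode, e.g. rules-only
        try:
            result = primary(*args)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            return fallback(*args)
        self._failures = 0
        return result
```

Injecting the clock keeps the breaker testable, which matters when the drill is part of your release checklist.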

Data Plumbing for AI-based Triage Systems

Most failed pilots aren’t killed by the model; they’re killed by data soup. An AI-based triage system lives or dies on identity, consent, normalization, and provenance. Treat the plumbing as a first-class feature, or you’ll be debugging “why did this route to urgent care?” at 2 a.m. For patient triage to be safe and useful, your patient data and medical history must be normalized and provenance-rich—otherwise every “disposition” is just guesswork.

Minimum Viable Data — Ship This First

- Structured symptom intake + free-text note (don’t choose; use both).

- Active problems, meds/allergies, and last 3 encounters (explicit medical history).

- Vitals trends (7–14 days) if devices are reliable.

If RPM is in scope, borrow your playbook from remote patient monitoring app development—trend windows, outlier handling, and device provenance are table stakes.

FHIR Mappings That Actually Matter

Map what you will act on: QuestionnaireResponse (intake), Observation (vitals), Condition (problems), MedicationStatement/MedicationRequest (meds), AllergyIntolerance (allergies), and Encounter (context).

Normalize units (mmHg, mg/dL vs mmol/L), de-dup records, fix timestamps, and hydrate with the last meaningful clinical touch. If a field won’t change routing or guidance, don’t ingest it yet—data minimalism reduces risk and accelerates go-live. Done right, this is healthcare IT that enables workflow optimization, not another click farm.
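Unit normalization is worth a concrete example because the failure mode is silent. A minimal sketch for glucose, assuming readings arrive with a unit string; the function name is hypothetical, and the conversion factor follows from glucose's molar mass of roughly 180.16 g/mol.

```python
# 1 mmol/L of glucose is about 18.016 mg/dL (molar mass ~180.16 g/mol).
MGDL_PER_MMOLL_GLUCOSE = 18.016


def glucose_to_mgdl(value: float, unit: str) -> float:
    """Normalize a glucose reading to mg/dL; reject unknown units loudly."""
    unit_key = unit.strip().lower()
    if unit_key == "mg/dl":
        return value
    if unit_key == "mmol/l":
        return value * MGDL_PER_MMOLL_GLUCOSE
    # Guessing a unit is how 5.5 mmol/L gets read as a dangerous 5.5 mg/dL.
    raise ValueError(f"unsupported glucose unit: {unit!r}")
```

Raising on an unknown unit, instead of defaulting, pushes the problem to ingestion where it can be fixed once.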

Quality Pitfalls That Derail Pilots

- Unit chaos: glucose in mmol/L looks “hypoglycemic” if you assume mg/dL.

- Clock drift: device timezones and server time cause phantom “night fevers.”

- Free-text only: no coded terms → no reliable routing or analytics.

- Duplicate devices: two watches, one patient; trend lines implode.

- Thin history: cold starts force over-cautious dispositions.
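Clock drift in particular has a cheap first defense: convert every device-local reading to UTC at ingestion. The sketch assumes the device reports a naive local time plus its UTC offset in minutes; both the function name and that input shape are illustrative.

```python
from datetime import datetime, timedelta, timezone


def to_utc(local_naive: datetime, utc_offset_minutes: int) -> datetime:
    """Attach the device's UTC offset to a naive timestamp and convert to UTC."""
    if local_naive.tzinfo is not None:
        raise ValueError("expected a naive device-local timestamp")
    device_tz = timezone(timedelta(minutes=utc_offset_minutes))
    return local_naive.replace(tzinfo=device_tz).astimezone(timezone.utc)
```

For example, a reading stamped 23:30 on a device at UTC-5 lands at 04:30 UTC the next day, which is the difference between an evening fever and a phantom night fever.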

Privacy isn’t a footer—scope PHI to least privilege, encrypt everything, mask logs, and set retention with a purpose. Capture provenance (source system, device ID, who touched the record) so overrides teach the system, not just your on-call.

Clean pipes turn triage from “interesting demo” into dependable operations. With data settled, Build vs Buy vs Hybrid becomes an economic choice—not a rescue mission.

Build vs Buy vs Hybrid

There isn’t a universal right answer—only the one that fits your risk, timeline, and data reality. The trap is thinking you’re choosing a model. You’re choosing an operating model: who owns safety, who controls updates, and who carries the pager at 2 a.m. Also: which approach best improves patient care without slowing healthcare providers down.

When to Buy

Buy if you need a fast, bounded pilot with clear guardrails and your use case matches a vendor’s proven pathway (e.g., telemedicine intake or ED-adjacent screening with conservative defaults). You’re trading flexibility for speed and validated safety workflows. Make sure the contract gives you:

- BAA

- model/version transparency

- rationale exposure

- export of your data and labels

- a negotiated exit ramp (including a grace period to replicate workflows)

Good fit: narrow intake flows, minimal customization, standard integrations already supported, baseline diagnostic accuracy validated on similar cohorts.

When to Build

Build when triage logic must reflect your unique workflows, your EHR/PM landscape is…creative, or data governance is non-negotiable (research use, in-house labeling, private PHI). You own the roadmap and the risk. Ship assistive first, keep rules in front of ML, and invest early in observability and shadow testing.

Good fit: multi-channel intake, complex routing, or specialty populations where care coordination and explainability matter more than vendor velocity.

Why Hybrid Wins Most RFPs

Start with a vendor for the reasoning core (rules + predictive analytics) and surround it with your plumbing: intake UX, FHIR/PM adapters, safety gates, audit, and analytics. Over time, replace black-box pieces with your models where it pays off. This avoids year-long builds while keeping architectural sovereignty across your healthcare systems.

Cost Model to Compare Apples

- Buy: platform + per-triage fees, integration setup, change orders.

- Build: engineering + labeling + hosting/inference + monitoring + on-call.

- Hybrid: smaller platform fee + your integration/SRE costs; phased swap-outs reduce TCO and improve healthcare efficiency via targeted ownership.

Bottom line: default to hybrid unless you have an urgent pilot (buy) or a differentiated workflow you can’t express without source control (build). Whatever you pick, demand explainability, real-time analysis where it truly helps, alignment with clinical protocols, and hard rollback paths—AI triage software without those is just a liability with a dashboard.

Implementation Plan

You don’t “launch AI,” you operationalize it. A credible AI triage implementation moves from invisible learning to assistive impact without betting the clinic on day one.

Weeks 0–1 — Align and Baseline

Start with the boring bits that save you later. Lock success metrics:

- time-to-disposition

- nurse minutes per case

- disposition mix

- override rate

- safety events

Then write the rollback criteria in plain English.

Capture a two-week baseline from current workflows. Draft red-flag/amber-flag rules and the after-hours SLA. Engineers wire feature flags and an immutable audit log; clinicians sign the escalation playbook so governance isn’t “coming soon.”

Weeks 2–3 — Shadow Mode and Wiring

Plug into intake channels and the EHR/PM rails, but keep the model in shadow. It reads every case, proposes a disposition, and logs rationale—clinicians never see it yet.

Run golden-set replays nightly to sanity-check risk scores and surface patterns. Build the human-in-the-loop UI with rationale atoms, confidence bands, and one-tap overrides (with reasons). Train staff with synthetic cases so the first live day isn’t their first look.

Ship tests that matter: idempotent task creation, retry/circuit-breaker behavior, and “press red button” kill switch drills.

Weeks 4–6 — Assistive Launch (Limited Scope)

Flip the flag for one channel, one population, one shift. Clinicians see suggestions alongside the reasoning snapshot; they remain the decision-makers.

Track overrides and categorize them:

- missing data

- wrong threshold

- poor guidance

Start with conservative defaults (more “review” than “self-care”) and relax only when the metrics move the right way. Add patient-side guidance messages templated by acuity and language.

If a regression hits (safety signal, override spike), roll back without drama—because you wrote the rule in Week 1.

Weeks 7–10 — Tune, Prove, and Scale

Now do the unglamorous work that creates ROI. Use override taxonomy to patch rules, adjust thresholds, or retrain small models. Add canary releases and version pinning so updates don’t surprise night shift.

A/B test guidance copy and routing targets to reduce no-shows. Expand channels (e.g., secure messaging) and populations as evidence accrues. Close the loop with weekly ops reviews:

- show time-to-disposition deltas

- nurse minutes saved

- any safety incidents with corrective actions

Exit criteria: two to three consecutive sprints with targets hit or trending, a stable (or improving) safety profile, and no new downstream bottlenecks. At that point, you’re not experimenting—you’re operating.

Next, we’ll make the human-in-the-loop UI something clinicians trust at a glance.

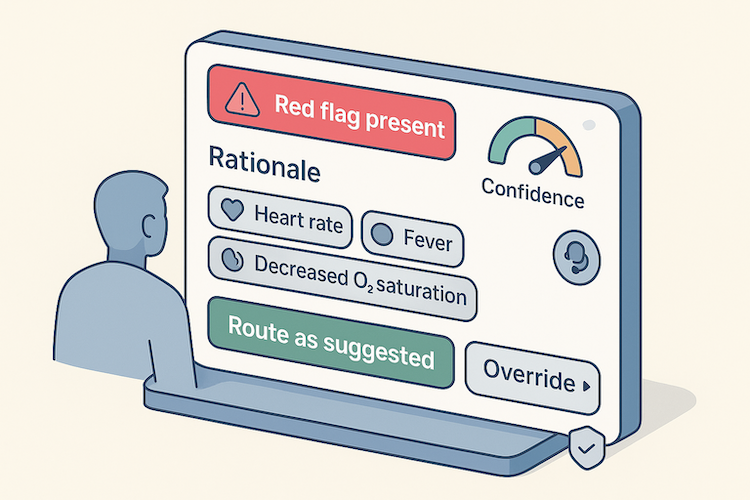

Human-in-the-Loop That Clinicians Trust

Trust isn’t a tooltip—it’s earned in the five seconds a clinician scans the screen. AI-powered triage succeeds when the UI feels like a competent junior: transparent, humble, and easy to overrule.

Rationale, Not Mystery

Show the why before the what: key symptoms/vitals, relevant history, and the rules or signals that triggered the suggestion. Pair it with a plain-language rationale and a discreet confidence band. If a disposition can’t be justified in one short paragraph, downgrade it to “needs review.”

One-Tap Control, Not Homework

The default workflow should be: glance → accept or override.

- Primary action: “Route as suggested.”

- Secondary: “Override” with a short, curated reason list.

- Always visible: escalation to human consult and a “red flag present” banner.

Feedback That Actually Teaches

Every override writes structured feedback to the audit trail—inputs, model/version, rationale snapshot, disposition, signer, and override reason. Those reasons drive weekly tuning (rules first, models second), and they’re the backbone of your drift and safety dashboards.
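Structured override feedback can be tallied with very little code. In this sketch the reason codes mirror the categories used in the implementation plan (missing data, wrong threshold, poor guidance); the `OverrideEvent` fields and function name are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple

OVERRIDE_REASONS = ("missing_data", "wrong_threshold", "poor_guidance")


@dataclass(frozen=True)
class OverrideEvent:
    case_id: str
    model_version: str
    suggested: str
    final: str
    reason: str
    signer: str


def override_summary(events: Iterable[OverrideEvent],
                     total_cases: int) -> Tuple[float, List[Tuple[str, int]]]:
    """Return (override rate, reasons sorted by frequency)."""
    if total_cases <= 0:
        raise ValueError("total_cases must be positive")
    by_reason = Counter(e.reason for e in events)
    unknown = set(by_reason) - set(OVERRIDE_REASONS)
    if unknown:
        # A curated list only teaches if free-form reasons can't sneak in.
        raise ValueError(f"unknown override reasons: {sorted(unknown)}")
    return sum(by_reason.values()) / total_cases, by_reason.most_common()
```

The top reason in the sorted list is usually the first rule or threshold to revisit in the weekly tuning session.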

Respect Attention—and Patients

Keep cognitive load low: stable layout, readable typography, zero pop-ups. Localize instructions; surface critical ED/urgent-care guidance to the patient with the same clarity. When in doubt, bias to safety and make the escalation path obvious.

Design for explainability and control, and clinicians will use it. Ship mystery boxes, and they’ll ignore it. Next up: bake the same discipline into compliance so auditors nod instead of flinch.

Compliance and Risk — What Actually Matters

Compliance isn’t a paperwork sprint; it’s architecture. For AI triage in a healthcare app to survive audit and scale, lock these decisions early:

Data Exposure Model

Scope PHI to least privilege; encrypt everywhere; no PHI in logs. Redact/tokenize before any LLM call; pin region (US-only) and sub-processors under your BAA chain. Keep a living data-flow diagram and deny by default. “De-identified for training” still needs lineage and re-ID risk controls.
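To illustrate the tokenize-before-LLM step, here is a toy redactor that swaps a few identifier patterns for placeholder tokens and keeps the originals in a local vault. It is a sketch only: the three regexes are illustrative assumptions and are nowhere near a complete de-identification method, so treat it as the shape of the step rather than a control you can rely on.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; real PHI detection needs far broader coverage.
_PATTERNS = [
    ("MRN", re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE)),
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"\b\d{3}[ .-]?\d{3}[ .-]?\d{4}\b")),
]


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace matched identifiers with tokens; return (safe_text, vault)."""
    vault: Dict[str, str] = {}

    def _swap(label: str):
        def repl(match: "re.Match[str]") -> str:
            token = f"[{label}_{len(vault) + 1}]"
            vault[token] = match.group(0)  # stays local, never sent out
            return token
        return repl

    for label, pattern in _PATTERNS:
        text = pattern.sub(_swap(label), text)
    return text, vault
```

Only the redacted text crosses the boundary; the vault stays inside your environment so tokens can be restored in the clinician-facing summary.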

Clinical-Claim Boundary

Stay inside CDS enforcement discretion by making outputs transparent, non-determinative, and reviewable by a professional—with an obvious confirm step.

If your triage suggestion drives diagnosis/treatment without independent review, you’re in SaMD territory: change control, post-market surveillance, and validation evidence aren’t optional.

Auditability and Lifecycle

Immutable trails:

- inputs

- model/version

- prompts/context

- outputs

- signer

- override reason

Bias surveillance by cohort/language. Release discipline: canaries, version pinning, rollback, and golden-set replays on every update.
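One common way to approximate an immutable trail in application code is a hash chain: each record commits to the one before it, so edits become detectable. The sketch below is an assumption about how you might do it, with hypothetical function names; it makes tampering evident but does not by itself prevent it, so pair it with write-once storage.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(prev_hash: str, entry: Dict[str, Any]) -> str:
    body = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_audit(log: List[Dict[str, Any]], entry: Dict[str, Any]) -> Dict[str, Any]:
    """Append an entry whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"entry": entry, "prev_hash": prev_hash,
              "hash": _digest(prev_hash, entry)}
    log.append(record)
    return record


def verify_audit(log: List[Dict[str, Any]]) -> bool:
    """Recompute the chain; any edited or reordered record breaks it."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["entry"]):
            return False
        prev_hash = record["hash"]
    return True
```

Each entry would carry the fields listed above (inputs, model/version, prompts/context, outputs, signer, override reason).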

ONC/EHR Reality

USCDI coverage + FHIR R4 read/write is table stakes; PM system hooks for eligibility/scheduling prevent “stuck” dispositions. Avoid info-blocking pitfalls—document how patients and external apps access the same data.

Policy-as-Code

Gate PHI access, cross-border calls, red-flag handling, and kill-switch rules in code (OPA or equivalent). If it can’t pass audit in a screenshare, it’s not ready.
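In OPA these gates would be written in Rego; to keep this guide's examples in one language, here is the same deny-by-default idea as a plain Python sketch. The roles, actions, and region codes are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    role: str
    action: str
    region: str
    kill_switch_on: bool = False


# Explicit allow-list; anything not listed is denied.
ALLOWED = frozenset({
    ("triage_nurse", "read_phi"),
    ("triage_nurse", "route_case"),
    ("triage_service", "call_llm"),
})
ALLOWED_REGIONS = frozenset({"us"})
AUTOMATION_ACTIONS = frozenset({"route_case", "call_llm"})


def is_allowed(req: Request) -> bool:
    """Deny by default: every check must pass for the request to proceed."""
    if req.kill_switch_on and req.action in AUTOMATION_ACTIONS:
        return False  # kill switch halts automated routing and LLM calls
    if req.region not in ALLOWED_REGIONS:
        return False  # no cross-border calls
    return (req.role, req.action) in ALLOWED
```

Because the policy is data plus one function, it can be unit-tested and walked through in a screenshare.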

Use a HIPAA compliant app development baseline, then add FDA/ONC layers as your claims grow. Minimize, explain, gate—that’s the difference between compliant and merely careful.

Integration Patterns That Survive Production

You don’t have an AI feature until routing lands where ops can act. Here’s the skinny on AI triage integration that doesn’t melt on Mondays:

EHR Write-Backs That Move Work

- FHIR objects that matter: Task (owner/due/status), ServiceRequest (labs/referrals), CommunicationRequest (inbox), Observation (structured vitals), plus a note addendum for rationale.

- Status callbacks that mark done / blocked / needs info—no free-text limbo.

- Bridge HL7v2 only where FHIR gaps exist; keep mappings versioned.
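To make the Task write-back concrete, the sketch below assembles a FHIR R4 `Task` as a plain dictionary. The element names (`status`, `intent`, `priority`, `for`, `owner`, `restriction.period`, `note`) come from the R4 Task resource; the identifier system URL, the helper name, and the choice of `intent: "proposal"` for an assistive suggestion are assumptions your EHR may not share.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Optional

VALID_PRIORITIES = {"routine", "urgent", "asap", "stat"}  # FHIR request-priority


def build_triage_task(patient_id: str, owner_ref: str, summary: str,
                      rationale: str, priority: str, due_in_hours: int,
                      idempotency_key: str,
                      now: Optional[datetime] = None) -> Dict[str, Any]:
    if priority not in VALID_PRIORITIES:
        raise ValueError(f"invalid priority: {priority!r}")
    authored = now or datetime.now(timezone.utc)
    due = authored + timedelta(hours=due_in_hours)
    return {
        "resourceType": "Task",
        # Hypothetical identifier system; lets retries find the same Task.
        "identifier": [{"system": "https://example.org/triage/task-key",
                        "value": idempotency_key}],
        "status": "requested",
        "intent": "proposal",  # assistive suggestion, not an order
        "priority": priority,
        "description": summary,
        "for": {"reference": f"Patient/{patient_id}"},
        "owner": {"reference": owner_ref},
        "authoredOn": authored.isoformat(),
        "restriction": {"period": {"end": due.isoformat()}},
        "note": [{"text": rationale}],
    }
```

The rationale travels in `note` so whoever picks up the task sees the why alongside the what.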

PM/RCM Rails

- Idempotent appointment creation with dedupe keys to prevent double-booking chaos.

- Persist payer plan in the audit trail to win downstream disputes.
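Idempotent appointment creation comes down to deriving a stable key and checking it before you write. The in-memory `AppointmentBook` below is a stand-in for your PM system, and the key fields (patient, visit type, slot start) are an assumption about what defines a duplicate in your setup.

```python
import hashlib
from typing import Dict, Tuple


def dedupe_key(patient_id: str, visit_type: str, slot_start_iso: str) -> str:
    raw = "|".join((patient_id, visit_type, slot_start_iso))
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class AppointmentBook:
    """Toy in-memory scheduler standing in for a PM system."""

    def __init__(self) -> None:
        self._by_key: Dict[str, dict] = {}

    def create(self, patient_id: str, visit_type: str,
               slot_start_iso: str) -> Tuple[dict, bool]:
        """Return (appointment, created); retries get the existing booking."""
        key = dedupe_key(patient_id, visit_type, slot_start_iso)
        existing = self._by_key.get(key)
        if existing is not None:
            return existing, False
        appt = {"id": f"appt-{len(self._by_key) + 1}", "patient_id": patient_id,
                "visit_type": visit_type, "start": slot_start_iso, "key": key}
        self._by_key[key] = appt
        return appt, True
```

A retried request after a timeout then returns the original booking instead of creating a second one.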

Telehealth Handoff That Doesn’t Melt on Mondays

- Single pattern: queue to waiting room with reason code + triage summary, attempt a warm handoff, and fall back to an async message if no one is immediately available.

Treat this like telehealth app development: SLOs, no-show logic, language routing.

Cross-Cutting Ops You’ll Actually Use

- Correlation IDs end-to-end + per-adapter SLO dashboards so failures are findable.

- Daily reconciliation: created tasks/appointments vs completed actions—catch silent drops.

- Backpressure rules to prevent alert floods during upstream outages.

Model Strategy Without the Hangover

This is AI in healthcare done like safety-critical software. In AI medical triage, you minimize unsafe shortcuts while reducing clinician minutes:

- rules first

- small supervised models second

- LLMs only when text chaos blocks progress

Rules Set the Guardrails

- Encode red/amber flags, disposition thresholds, and “never-autonomy” zones.

- Keep rules human-readable and tested—audits love obvious.

When Rules Plateau, Add ML

- Use calibrated, small, updateable models; learn from your override taxonomy (not vibes).

- Favor weekly retrains and simple features you can explain.

Use LLMs for Text Wrangling, Not Diagnosis

Summarize narratives into structured features, turn free text into guideline-aligned checklists, normalize multilingual intake—then hand back to rules/ML.

Stay within clinical decision support systems boundaries; keep prompts templated and retrievable.
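The ordering described in this section (text extraction feeds features, rules decide first, the model only fills the gap) can be sketched as a small cascade. All three callables are placeholders you would supply; the case layout with `structured` and `free_text` keys is an assumption.

```python
from typing import Any, Callable, Dict, Optional

Features = Dict[str, Any]


def triage(case: Dict[str, Any],
           rules: Callable[[Features], Optional[str]],
           model: Callable[[Features], str],
           text_to_features: Optional[Callable[[str], Features]] = None
           ) -> Dict[str, str]:
    features: Features = dict(case.get("structured", {}))
    free_text = case.get("free_text")
    if text_to_features is not None and free_text:
        # The LLM/NLP step only extracts features; it never picks a disposition.
        for name, value in text_to_features(free_text).items():
            features.setdefault(name, value)  # structured intake wins on conflict
    ruled = rules(features)
    if ruled is not None:
        return {"disposition": ruled, "decided_by": "rules"}
    return {"disposition": model(features), "decided_by": "model"}
```

Recording `decided_by` keeps the audit trail clear about which layer made each call, which helps when you decide whether a fix belongs in a rule or a retrain.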

Evolve on Evidence

Weekly: apply override-driven rule fixes, then lightweight model tweaks; ship with canaries/pins/rollbacks from your Model-Ops playbook.

Boring reliability beats leaderboard glitter: suggestions that make sense, are easy to overrule, and get better every sprint without rewriting the playbook.

Measuring ROI — No Spreadsheet Yoga

Finance doesn’t fund vibes. Treat AI triage for existing apps like any ops investment: unit economics first, rollout only where the math clears.

Step 1 — Define the Unit

Cost/triage = (platform fee + inference + monitoring/SRE + amortized build & validation) ÷ monthly triage volume.

Value/triage = (staff minutes saved × fully loaded clinician rate) + (avoided after-hours vendor/locum fees) + (capacity reclaimed for reimbursable work).

Step 2 — Add Safety and Trust Multipliers

- Safety debit = (false-negative rate × cost per adverse follow-up) + compliance overhead per event.

- Trust debit = (override rate × review minutes × clinician rate).

Net value/triage = Value − Safety debit − Trust debit.
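The formulas above translate directly into a few functions. Every number in the usage note is invented for illustration, and one interpretation is assumed: the compliance overhead is passed in already expressed per triage.

```python
def cost_per_triage(platform_fee: float, inference: float, monitoring_sre: float,
                    amortized_build: float, monthly_volume: int) -> float:
    if monthly_volume <= 0:
        raise ValueError("monthly_volume must be positive")
    return (platform_fee + inference + monitoring_sre + amortized_build) / monthly_volume


def value_per_triage(minutes_saved: float, clinician_rate_per_min: float,
                     after_hours_fees_avoided: float = 0.0,
                     capacity_reclaimed: float = 0.0) -> float:
    return (minutes_saved * clinician_rate_per_min
            + after_hours_fees_avoided + capacity_reclaimed)


def safety_debit(false_negative_rate: float, cost_per_adverse_followup: float,
                 compliance_overhead: float = 0.0) -> float:
    # Assumption: compliance_overhead is already a per-triage figure.
    return false_negative_rate * cost_per_adverse_followup + compliance_overhead


def trust_debit(override_rate: float, review_minutes: float,
                clinician_rate_per_min: float) -> float:
    return override_rate * review_minutes * clinician_rate_per_min


def net_value_per_triage(value: float, safety: float, trust: float) -> float:
    return value - safety - trust
```

With made-up inputs (4 minutes saved at $1.00/min, $0.50 of after-hours fees avoided, $1.00 of capacity reclaimed, a 1% false-negative rate at $50 per follow-up plus $0.10 overhead, and a 20% override rate at 2 review minutes), value is $5.50, the debits are $0.60 and $0.40, and net value per triage is $4.50. Compare that against cost per triage to see whether the math clears.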

Step 3 — Prove It by Cohort, Not Globally

Run separate ledgers for: portal intake, secure messages, RPM alerts, and after-hours. You’ll find ROI is lopsided (after-hours usually clears first).

What to Track

- Time-to-disposition (median, p90) vs baseline.

- Nurse minutes per case (with observation window).

- Disposition mix shift (self-care/async vs live).

- Override rate (by reason), 72-hour re-contact, safety events.

- Cost/triage trend vs volume (learning curve).

- Payback period = initial investment ÷ monthly net value.
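Payback by cohort is a one-line division, but the guard matters: a cohort with zero or negative monthly net value never pays back. The ledger layout and cohort names below are illustrative, and the figures in the usage note are invented.

```python
from typing import Dict, Optional


def payback_months(initial_investment: float,
                   monthly_net_value: float) -> Optional[float]:
    """Initial investment divided by monthly net value; None if it never clears."""
    if monthly_net_value <= 0:
        return None
    return initial_investment / monthly_net_value


def payback_by_cohort(ledgers: Dict[str, Dict[str, float]]) -> Dict[str, Optional[float]]:
    return {
        cohort: payback_months(l["initial_investment"], l["monthly_net_value"])
        for cohort, l in ledgers.items()
    }
```

For example, an after-hours cohort with a $30,000 investment and $3,000 of monthly net value pays back in 10 months, while a portal cohort running at negative net value returns `None`, which is a signal to fix it before scaling.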

Avoid Common Mirages

- “Task shifting” masquerading as savings (RN minutes down, MA minutes up).

- Denial leakage from bad scheduling/eligibility hooks.

- Counting “deflected visits” that were never going to happen.

Roll these numbers into your broader healthcare app development cost model so triage isn’t an orphan line item. If payback doesn’t tighten after two to three sprints, you don’t have an ROI problem—you have a data, integration, or trust problem. Fix those, then re-measure.

How Topflight Can Help with AI Triage

If you want a triage feature that clinicians actually use—and auditors don’t side-eye—we’ll help you ship the boring, reliable version: clear guardrails, routable outputs, and measurable ROI.

What we bring to the table

- AI strategy + architecture that survives audit. We design the ensemble (rules/ML/LLM), wire FHIR/HL7 safely, and stand up private LLM/RAG with Azure/Claude under a BAA—plus the MLOps you’ll need on day 2.

- Clinical decision automation without black-box vibes. Rationale-first UX, override taxonomy, drift monitors, and version-pinned releases—so updates don’t surprise night shift.

- Patient engagement + routing that closes the loop. Intake, guidance, and follow-ups integrated with EHR/PM rails (eligibility, scheduling, claims hygiene).

- Proven outcomes in adjacent AI builds. For Allheartz (AI-assisted RTM), we delivered up to 50% fewer in-person visits, 70% injury reduction, and 80% less clerical work—by pairing computer vision with clinician-ready workflows. Translation: we know how to turn signals into safer ops.

- Revenue stories that finance respects. With GaleAI, the system surfaced $1.14M/year in lost revenue via coding uplift at <1% cost of recovered revenue—evidence that model-plus-workflow beats dashboards.

How an engagement runs

- Architecture & risk review (your data, workflows, claims).

- Shadow-mode pilot with audit-ready logging and kill-switch drills.

- Assistive launch for one channel/population, then phased scale-out.

- ROI pack—minutes saved, mix shift, safety profile, and payback.

If you’re evaluating partners, sanity-check us against what matters: LLM/RAG competence in healthcare, FHIR/HL7 depth, HIPAA-first pipelines, and a track record of shipping AI that clinicians keep using. Whether you’re considering AI triage for existing apps or scoping net-new workflows, we’re the healthcare app developers who do that. Schedule a 30-minute architecture review and we’ll blueprint a safe, explainable triage flow your clinicians will actually use.

FAQ

Do we need a big LLM to do triage well?

No. Start with rules for red/amber flags and a small supervised model for risk scoring. Use an LLM only to summarize messy narratives into structured fields, not to diagnose. Keep it assistive and explainable so clinicians can review and overrule quickly.

How do we stay out of FDA SaMD territory?

Keep outputs transparent and non-determinative, require professional review before action, and present clear rationale. If a triage suggestion drives diagnosis or treatment without independent review, you’re entering SaMD and need validation evidence, change control, and post-market surveillance.

What's the minimum data to ship a reliable pilot?

Structured symptom intake plus a short free-text note, active problems and meds/allergies, the last few encounters, and 7–14 days of vitals if devices are trustworthy. Map only what you’ll act on to FHIR, normalize units and timestamps, and skip fields that won’t change routing.

Where does ROI usually show up first?

After-hours and portal intake cohorts. Measure cost per triage against staff minutes saved, watch disposition mix shift to self-care or async routes, and track safety signals and override reasons. If payback doesn’t improve after two to three sprints, fix data quality or integration gaps before scaling.

Build, buy, or hybrid for a first deployment?

Hybrid wins most often. License a reasoning core to move fast, wrap it with your intake UX, FHIR/PM adapters, safety gates, and audit trail, then replace black-box pieces with your own models where it actually improves cost, control, or performance.