In this blog, we’d love to talk about the different approaches to developing a mental health chatbot. Read on to learn how to create a therapeutic bot that could really help your patients instead of telling them to kill themselves (more on that below). We’ll discuss some of the best practices and various tools at your disposal.

Top Takeaways:

- Both patients and therapists agree that mental health chatbots could help clients better manage their own mental health. At the same time, no chatbot can replace real-life human interactions with a healthcare professional.

- The two main approaches to developing a therapeutic chatbot are goal-oriented and open-ended models, with guided and free-flowing dialog patterns, respectively.

- Some of the best tools for building and maintaining a mental health chatbot are DialogFlow and Rasa.

Table of Contents:

- What is a Mental Health Chatbot?

- Mental Chatbot Benefits

- How Do Mental Health Bots Work?

- Latest NLP Developments Concerning Chatbots

- What Tools Can We Use to Build a Mental Chatbot?

- Examples of Successful Mental Health Chatbots

What is a Mental Health Chatbot?

Chatbots are computer programs that use AI technologies to mimic human conversation. They can hold natural conversations with people by analyzing and responding to their input, using contextual awareness to understand the user’s message and provide an appropriate response.

Mental health chatbots provide self-assessment tools and guidance to help people cope with depression, stress, anxiety, sleep issues, and similar problems.

The software that delivers a chatbot’s intelligence to users can take many forms:

- custom mental health mobile app

- instant messenger

- avatar-based online experience

The form you choose for a mental health bot will mostly depend on how you envision your patients interacting with the program.

Mental Chatbot Benefits

Why is there a need for mental health chatbots? What motivates therapists to develop them, and patients to use them?

Accessibility

First of all, chatbots help lower two barriers to care: the stigma of seeking psychological help and the geographical limits of face-to-face counseling. They provide information instantly, 24/7, and serve exceptionally well for people working unconventional shifts.

Users also value the anonymity chatbots offer: according to a recent study, people may be more willing to disclose sensitive information to a chatbot than to a human therapist.

Cost savings

Mental health chatbots also save patients money on travel expenses and telephone charges. Practitioners, in turn, can focus on people in critical condition and work with health data uncovered by AI, which makes their work more efficient.

Read more on chatbot development cost

COVID-19 put therapeutic chatbots on the map

The COVID-19 pandemic really put chatbots in the spotlight. It sharply increased the need for mental health advisors, who were often unavailable because of the sudden influx of patients.

As it turned out, front-line providers were not trained well enough to provide emergency psychological support. According to a 2021 national survey, 22% of adults had used a mental health chatbot, and 47% said they would be interested in using one if needed.

Among those who had used one, 60% said they began using it during COVID-19, and 44% said they used chatbots exclusively, i.e., without talking to a human therapist.

What is the evidence to support AI efficacy for mental health overall?

In the short term, chatbots can mitigate the psychological harm of isolation and enable people to disclose their concerns. In addition, receiving positive emotional support can improve patients’ mental health.

Most respondents who had used chatbots reported a positive experience. Few professionals and experts had used them, however. Still, according to a recent survey, most healthcare professionals agree that chatbots can be helpful in mental health care and consider them fairly important.

Related: Machine Learning App Development Guide

How Do Mental Health Bots Work?

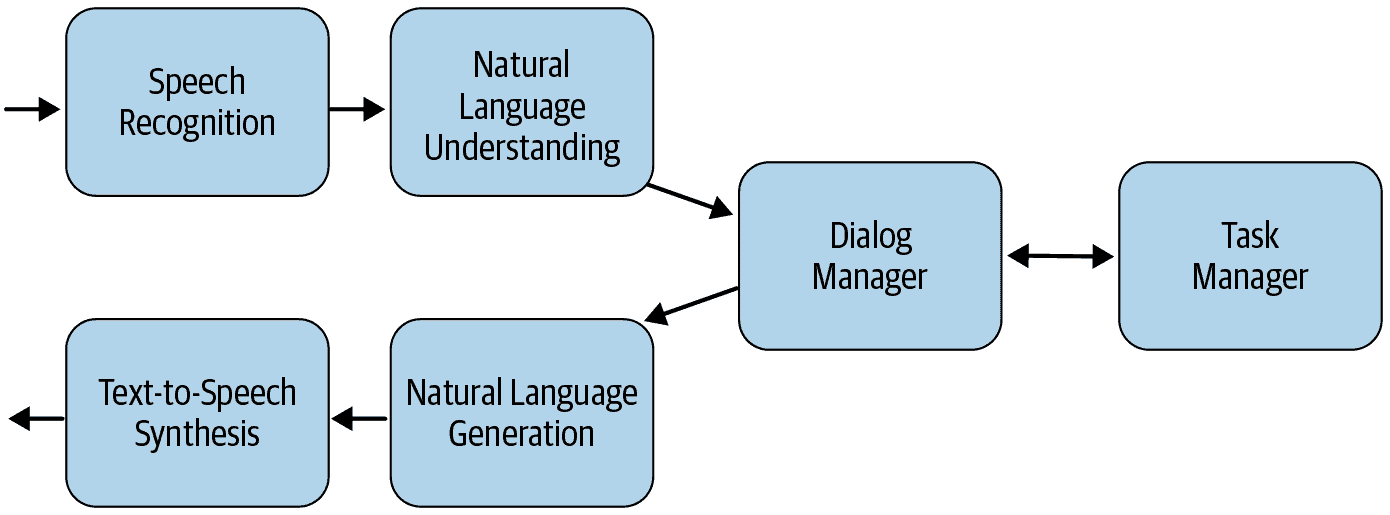

Here is a high-level overview of a simple chatbot architecture — the pipeline for a dialog system:

- Speech Recognition

For voice-enabled chatbots, we use off-the-shelf speech recognition algorithms to transcribe the user’s speech into text. Text-based chatbots skip this step.

- Natural Language Understanding

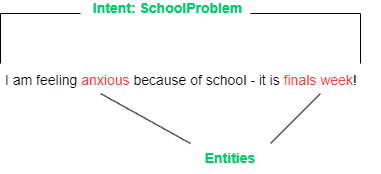

This step processes the user input using natural language processing. In a nutshell, the system tries to grasp the user’s intent and emotional state (sentiment analysis) and to extract entities (parameters/attributes) from the input.

- Dialog and Task Manager

This module controls the flow of the dialog, based on the pieces of information stored throughout the conversation. In other words, the dialog manager applies a strategy (or set of rules) to decide the next step, based on the user’s input and the overall context.

- Natural Language Generation

The dialog manager decides how to respond to the user, and the natural language generation module turns that decision into a human-friendly response. Responses can be predefined templates or generated more freely.

- Text-to-Speech Synthesis

As an optional step, the speech synthesis module converts the text back to speech so the user can hear it.
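To make the pipeline concrete, here is a minimal, text-only sketch in Python. It assumes simple keyword rules in place of trained NLU models, and every intent, keyword, and template below is hypothetical; speech recognition and text-to-speech are skipped. The point is to show how each stage hands its output to the next: NLU produces an intent, a sentiment score, and entities; the dialog manager stores context and picks an action; NLG fills a template.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists; a production NLU module would use a trained model.
INTENT_KEYWORDS = {
    "report_sleep": {"sleep", "insomnia", "tired"},
    "report_anxiety": {"anxious", "worried", "panic"},
    "greet": {"hello", "hi", "hey"},
}
NEGATIVE_WORDS = {"bad", "awful", "sad", "anxious", "worried", "tired"}
POSITIVE_WORDS = {"good", "great", "better", "calm", "happy"}

@dataclass
class NLUResult:
    intent: str
    sentiment: float  # from -1.0 (negative) to 1.0 (positive)
    entities: dict

def understand(text):
    """NLU step: detect intent, score sentiment and extract entities."""
    words = re.findall(r"[a-z']+", text.lower())
    intent = next((name for name, keys in INTENT_KEYWORDS.items()
                   if keys.intersection(words)), "unknown")
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    sentiment = (pos - neg) / (pos + neg) if pos + neg else 0.0
    entities = {}
    hours = re.search(r"(\d+)\s*hours?", text.lower())
    if hours:
        entities["sleep_hours"] = int(hours.group(1))
    return NLUResult(intent, sentiment, entities)

@dataclass
class DialogState:
    history: list = field(default_factory=list)  # intents seen so far
    slots: dict = field(default_factory=dict)    # entities collected so far

def decide(state, nlu):
    """Dialog manager: choose the next action from the input plus stored context."""
    state.history.append(nlu.intent)
    state.slots.update(nlu.entities)
    if "sleep_hours" in nlu.entities:
        return "give_sleep_tip"
    if nlu.intent == "report_sleep":
        return "ask_sleep_hours"
    if nlu.intent == "report_anxiety":
        return "offer_breathing_exercise"
    if nlu.intent == "greet":
        return "greet_back"
    if nlu.sentiment < 0:
        return "acknowledge_feelings"
    return "ask_to_rephrase"

TEMPLATES = {
    "ask_sleep_hours": "How many hours did you sleep last night?",
    "give_sleep_tip": "Thanks. {sleep_hours} hours is useful to know. Would a short wind-down routine help?",
    "offer_breathing_exercise": "That sounds hard. Would you like to try a one-minute breathing exercise?",
    "greet_back": "Hi! How are you feeling today?",
    "acknowledge_feelings": "I'm sorry you're going through this. Do you want to talk about it?",
    "ask_to_rephrase": "I'm not sure I understood. Could you tell me a bit more?",
}

def generate(action, state):
    """NLG step: fill a predefined response template with collected slots."""
    return TEMPLATES[action].format(**state.slots)

def respond(state, text):
    # Speech recognition and text-to-speech are skipped: this is a text-only bot.
    return generate(decide(state, understand(text)), state)
```

In a production bot, `understand` would be replaced by a trained NLU model (or a platform like DialogFlow or Rasa), but the hand-off between stages stays the same.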

Latest NLP Developments Concerning Chatbots

State-of-the-art NLP algorithms typically use large neural networks (so-called deep learning) to learn the nuances of language and to perform complex tasks with minimal intervention (i.e., natural language understanding and natural language generation).

Here are the main types of models used in such language-focused neural networks:

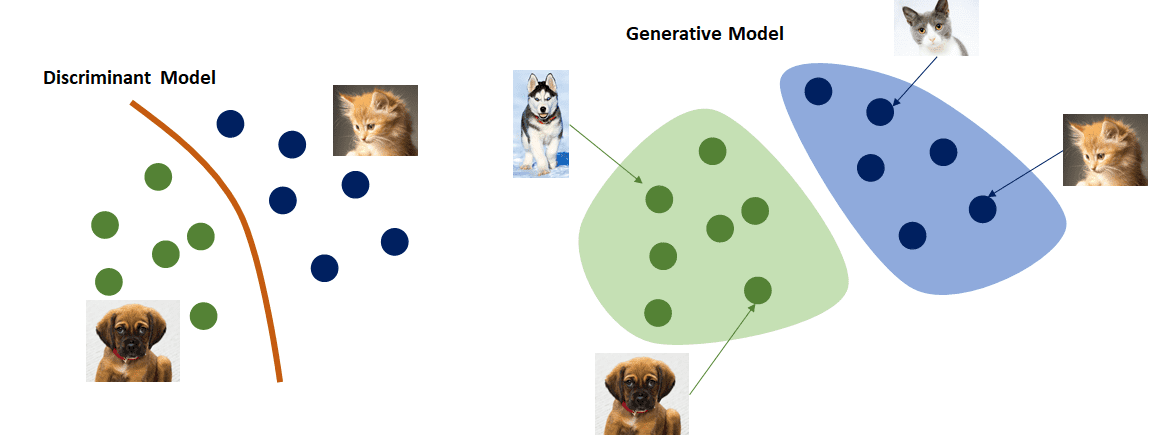

Generative model

A generative model learns to produce (output) new content with characteristics similar to the data it was trained on.

Example: if you train the model on enough “therapy” data, it will respond in ways that are statistically consistent with what a “therapist” would say.
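Real generative models are large neural networks, but the core idea (learn the statistics of the training text, then sample new text from them) can be shown with a toy bigram model. This sketch is purely illustrative: the three-sentence “therapy” corpus is made up, and the model only learns which word tends to follow which.

```python
import random
from collections import defaultdict

def train_bigrams(corpus):
    """Record which word follows which in the training sentences."""
    model = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev].append(nxt)  # duplicates preserve the frequencies
    return model

def sample_sentence(model, rng, max_words=20):
    """Generate a new sentence word by word from the learned statistics."""
    word, output = "<s>", []
    for _ in range(max_words):
        word = rng.choice(model[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

# Hypothetical toy "therapy" corpus.
corpus = [
    "it sounds like you are feeling overwhelmed",
    "it sounds like work has been stressful",
    "what do you think is causing the stress",
]
model = train_bigrams(corpus)
print(sample_sentence(model, random.Random(0)))
```

Every generated sentence stitches together word pairs seen in training, which is exactly why the quality and safety of the training data matter so much for a therapeutic bot.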

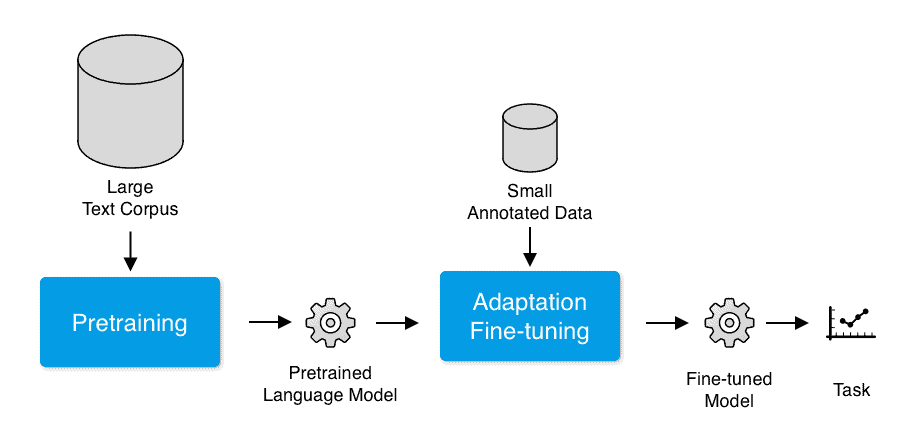

Pretrained Model

A pretrained model is first trained on a much more general data corpus; you then fine-tune it on a smaller annotated dataset that reflects the specifics of your problem.

Transformer Model

A neural network architecture that takes into account how words are used in context (instead of processing one word at a time). Through a mechanism called attention, the model tracks the relationships between words in a sentence and decides which parts of the word sequence deserve the most focus.
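The attention idea can be sketched in a few lines of plain Python. This is a simplified version of scaled dot-product self-attention, assuming made-up 2-dimensional word vectors and skipping the learned query, key, and value projections that real transformers use. Each word scores every word in the sentence, a softmax turns the scores into weights, and the weights say how much each word “pays attention” to the others.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors.

    Real transformers first multiply the inputs by learned query, key and
    value matrices; those are omitted to keep the sketch short.
    """
    d = len(vectors[0])
    outputs, weights = [], []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        w = softmax(scores)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, vectors)) for j in range(d)])
        weights.append(w)
    return outputs, weights

# Hypothetical 2-dimensional embeddings for "I", "feel", "anxious".
tokens = ["I", "feel", "anxious"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]
_, attn = self_attention(embeddings)
for token, row in zip(tokens, attn):
    print(token, [round(x, 2) for x in row])
```

With these toy vectors, “feel” puts its largest weight on “anxious”, which is the kind of context tracking that lets a transformer interpret a word based on its neighbors.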

What Tools Can We Use to Build a Mental Chatbot?

What tools would be most appropriate in building a mental health chatbot? It depends on a number of factors, including but not limited to:

- Is the conversation goal-oriented, or do we want it to be more open-ended?

- If the conversation is goal-oriented, do we already have the conversation flow mapped out?

- How much real-life conversational data do we have on hand?

- What general features are we looking for? Do these features come out of the box, or do they have to be custom-built for the application?

- What platforms do we want to integrate the chatbot with (e.g., mobile application, web application, messenger, etc.)?

Some chatbot-building systems, for example, DialogFlow, come with certain integrations out of the box.

- Do we need such features as speech-to-text (STT) or text-to-speech (TTS)?

- Does the chatbot need to support multiple languages?

- Does the chatbot need to make backend API calls to use collected or external data?

- What type of analytics do we plan to collect?

Goal-oriented therapeutic bot building

Consider using a goal-oriented chatbot if you want to guide your patient through a fixed set of questions (or procedures) during a counseling session. You should also consider this approach if you have very limited conversational data or need explicit guardrails that control how the conversation flows.
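A goal-oriented flow is essentially a scripted state machine. Below is a minimal Python sketch assuming a hypothetical three-question check-in with answers from 0 to 3 (not a validated clinical instrument). It illustrates the guardrailing idea: the bot does not move to the next step until it gets a valid answer.

```python
from dataclasses import dataclass, field

# Hypothetical check-in script; a real one would be designed with clinicians.
QUESTIONS = [
    ("mood", "On a scale from 0 (very low) to 3 (very good), how is your mood today?"),
    ("sleep", "On the same scale, how well did you sleep last night?"),
    ("energy", "And how is your energy level?"),
]
VALID_ANSWERS = {"0", "1", "2", "3"}

@dataclass
class GuidedSession:
    step: int = 0
    answers: dict = field(default_factory=dict)

    def prompt(self):
        """Return the current question, or the summary once the script is done."""
        if self.step < len(QUESTIONS):
            return QUESTIONS[self.step][1]
        return self.summary()

    def answer(self, text):
        """Validate the reply, store it and advance to the next fixed step."""
        if self.step >= len(QUESTIONS):
            return self.summary()
        key, question = QUESTIONS[self.step]
        if text.strip() not in VALID_ANSWERS:
            # Guardrail: stay on the same step until the answer is valid.
            return "Please answer with a number from 0 to 3. " + question
        self.answers[key] = int(text.strip())
        self.step += 1
        return self.prompt()

    def summary(self):
        total = sum(self.answers.values())
        return f"Thanks for checking in. Your total score is {total} out of {3 * len(QUESTIONS)}."
```

Because every path through the script is known in advance, this kind of flow is easy to review with clinicians and maps directly onto the intents and pages of tools like DialogFlow.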

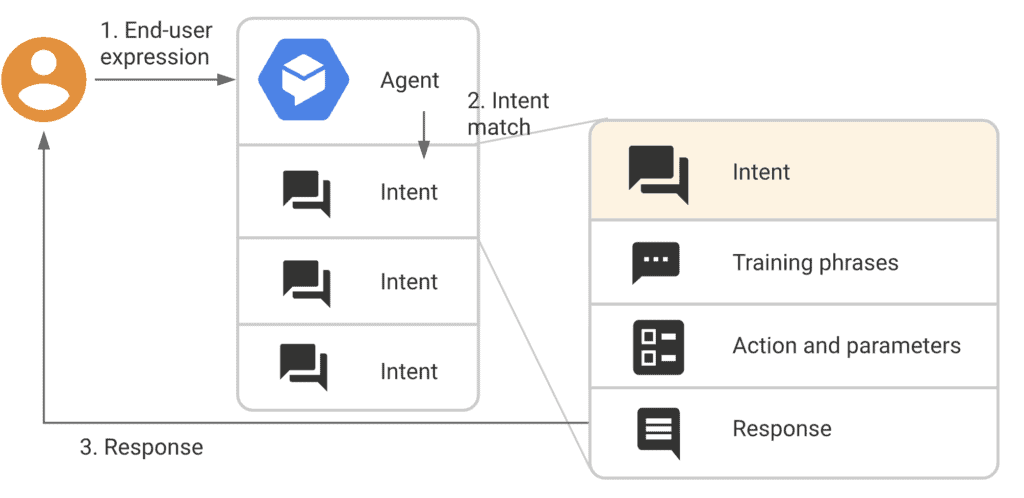

DialogFlow

DialogFlow, by Google, is one of the most popular cloud platforms for building dialogs. We can start development right away in DialogFlow’s web user interface (minimal coding or installation required). For more advanced scenarios, Google provides the DialogFlow API for building sophisticated conversational agents.

DialogFlow ES

In general terms, we map out how a potential conversation can unfold. DialogFlow then uses NLP to understand the user’s intent (and collect entities) and, based on that, selects an appropriate response.

Also Read: How to Develop a Natural Language Processing App

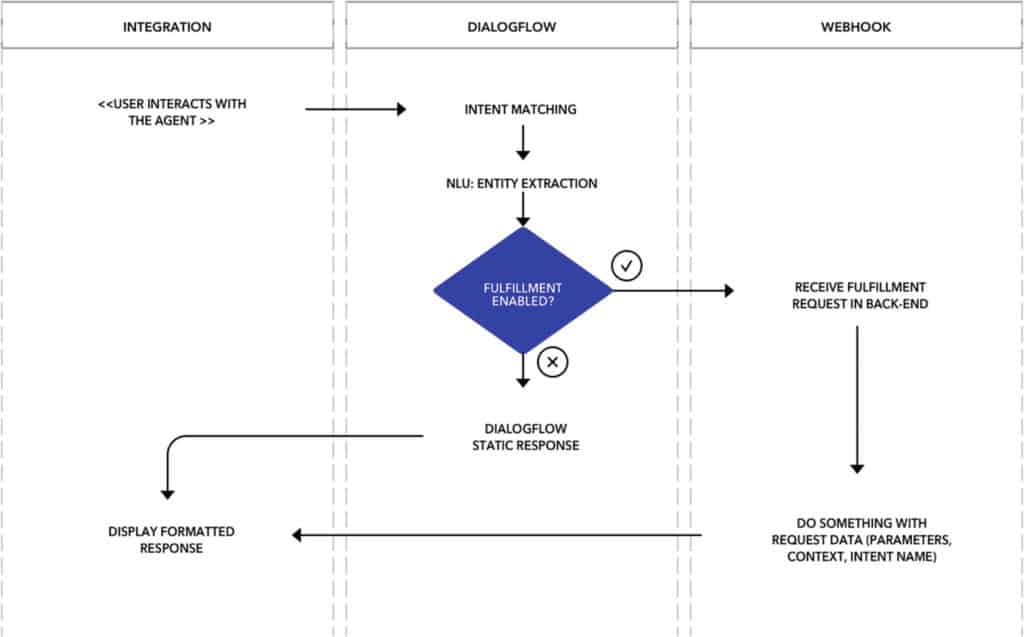

Replies are generally static (i.e., based on predefined options). However, responses can be made more dynamic by having DialogFlow send webhook requests to your own backend (fulfillment), which can look up data or call other APIs before replying. Here’s how DialogFlow works in the back end:

(image credit: Lee Boonstra, from “The Definitive Guide to Conversational AI with Dialogflow and Google Cloud”)
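When a webhook is enabled, DialogFlow ES sends your backend a JSON request describing the matched intent and its parameters, and your backend replies with the text to show. The sketch below uses only Python’s standard library. The `queryResult.intent.displayName`, `queryResult.parameters`, and `fulfillmentText` fields follow the ES v2 webhook format, while the `log_mood` intent and `mood` parameter are hypothetical; check Google’s webhook documentation for the full request and response schema.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def handle_webhook(body):
    """Build a dynamic reply from a DialogFlow ES webhook request body."""
    query = body.get("queryResult", {})
    intent = query.get("intent", {}).get("displayName", "")
    params = query.get("parameters", {})
    if intent == "log_mood":                 # hypothetical intent name
        mood = params.get("mood", "okay")    # hypothetical parameter
        # A real backend might save this to the patient's record here.
        text = f"Thanks for sharing. I've noted that you feel {mood} today."
    else:
        text = "Sorry, I didn't catch that."
    return {"fulfillmentText": text}

class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        payload = json.dumps(handle_webhook(body)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

def serve(port=8080):
    """Run the webhook locally; not called automatically."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

DialogFlow calls webhooks over HTTPS, so in practice this handler would sit behind a TLS-terminating proxy or run on a managed service such as Cloud Functions.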

Read more on conversational AI in healthcare.

I’d like to emphasize that the platform offers most of the necessary features out of the box, but the trade-off is that DialogFlow is a closed system that runs in Google’s cloud. In other words, we can’t operate it on on-premise servers.

Highlights of the off-the-shelf features include:

- support for speech-to-text and text-to-speech

- options to review and validate your chatbot solution

- integrations across multiple platforms

- built-in analytics

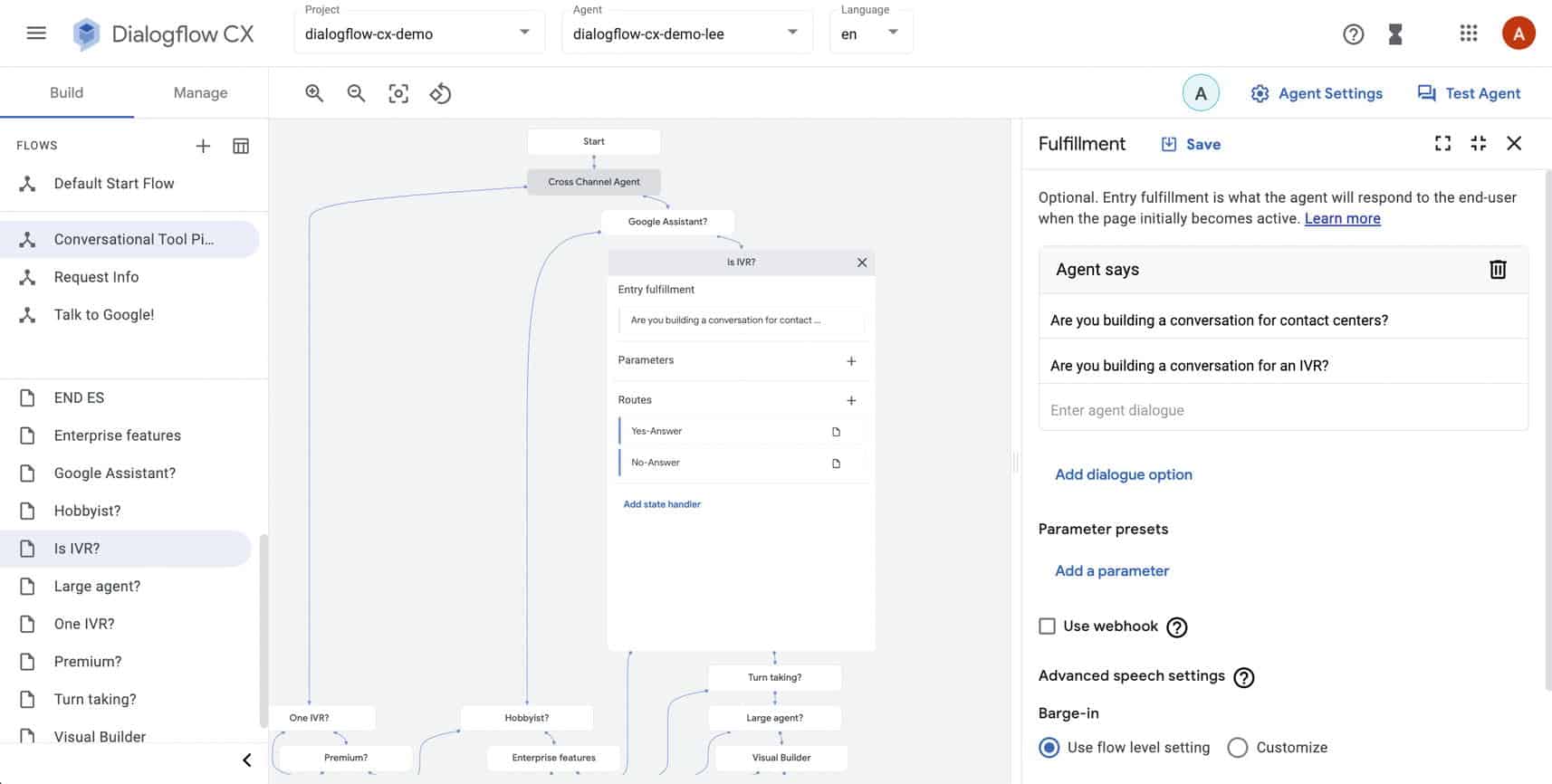

DialogFlow CX

Whereas DialogFlow ES is best reserved for simple chatbots (e.g., if you are a startup or small business), we recommend DialogFlow CX for more complex chatbots or enterprise applications. The core principles behind ES still apply to CX.

Here’s how DialogFlow CX helps you build a more advanced mental health chatbot:

- supports large and complex flows (hundreds of intents)

- multiple conversation branches

- repeatable dialogue, understanding the intent and context of long utterances

- support for teams collaborating on large implementations

Rasa

In general, Rasa is the choice for scenarios where customization is a priority. The trade-off is that working with its components takes more technical knowledge (e.g., Python). Rasa also requires more upfront setup (including installing multiple components) than DialogFlow, which needs minimal or no setup.

You should also keep in mind that Rasa comes with few or no user interface components out of the box.

Rasa has two main components: Rasa NLU and Rasa Core.

Rasa NLU

Rasa NLU works much like DialogFlow ES: it matches user intents and collects entities/parameters during conversations. Its natural language understanding improves as more conversation data is gathered and used for retraining.

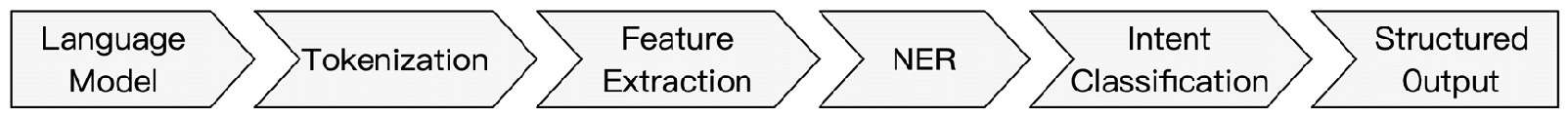

The NLU pipeline is designed with customization in mind: we can swap out components at different stages depending on the use case or application needs:

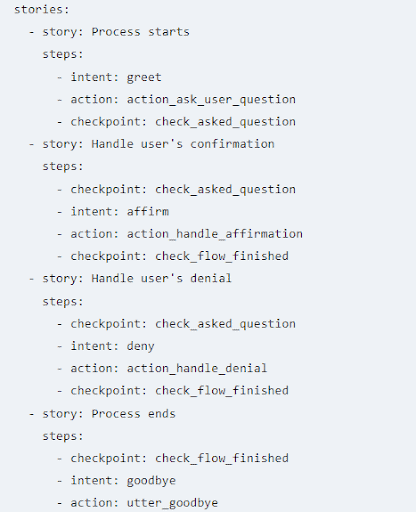
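The idea of a swappable pipeline can be illustrated without Rasa itself. The Python sketch below is not Rasa’s API (in Rasa, components are declared in a `config.yml` file); it is a hypothetical toy with the same shape: a tokenizer, a featurizer, and an intent classifier, each exposing a `process` method, so one stage can be replaced without touching the others.

```python
from collections import Counter

class WordTokenizer:
    """Splits text on whitespace."""
    def process(self, message):
        message["tokens"] = message["text"].lower().split()
        return message

class CharTrigramTokenizer:
    """Alternative tokenizer: overlapping 3-character chunks, more tolerant of typos."""
    def process(self, message):
        text = message["text"].lower()
        message["tokens"] = [text[i:i + 3] for i in range(max(len(text) - 2, 1))]
        return message

class BagOfWordsFeaturizer:
    """Counts how often each token occurs."""
    def process(self, message):
        message["features"] = Counter(message["tokens"])
        return message

class OverlapIntentClassifier:
    """Picks the intent whose training examples share the most features."""
    def train(self, featurized_examples):
        self.prototypes = {}
        for intent, feature_list in featurized_examples.items():
            total = Counter()
            for features in feature_list:
                total.update(features)
            self.prototypes[intent] = total

    def process(self, message):
        features = message["features"]
        def score(intent):
            proto = self.prototypes[intent]
            return sum(min(n, proto[f]) for f, n in features.items())
        message["intent"] = max(self.prototypes, key=score)
        return message

def run(components, text):
    """Pass a message through each pipeline stage in order."""
    message = {"text": text}
    for component in components:
        message = component.process(message)
    return message

def build_pipeline(tokenizer, examples):
    """Tokenizer -> featurizer -> classifier; the tokenizer stage is swappable."""
    front = [tokenizer, BagOfWordsFeaturizer()]
    classifier = OverlapIntentClassifier()
    classifier.train({intent: [run(front, t)["features"] for t in texts]
                      for intent, texts in examples.items()})
    return front + [classifier]

# Hypothetical training examples.
EXAMPLES = {
    "report_anxiety": ["i feel anxious", "i am so worried about work"],
    "report_sleep": ["i cannot sleep", "my sleep is terrible"],
}
```

Building the pipeline with `CharTrigramTokenizer` instead of `WordTokenizer` lets the same classifier map the misspelled “I cant slep” to `report_sleep`, because its character chunks still overlap with the training examples.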

Rasa Core

Rasa Core has a more sophisticated dialog management system than DialogFlow. Dialog in Rasa is driven explicitly by stories: example conversations that define the order of intents, actions, and collected parameters used to train the dialog model. It is also possible to weave multiple stories together using custom logic.

Since Rasa is a code-first framework, it gives you more flexibility in designing conversations than DialogFlow. Responses are generally static (predefined options) but can be made more dynamic with custom actions that call APIs.
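Stories can be thought of as example conversations that the dialog model learns from. The Python sketch below is loosely modeled on the idea behind Rasa’s MemoizationPolicy: it memorizes which bot action followed the last few events in each story. The stories, intents, and action names are hypothetical, and real Rasa stories are written in YAML and combined with machine-learned policies.

```python
# Hypothetical stories: user intents alternate with bot actions ("utter_"/"action_").
STORIES = [
    ["greet", "utter_greet", "report_anxiety", "utter_offer_breathing",
     "affirm", "action_start_breathing"],
    ["greet", "utter_greet", "report_anxiety", "utter_offer_breathing",
     "deny", "utter_ask_what_helps"],
    ["greet", "utter_greet", "report_sleep", "utter_ask_sleep_hours"],
]

def is_bot_action(event):
    return event.startswith(("utter_", "action_"))

def memorize(stories, max_history=3):
    """Map the last few events before every bot action to that action."""
    table = {}
    for story in stories:
        for i, event in enumerate(story):
            if is_bot_action(event):
                table[tuple(story[max(0, i - max_history):i])] = event
    return table

def predict(table, history, max_history=3, fallback="utter_default"):
    """Return the memorized next action, or a fallback for unseen situations."""
    return table.get(tuple(history[-max_history:]), fallback)
```

Anything not covered by a story falls back to `utter_default`, which is one reason teams keep adding stories as they collect real conversations.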

Here are some additional features you can expect from Rasa:

- more flexibility in importing training data across multiple formats

- open-source libraries

- hostable on your own servers

- Rasa Enterprise includes analytics

Also, note these features that the Rasa platform lacks:

- no hosting provided

- no out-of-box integrations

- no speech-to-text or text-to-speech (third-party libraries can be added)

Open-ended therapeutic bot building

We recommend creating an open-ended chatbot if you want conversations to feel more like chit-chat (i.e., an AI therapist providing open-ended, empathetic conversations for those going through a rough time).

It’s strongly recommended that you have some conversational data to fine-tune the model with, to minimize the risk that a transformer model (described above) will output inappropriate responses.

Fortunately, GPT-3 and BlenderBot work decently out of the box for creating open-ended dialog agents, but you get the most out of either model with fine-tuning, which is also what keeps inappropriate responses in check.
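Fine-tuning starts with turning real conversations into training examples. Here is a minimal Python sketch that converts therapist/client transcripts into the JSONL prompt/completion layout used by the original GPT-3 fine-tuning API. It assumes the transcripts are already de-identified and consented; the `Therapist:` cue and context length are illustrative choices, and newer OpenAI models expect a chat-style `messages` format instead, so check the provider’s current documentation.

```python
import json

def to_jsonl(transcripts, max_turns=4):
    """Convert transcripts into prompt/completion pairs, one JSON object per line.

    Each therapist turn becomes a completion; up to max_turns preceding turns
    become its prompt, which always ends with a "Therapist:" cue on a new line
    so the model learns where its reply should start.
    """
    lines = []
    for turns in transcripts:
        for i, (speaker, text) in enumerate(turns):
            if speaker != "Therapist" or i == 0:
                continue
            context = turns[max(0, i - max_turns):i]
            prompt = "\n".join(f"{s}: {t}" for s, t in context) + "\nTherapist:"
            # Leading space and trailing newline follow the legacy completion style.
            lines.append(json.dumps({"prompt": prompt, "completion": f" {text}\n"}))
    return "\n".join(lines)
```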

GPT-Based Models

GPT-3 is a transformer model trained on large text corpora from across the internet, including Wikipedia, collections of books, and web pages (for example, the curated WebText2 dataset and a filtered subset of Common Crawl, an archive containing petabytes of web data).

GPT-3 is considered a game-changer in NLP because a single model can handle a large number of NLP tasks instead of just one specific task:

- text classification

- text summarization

- text generation, etc.

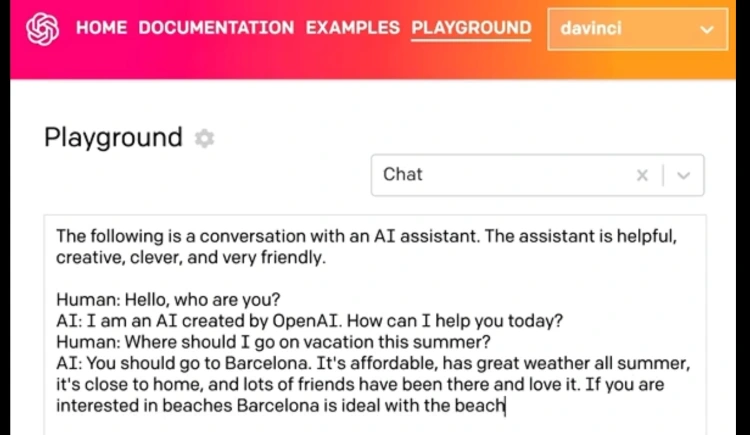

Fundamentally, OpenAI’s GPT-3 changes how we think about interacting with AI models. The OpenAI API drastically simplifies that interaction: instead of building and training models with complex machine learning frameworks, you send plain-text prompts to a hosted model.

The quality of the completion you receive is directly related to the prompt you provide. How you arrange and structure your words plays a significant role in the output, so learning to design effective prompts is crucial to unlocking GPT-3’s full potential.

GPT-3 shines best when you:

- Supply it with the appropriate prompt

- Fine-tune the model based on the specifics of the problem at hand
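Prompt design can be as simple as a persona description followed by the recent conversation. The sketch below is a hypothetical helper, not part of the OpenAI API: it assembles a prompt and drops the oldest turns when a budget is exceeded. Word counts are used as a rough stand-in for tokens, which is an assumption; real limits are measured in model tokens.

```python
# Hypothetical persona text; tune it with clinicians for a real product.
PERSONA = (
    "The following is a conversation with a supportive, empathetic assistant. "
    "The assistant listens, reflects feelings back, asks open questions, "
    "and never gives medical diagnoses.\n\n"
)

def build_prompt(history, user_message, max_words=150):
    """Persona + as many recent turns as fit in the budget + the new message.

    history is a list of (speaker, text) pairs, oldest first. The newest
    message is always kept, even if it alone exceeds the budget.
    """
    turns = history + [("User", user_message)]
    budget = max_words - len(PERSONA.split())
    kept = []
    for speaker, text in reversed(turns):
        cost = len(text.split()) + 1  # +1 for the "Speaker:" label
        if kept and cost > budget:
            break
        kept.append(f"{speaker}: {text}")
        budget -= cost
    return PERSONA + "\n".join(reversed(kept)) + "\nAssistant:"
```

The returned string would be sent as the prompt to a completion endpoint; keeping the persona fixed and trimming old turns keeps the bot’s tone consistent while staying within the model’s context limit.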

Related: Medical Chatbots: Use Cases in the Healthcare Industry, How To Build Your Own ChatGPT Chatbot, How is ChatGPT Used in Healthcare

Also, note some of the drawbacks of using GPT-3 for a mental health chatbot:

- AI bias

Since GPT-3 is trained on large amounts of internet text, there’s a risk of it using toxic language or saying harmful things that reflect its training data. For example, here’s a story where a GPT-3-based bot agreed that a fake patient should kill themselves. Fortunately, this risk can be reduced through careful fine-tuning (i.e., retraining the model on focused datasets so that it learns appropriate conversational behavior).

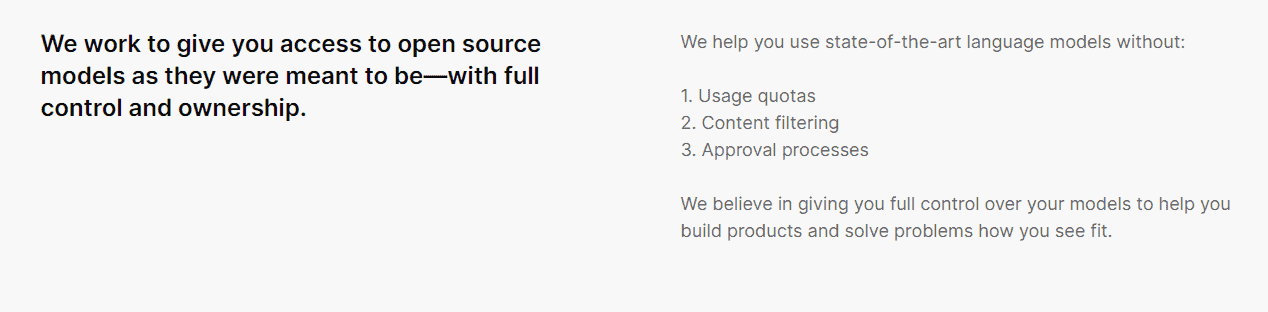

- OpenAI tends to restrict API access for higher-risk applications. You’ll need permission to use its GPT-3 technology in a live app.

If the above poses an issue, consider online platforms such as Forefront as GPT alternatives: they let you use their computational resources easily and with minimal restrictions.
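Fine-tuning lowers the risk of harmful replies but does not remove it, so it makes sense to wrap a generative mental health bot in a safety layer. Below is a minimal Python sketch of such a wrapper: it screens the user’s message before generation and the model’s reply after it, flagging the conversation for a human when either looks risky. The regex list and messages are hypothetical and deliberately incomplete; keyword matching is only a placeholder for a proper, clinically validated risk classifier.

```python
import re

# Hypothetical, deliberately incomplete patterns. A production system should use
# a clinically validated risk classifier plus human escalation paths.
RISK_PATTERNS = [
    r"\bkill (myself|yourself|themselves)\b",
    r"\bsuicid",
    r"\bend (it all|my life)\b",
    r"\bself[- ]harm",
]
CRISIS_REPLY = "It sounds like you may be in danger. I'm connecting you with a person who can help right now."
SAFE_FALLBACK = "I'm not able to help with that. Would you like me to connect you with a counselor?"

def is_risky(text):
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in RISK_PATTERNS)

def safe_reply(user_message, generate):
    """Wrap any text generator with input and output safety checks.

    Returns (reply, escalate); escalate=True means a human should take over.
    """
    if is_risky(user_message):
        return CRISIS_REPLY, True       # do not let the model improvise here
    reply = generate(user_message)
    if is_risky(reply):
        return SAFE_FALLBACK, True      # never pass a harmful completion through
    return reply, False
```

`generate` can be any function that returns model text, so the same wrapper works around GPT-3, BlenderBot, or a scripted fallback.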

Here’s a therapeutic chatbot prototype we put together at Topflight, using a GPT-3 model:

BlenderBot

BlenderBot is an open-domain chatbot by Facebook. It is a transformer model trained on large amounts of conversational data.

BlenderBot’s main selling point is that it was trained to show a personality, be empathetic, and use knowledge engagingly (each skill learned from a tailored dataset), and to blend all these qualities seamlessly.

Facebook aims to keep the system adaptable when a person’s tone shifts from joking to serious. Later versions of BlenderBot also store information gathered during conversations in long-term memory (trained on a dedicated multi-session chat dataset), and the model can search the internet and integrate that knowledge into conversations in real time.
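A toy version of cross-session memory helps show the idea. BlenderBot’s later versions use learned neural modules to decide what to remember and retrieve; the Python sketch below swaps that for simple word overlap, and the user ID and stored facts are hypothetical.

```python
import re
from collections import defaultdict

def _words(text):
    return set(re.findall(r"[a-z']+", text.lower()))

class LongTermMemory:
    """Toy per-user memory that persists facts across chat sessions."""

    def __init__(self):
        self.facts = defaultdict(list)

    def remember(self, user_id, fact):
        self.facts[user_id].append(fact)

    def recall(self, user_id, message, k=2):
        """Return up to k stored facts sharing the most words with the message."""
        words = _words(message)
        scored = []
        for fact in self.facts[user_id]:
            overlap = len(words & _words(fact))
            if overlap:
                scored.append((overlap, fact))
        # Stable sort: ties keep the order in which facts were stored.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:k]]
```

Recalled facts would be prepended to the model’s context at the start of a new session, so the bot can, for example, ask how the exams went.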

The most accessible approach for creating a BlenderBot-based agent is to use an online service like Forefront. Alternatively, BlenderBot APIs can be found here for offline fine-tuning. However, running these models demands substantial computational resources, so I’d highly recommend going with an online option.

Examples of Successful Mental Health Chatbots

Let’s review some successful mental chatbots out there.

Replika

Replika is an AI personal chatbot companion developed in 2017 by Eugenia Kuyda. The app’s purpose is to let users form an emotional connection with their personalized chatbot and develop a relationship unique to their individual needs.

How does Replika work?

Initially, Replika relied entirely on scripts written by its engineers. As the team gathered more conversation data from end users, it gradually came to rely more on a neural network (its own GPT-3 model). As a result, the system now uses a mix of scripted and AI-generated responses.
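A mix of scripted and AI responses can be as simple as a router that tries scripts first and falls back to the model. Replika’s actual routing logic is not described here, so the Python sketch below is only an illustration with hypothetical trigger patterns and replies.

```python
import re

# Hypothetical scripted flows, checked in order before the model is called.
SCRIPTS = [
    (re.compile(r"\b(breathing|breathe)\b", re.I),
     "Let's try it together: breathe in for 4 counts, then out for 6."),
    (re.compile(r"\b(good night|goodnight)\b", re.I),
     "Good night! I'll be here tomorrow."),
]

def hybrid_reply(message, generate):
    """Prefer a scripted reply when one matches; otherwise fall back to the model.

    Returns (reply, source) so analytics can track how often each path is used.
    """
    for pattern, reply in SCRIPTS:
        if pattern.search(message):
            return reply, "script"
    return generate(message), "model"
```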

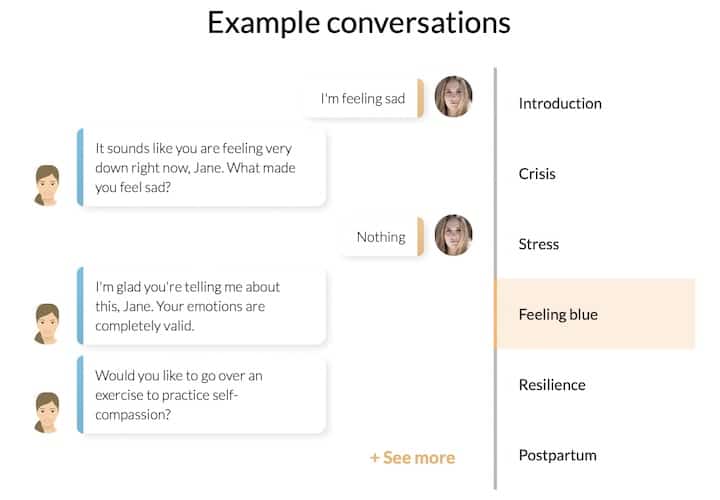

Woebot

Woebot was developed in 2017 at Stanford by a team of psychologists and AI experts. It uses NLP and CBT (cognitive behavioral therapy) to chat with users about how their lives have been going, in conversations lasting ten minutes at most.

It keeps track of users’ texts and emojis, so its responses become more specific over time (referencing previous conversations).

Early results showed that college students who interacted with Woebot significantly reduced their symptoms of depression within two weeks.

Woebot raised over $90 million in Series B funding as of June 2021 and received FDA Breakthrough Device Designation in May 2021 for a postpartum depression treatment.

Wysa

Wysa was developed in 2015 in India to facilitate counseling based on the principles of CBT and mindfulness. It is generally available to individuals under a freemium model and through employer benefit programs. The chatbot recommends specific exercises based on the user’s concerns.

It aims to address the need for mental health triage in both low-income countries (where mental health specialists are scarce) and high-income countries (where patients can wait 6 to 12 months before getting help).

Its target demographic is generally the “missing middle”: people for whom mindfulness apps are not enough but who do not need psychiatric or medical evaluation.

Wysa received FDA Breakthrough Device Designation in 2022. According to clinical trials, it is “just as comparable to in-person psychological counseling.”

X2AI

An AI-powered mental health chatbot written by psychologists. The bot provides self-help chats through text message exchanges, similar to texting with a friend or a coach.

Over 29 million people have paid access, according to the company’s website.

In 2016, the company started using its chatbots to provide mental health support to refugees of the Syrian war.

X2AI also targets veterans and patients with PTSD. Published research indicates that its chatbot has reduced symptoms of depression.

The company raised an undisclosed Series A round in 2019.

Also Read: How to build a chatbot

If you want to discuss your therapeutic chatbot idea with a company that prioritizes your business’s growth as well as product development, contact us here.

[This blog was originally published on 8/31/2022 and has been updated for more recent content]

Frequently Asked Questions

What is a mental health chatbot?

An AI assistant that isn’t meant to replace a human therapist but can provide initial support by gathering information from patients and replying to them.

What tools do you recommend for creating a chatbot?

DialogFlow, Rasa, GPT-3.

How long does it take to develop a mental health chatbot?

Around 3 months for the initial MVP version.