In this blog, we go over the different approaches to developing a mental health chatbot. Read on to learn how to create a therapeutic bot that’s actually helpful in the real world, grounded in best practices, safety guardrails, and the right tools at your disposal.

Top Takeaways:

- Both patients and therapists agree that mental health chatbots could help clients better manage their own mental health. At the same time, no chatbot can replace real-life human interactions with a healthcare professional.

- The two main approaches to developing a therapeutic chatbot are goal-oriented and open-ended models, with correspondingly guided and free-flow dialog patterns.

- Some of the best tools for building and maintaining a mental health chatbot are DialogFlow and Rasa.

Table of Contents:

- What is a Mental Health Chatbot?

- Why Build a Mental Health Chatbot in 2026?

- How Do Mental Health Bots Work?

- Safety and Ethical Considerations

- Regulatory Compliance Guide: HIPAA, FDA, and State Rules

- The Tech Stack: Generative AI & RAG in 2026

- What Tools Can We Use to Build a Mental Health Chatbot?

- Which Mental Health Chatbot Platform Should You Choose?

- 12-Week Mental Health Chatbot Development Plan

- Examples of Successful Mental Health Chatbots

- Monetization Models for 2026

- What Does a Mental Health Chatbot Cost to Build?

What is a Mental Health Chatbot?

Chatbots are computer programs that use AI to simulate human conversation. They can hold natural conversations with people by analyzing and responding to their input, using contextual awareness to understand the user’s message and provide an appropriate response.

Mental health chatbots provide self-assessment tools and guidance to help people manage depression, stress, anxiety, sleep issues, and similar concerns.

The software that delivers a chatbot to users can take many forms:

- custom mental health mobile app

- instant messenger

- avatar-based online experience

The form you choose for a mental health bot will mostly depend on how you envision your patients interacting with the program.

Why Build a Mental Health Chatbot in 2026? (Trends & ROI)

If you run (or are building inside) a therapist practice, a mental health chatbot isn’t about replacing face-to-face counseling. It’s about removing friction around care access, routing the right people to the right level of support, and keeping your clinicians focused on work that actually requires a human.

The benefits of mental health chatbots show up in the unglamorous places that make or break a practice: easier access to psychological health services, smoother triage, better continuity between sessions, and clearer ROI across operations and outcomes.

Below are the three shifts making therapeutic chatbots and mental health bots a practical ROI play in 2026.

The Shift to “Hybrid Care” Models (AI + Human Handoff)

The winning model in private practice isn’t “AI therapist.” It’s hybrid care: a therapeutic chatbot handles the repetitive front-door work, and your team takes over when the situation needs clinical judgment.

Where this pays off fast:

- Accessibility without adding staff. A bot can provide instant information 24/7, which helps clients who work unconventional shifts, can’t find open appointment slots, or face geographical barriers (especially where face-to-face counseling is limited).

For many clients, a chatbot is the first frictionless entry point into psychological health services—especially when schedules, distance, or stigma get in the way. Done right, that front-door support improves efficiency for your team because fewer appointments start with basic admin and more begin with a clean clinical summary.

- Lower stigma, more disclosure. Clients often feel more comfortable starting with a chatbot because of perceived anonymity, and may be more willing to disclose sensitive information early (you’ll see this pattern show up across more than one recent study).

Practically speaking, when clients use chatbots to get started, they arrive at the first session less “cold,” with fewer unanswered basics and more clarity about what they want help with.

- Cleaner intake and better routing. A bot can run self-assessment prompts, collect basics, and summarize what matters, so your practitioner isn’t burning 15 minutes on admin before therapy even starts.

The key is the handoff: the bot should collect and summarize, then route to a human therapist when it hits your “needs a clinician” threshold.

24/7 Crisis Triage & Suicide Prevention Protocols

If your chatbot is allowed anywhere near mental health, you need safety protocols that are boring, explicit, and consistent (that’s a compliment). In practice, this means crisis triage is not “the bot says something empathetic.” It’s a workflow.

What to build for a private practice context:

- Early risk detection + deterministic escalation. Use a combination of keyword/intent patterns and simple severity scoring to spot high-risk conversations and trigger an escalation path immediately, with no debate loops.

- Human handoff that actually works at 2 AM. Define your after-hours routing (on-call, answering service, warm transfer to appropriate resources) so the bot doesn’t become your accidental night shift.

- Documentation and auditability. Your practice needs a clear record of what the system did, when, and why, especially if the client later disputes guidance. (This is where “health data” handling and logging discipline matter.)

For US practices, this is also where jurisdiction kicks in: your protocols and disclaimers should match your practice’s jurisdictional obligations, not generic internet advice.

Solving the Therapist Shortage (Provider Burnout)

In 2026, the therapist shortage problem shows up in your calendar, not in your mission statement: waitlists, late cancellations, and clinicians spending energy on low-value admin.

In other words, you’re not trying to turn software into mental health advisors—you’re giving clients a reliable first step and letting clinicians stay clinicians. As more people use chatbots for simple guidance and next-step routing, your practice can reserve human time for the moments where it matters most.

Mental health chatbots can reduce load in very specific ways:

- Pre-session and between-session support. A bot can deliver lightweight check-ins, reminders, and structured exercises (often providing positive emotional support), so the next session starts with signal, not recap.

- Protect clinician time for people in critical condition. If your clinicians are spending prime time answering routine questions, you’re under-using your most expensive resource. A bot can handle the FAQ layer and triage.

- Operational cost savings. For clients: reduced travel expenses and (yes, still) telephone charges in some workflows. For your practice: fewer wasted slots, fewer repetitive admin minutes, and better utilization.

If you want numbers, you’ll typically see ROI driven by a mix of: reduced admin time per client, improved engagement/retention, fewer no-shows, and increased throughput without extending clinical hours.

What Is the Evidence to Support AI Efficacy for Mental Health Overall?

The evidence story is still “promising, not magical.” The best-supported outcomes tend to be modest but real: structured check-ins, coping prompts, and consistent follow-through that can positively affect patients’ mental health when paired with clear escalation and human oversight.

In the short term, chatbots can help mitigate the psychological harm of isolation, support disclosure of concerns, and deliver structured coping techniques. Many users report a positive experience, and surveys commonly find that healthcare professionals view chatbots as potentially helpful in mental care (see this recent survey).

How Do Mental Health Bots Work?

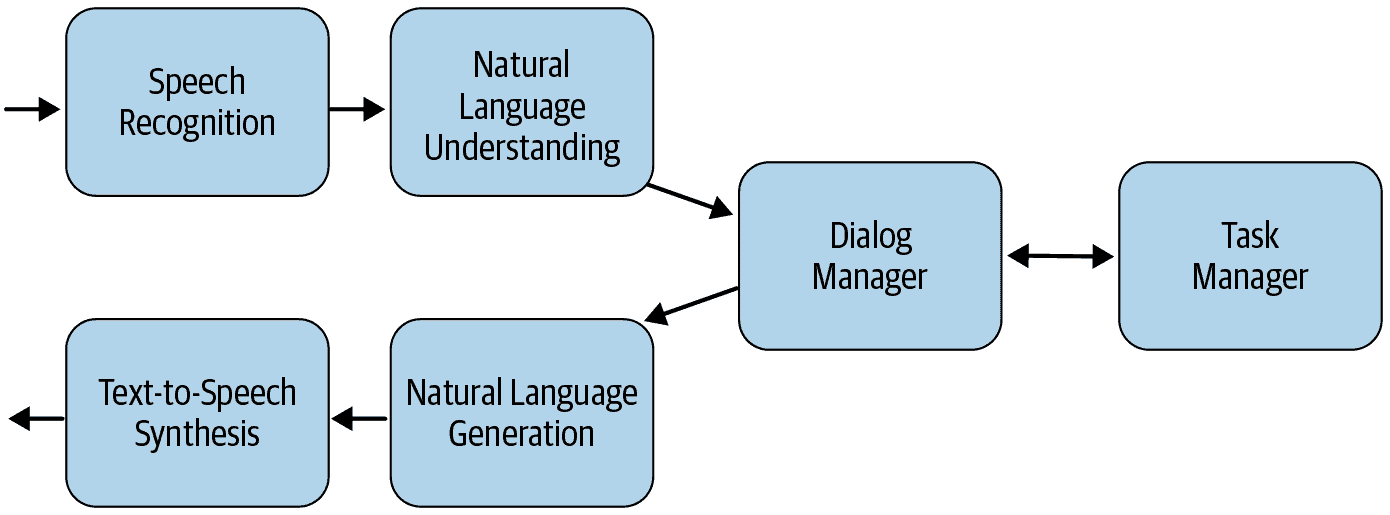

Here is a high-level overview of a simple chatbot architecture — the pipeline for a dialog system:

- Speech Recognition

Depending on how the chatbot is set up, we use off-the-shelf speech recognition models to transcribe the user’s spoken input into text. For text-based chatbots, this step is skipped.

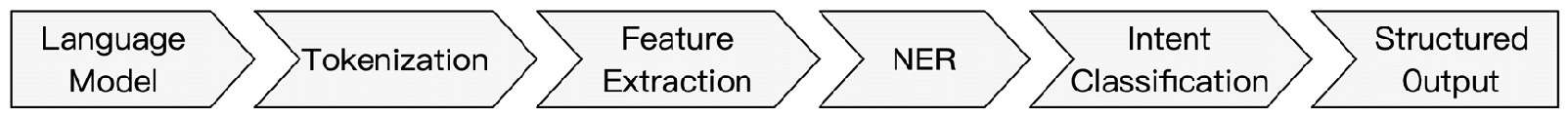

- Natural Language Understanding

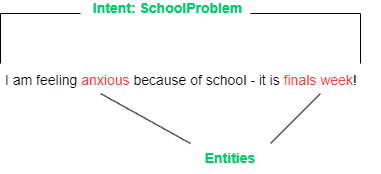

This step aims to process the user input using natural language processing. In a nutshell, the system tries to grasp the user’s intent and emotional state (sentiment analysis). The software also tries to extract various entities (parameters / attributes) relating to the user’s input.

- Dialog and Task Manager

This module controls the flow of the dialogue based on the information stored throughout the conversation (the dialogue state). In other words, the dialogue manager applies a strategy (or set of rules) to decide the next step, based on the user’s input and the overall context.

- Natural Language Generation

Once the dialog manager decides how to respond to the user, the natural language generation module turns that decision into a human-friendly response. Responses can be predefined templates or generated in a more free-form way.

- Text-to-Speech Synthesis

As an optional step, the speech synthesis module converts the text back to speech so the user can hear it.
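To make the pipeline concrete, here is a minimal text-only sketch in Python (speech recognition and synthesis are skipped). It is a toy rather than a production design: the intent patterns, the tiny sentiment lexicon, the `hours` entity, and the response templates are all hypothetical, and a real system would typically use trained models for the NLU stage.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intent patterns; a real NLU stage would use a trained classifier.
INTENT_PATTERNS = {
    "greet": re.compile(r"\b(hi|hello|hey)\b", re.I),
    "sleep_issue": re.compile(r"\b(sleep|insomnia)\b", re.I),
    "stress": re.compile(r"\b(stressed|overwhelmed|anxious)\b", re.I),
}
NEGATIVE_WORDS = {"bad", "awful", "terrible", "overwhelmed", "anxious"}

@dataclass
class NLUResult:
    intent: str
    sentiment: float  # from -1.0 (all negative words) to 0.0 (neutral) in this toy lexicon
    entities: dict

@dataclass
class DialogState:
    slots: dict = field(default_factory=dict)
    last_action: str = ""

def understand(text: str) -> NLUResult:
    """NLU: match an intent, score sentiment, and extract an 'hours' entity."""
    intent = next((name for name, pat in INTENT_PATTERNS.items() if pat.search(text)), "fallback")
    words = re.findall(r"[a-z']+", text.lower())
    sentiment = -sum(w in NEGATIVE_WORDS for w in words) / max(len(words), 1)
    entities = {}
    match = re.search(r"(\d+)\s*hours?", text, re.I)
    if match:
        entities["hours"] = int(match.group(1))
    return NLUResult(intent, sentiment, entities)

def decide(nlu: NLUResult, state: DialogState) -> str:
    """Dialog manager: update the stored state, then pick the next action by rule."""
    state.slots.update(nlu.entities)
    if nlu.intent == "sleep_issue" or state.last_action == "ask_sleep_hours":
        action = "offer_sleep_tips" if "hours" in state.slots else "ask_sleep_hours"
    elif nlu.intent == "stress" or nlu.sentiment < -0.2:
        action = "offer_breathing_exercise"
    elif nlu.intent == "greet":
        action = "greet"
    else:
        action = "clarify"
    state.last_action = action
    return action

# NLG: predefined templates filled from the dialog state.
TEMPLATES = {
    "greet": "Hi, I'm an automated assistant. How are you feeling today?",
    "ask_sleep_hours": "Roughly how many hours did you sleep last night?",
    "offer_sleep_tips": "Thanks. {hours} hours is useful to know. Want a short wind-down routine?",
    "offer_breathing_exercise": "That sounds hard. Would a two-minute breathing exercise help?",
    "clarify": "I'm not sure I understood. Could you tell me a bit more?",
}

def generate(action: str, state: DialogState) -> str:
    return TEMPLATES[action].format(**state.slots)
```

Feeding `hello`, `I can't sleep lately`, and `maybe 5 hours` through `generate(decide(understand(msg), state), state)` with one shared `DialogState` walks through greet, ask_sleep_hours, and offer_sleep_tips. The third turn only works because the dialog manager remembered its previous question, which is exactly the job described for that module.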

Safety and Ethical Considerations

If your bot is allowed anywhere near mental health, safety isn’t a “nice to have.” It’s the product. The rest is UI.

You’re building a system that will sometimes meet users on their worst day. That means you need two things at all times: (1) early detection when the conversation is becoming unsafe, and (2) a deterministic way to get a human (or emergency resources) involved—without relying on the bot to “say the right thing.”

Safety Protocols & Crisis Escalation Logic

A safety-first mental health chatbot isn’t “an LLM with a disclaimer.” It’s a layered system where the model is one component—and it loses control the moment risk goes up.

A simple reference architecture looks like this:

1) Intake → Risk Signals

– Run lightweight detection on every turn: keyword hits, patterns (intent + phrasing), and simple sentiment/urgency signals.

– Goal: catch indirect distress and “coded” language, not just obvious phrases.

2) Policy Gate (Deterministic Overrides)

– Before any response is generated, route the message through a rules/policy layer.

– If high-risk conditions are met, the bot does not “think harder.” It switches modes.

3) Response Mode Selection

– Normal mode: the LLM can respond, but only within allowed boundaries.

– Restricted mode: the LLM is constrained to a narrow set of safe responses (grounded, non-clinical, no diagnosis).

– Escalation mode: the LLM is bypassed and you show a hard-coded crisis resources card and a clear next step.

4) Human-in-the-Loop Handoff

– For practice-owned bots: escalation may route to a live therapist, an on-call workflow, or a warm transfer to your practice’s support channel.

– For public-facing bots: escalation often means a structured handoff (schedule/callback flow) plus emergency resources when appropriate.

– Design principle: no bargaining, no “are you sure?” loops, no delays.

5) Guardrails Against Unsafe Output

– Add an “anti-hallucination” layer: validators and content filters that block disallowed categories (e.g., diagnosis, medication recommendations, or content that reinforces delusional beliefs).

– This can be implemented via vendor guardrails frameworks (e.g., NVIDIA NeMo Guardrails) or application-level validators (e.g., LangChain-style output checks), but the idea is the same: enforce policy at runtime, not by hope.

6) Logging + Review Hooks

– Keep session transcripts for clinical review only if you can secure them: strong access controls, minimal retention, strict permissions, and audit trails for access.

– Log every escalation event (what triggered it, what happened next) so you can run incident triage and improve the rules.
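Steps 1 through 3, plus the escalation logging from step 6, can be sketched as a deterministic router that runs before any model call. This is a minimal illustration under stated assumptions: the regex lists, scores, and thresholds are hypothetical, `llm(system_prompt, user_text)` is a placeholder for whatever model client you use, and the crisis text is a US-only placeholder. Real signal lists must be clinician-reviewed and tested against indirect language.

```python
import re
from datetime import datetime, timezone
from enum import Enum
from typing import Callable, List, Tuple

class Mode(Enum):
    NORMAL = "normal"
    RESTRICTED = "restricted"
    ESCALATION = "escalation"

# Step 1: hypothetical, deliberately incomplete signal lists.
HIGH_RISK = [re.compile(p, re.I) for p in (r"\bkill myself\b", r"\bend (it|my life)\b", r"\bno reason to live\b")]
ELEVATED = [re.compile(p, re.I) for p in (r"\bhopeless\b", r"\bcan't go on\b", r"\bmedication\b")]

CRISIS_CARD = ("If you're in the U.S., call or text 988 to reach the 988 Suicide & Crisis Lifeline. "
               "If you're in immediate danger, call 911.")  # US-only placeholder text
RESTRICTED_PROMPT = ("Respond briefly and supportively. Do not diagnose, do not discuss medication, "
                     "and offer to connect the user with a clinician.")
NORMAL_PROMPT = "You are a supportive wellness assistant, not a therapist."

def risk_score(text: str) -> Tuple[int, List[str]]:
    """Score = 10 per high-risk match + 3 per elevated match; also return the matched patterns."""
    high = [p.pattern for p in HIGH_RISK if p.search(text)]
    elevated = [p.pattern for p in ELEVATED if p.search(text)]
    return 10 * len(high) + 3 * len(elevated), high + elevated

def select_mode(score: int, escalate_at: int = 10, restrict_at: int = 3) -> Mode:
    """Step 2: deterministic policy gate with hypothetical thresholds."""
    if score >= escalate_at:
        return Mode.ESCALATION
    if score >= restrict_at:
        return Mode.RESTRICTED
    return Mode.NORMAL

def respond(text: str, llm: Callable[[str, str], str], audit_log: List[dict]) -> str:
    score, triggers = risk_score(text)
    mode = select_mode(score)
    if mode is not Mode.NORMAL:
        # Step 6: record what triggered the mode change, and when.
        audit_log.append({"ts": datetime.now(timezone.utc).isoformat(),
                          "mode": mode.value, "score": score, "triggers": triggers})
    if mode is Mode.ESCALATION:
        return CRISIS_CARD  # Step 3: the LLM is bypassed entirely.
    prompt = RESTRICTED_PROMPT if mode is Mode.RESTRICTED else NORMAL_PROMPT
    return llm(prompt, text)
```

In a real deployment the escalation branch would also kick off the human handoff from step 4, and the model’s output in the other two modes would still pass through the step 5 guardrails before reaching the user.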

Critical Safety Features

- Crisis detection that’s more than a keyword list. Start with keywords, yes—but back it with patterns (intent + phrasing) and simple sentiment/urgency signals so you’re not blind to “coded” language or indirect distress.

- Automatic escalation to human support. Define triggers and route users to a real person (or a real-time support workflow) fast. No bargaining, no “are you sure?” loops, no delays.

- Disclaimers that users actually read. Put limitations where decisions happen (first use, and before sensitive flows), in plain language: what it can do, what it can’t, and what to do in emergencies.

- Crisis hotline integration. Make emergency options one tap away in any “unsafe” path, not buried in a footer. If you operate across regions, don’t hardcode a single number—use location-aware routing or a clear regional selector.

- Session transcripts for clinical review (with strong access controls). Logs are how you improve safety, catch failure modes, and prove oversight. But treat transcripts as sensitive by default: minimal retention, strict permissions, and audit trails for access.
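For the regional routing point, one approach is a lookup that falls back to a region selector instead of silently defaulting to one country’s number. This is a minimal sketch: the table is a hypothetical starting point (the two entries reflect the U.S. 988 Lifeline and the Samaritans line in the UK as we understand them), and every entry should be verified and kept current by your team.

```python
from typing import Optional

# Hypothetical table keyed by ISO 3166-1 alpha-2 code; verify every entry before use.
CRISIS_RESOURCES = {
    "US": "Call or text 988 (988 Suicide & Crisis Lifeline). In immediate danger, call 911.",
    "GB": "Call Samaritans free on 116 123. In immediate danger, call 999.",
}
REGION_SELECTOR_TEXT = ("Tell us which country you're in so we can show the right crisis line. "
                        "If you're in immediate danger, contact your local emergency number now.")

def crisis_resources(region: Optional[str]) -> dict:
    """Return crisis text for a known region; otherwise ask the user to pick one."""
    text = CRISIS_RESOURCES.get((region or "").strip().upper())
    if text is None:
        # Unknown or missing region: never guess, show the selector instead.
        return {"text": REGION_SELECTOR_TEXT, "show_region_selector": True}
    return {"text": text, "show_region_selector": False}
```

The design choice here is to fail toward asking rather than guessing: an unknown region produces a selector plus a generic emergency instruction, never another country’s hotline.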

Ethical Guidelines

- Never diagnose or prescribe. Your bot can reflect, validate, and suggest next steps. It cannot label conditions, recommend meds, or position itself as clinical authority.

- Always identify the bot clearly. No “I’m your therapist” cosplay. Users should understand they’re interacting with software—every time.

- Privacy by design (HIPAA where applicable). If there’s any chance you’re handling PHI, architect for HIPAA-grade controls from day one: least-privilege access, encryption, auditing, and vendor agreements where required.

- Informed consent isn’t a checkbox. Make consent explicit and specific: what data you collect, how it’s used, who can see it, and how to revoke access or delete data.

- Regular clinical oversight. A mental health chatbot without ongoing review is basically “ship and pray.” Set a cadence for clinician review, incident triage, and content updates—especially after edge-case failures.
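As one illustration of “never diagnose or prescribe” and “always identify the bot,” here is a minimal output validator of the kind described in step 5 of the reference architecture. It is a sketch, not a complete filter: the regex patterns, the fallback wording, and the first-turn disclosure rule are hypothetical, and keyword rules alone will miss paraphrases, so they would normally sit alongside a classifier and clinician-reviewed test cases.

```python
import re
from dataclasses import dataclass, field
from typing import List

AI_DISCLOSURE = "I'm an automated assistant, not a therapist."
FALLBACK = ("I can't help with that, but I can share general resources "
            "or help you book time with a clinician.")

# Hypothetical patterns for disallowed output categories.
BLOCKED = {
    "diagnosis": re.compile(r"\byou (have|might have|are suffering from)\b.*\b(depression|ptsd|bipolar|adhd)\b", re.I),
    "medication": re.compile(r"\b(take|increase|stop taking)\b.*\b(\d+\s?mg|sertraline|fluoxetine|medication)\b", re.I),
    "impersonation": re.compile(r"\bI(?:'m| am) (?:a|your) (?:therapist|psychologist|doctor)\b", re.I),
}

@dataclass
class ValidationResult:
    text: str
    violations: List[str] = field(default_factory=list)

def validate_output(draft: str, first_turn: bool) -> ValidationResult:
    """Replace drafts that diagnose, prescribe, or impersonate a clinician; disclose on the first turn."""
    violations = [name for name, pattern in BLOCKED.items() if pattern.search(draft)]
    text = FALLBACK if violations else draft
    if first_turn:
        text = f"{AI_DISCLOSURE} {text}"
    return ValidationResult(text, violations)
```

Returning the list of violations (not just the cleaned text) matters for oversight: those names are what you would log and review when deciding whether a rule is too loose or too strict.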

A useful gut-check: if you can’t explain your escalation rules, your data boundaries, and your review process in one page, you’re not “being thorough.” You’re hiding the sharp edges.

Regulatory Compliance Guide: HIPAA, FDA, and State Rules

A mental health chatbot can be “just a wellness app”… right up until you store something that looks like PHI for a covered entity, or you market it like it treats a condition. From there, compliance stops being a slide in a pitch deck and becomes an engineering constraint (data flows, logs, vendor contracts, incident response).

The fastest way to get oriented is to classify your bot across three axes:

- Who you sell to (consumer vs provider/payer/employer),

- What data you touch (PHI / “consumer health data” / both),

- What you claim it does (wellness support vs clinical treatment/diagnosis).

Once you’re honest on those three, the checklist below gets a lot less theoretical.

Mental Health Chatbot Compliance Checklist

HIPAA Requirements (when you handle ePHI for a covered entity)

Security controls (don’t hand-wave these):

- ☐ Access control: unique user IDs, role-based access, emergency access procedure, automatic session timeout

- ☐ Audit controls: log access and activity in systems that contain/use ePHI; make logs tamper-evident and reviewable

- ☐ Integrity controls: protect ePHI from improper alteration/destruction (checksums, write-once logs where appropriate)

- ☐ Authentication: “person or entity” authentication (MFA for admins; strong auth for users where risk warrants)

- ☐ Transmission security: encryption in transit + controls against unauthorized network access

- ☐ Encryption at rest with key management (KMS/HSM where possible), plus backup encryption
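The “tamper-evident” and “write-once where appropriate” items can be approximated at the application layer with a hash chain. This is a minimal standard-library sketch; the record fields and the actor/resource names in the example are hypothetical, and it complements (rather than replaces) your cloud provider’s immutable logging and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List, Optional

GENESIS = "0" * 64
FIELDS = ("ts", "actor", "action", "resource")

def _digest(prev_hash: str, entry: dict) -> str:
    # Canonical JSON so the same entry always hashes the same way.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained access log.

    Each record's hash covers its own fields plus the previous record's hash, so
    editing, reordering, or removing any record except the newest breaks verify().
    Detecting truncation of the newest records requires storing the latest hash
    somewhere the application cannot rewrite (e.g., write-once storage).
    """

    def __init__(self) -> None:
        self.records: List[dict] = []

    def append(self, actor: str, action: str, resource: str, ts: Optional[str] = None) -> dict:
        entry = {"ts": ts or datetime.now(timezone.utc).isoformat(),
                 "actor": actor, "action": action, "resource": resource}
        prev = self.records[-1]["hash"] if self.records else GENESIS
        record = {**entry, "prev": prev, "hash": _digest(prev, entry)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        prev = GENESIS
        for record in self.records:
            entry = {k: record[k] for k in FIELDS}
            if record["prev"] != prev or record["hash"] != _digest(prev, entry):
                return False
            prev = record["hash"]
        return True
```

A typical use is one `append` per view or export of a transcript, with `verify()` run by a scheduled job that alerts on failure.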

Process + governance (the part teams “forget” until a buyer asks):

- ☐ BAAs with every vendor that touches ePHI (cloud, analytics, support tools, LLM/API providers if applicable)

- ☐ Risk analysis + risk management plan (HIPAA Security Rule expects a risk-based approach; “flexibility of approach” doesn’t mean “no controls”)

- ☐ Minimum necessary + data minimization: collect only what the flow truly needs; avoid free-text when structured inputs will do

- ☐ Retention + deletion policy (and technical enforcement): transcripts, metadata, backups, and exports

- ☐ Breach/incident response runbook (and an on-call owner), plus regular tabletop drills

Practical tip: if you keep transcripts for clinical review, treat them like radioactive material—tight access, short retention, explicit purpose, and audit trails for every view/export.
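“Technical enforcement” of retention usually means a scheduled job that finds expired records and deletes them everywhere they live. Here is a minimal sketch of the selection step; the record kinds, retention windows, and legal-hold flag are hypothetical, and the actual deletion (including backups and exports) is left to your storage layer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List

# Hypothetical retention windows; set real values with compliance and clinical leads.
RETENTION = {
    "transcript": timedelta(days=30),
    "escalation_event": timedelta(days=365),
    "analytics_event": timedelta(days=90),
}

@dataclass
class Record:
    record_id: str
    kind: str
    created_at: datetime
    legal_hold: bool = False

def due_for_deletion(records: Iterable[Record], now: datetime) -> List[str]:
    """Return IDs past their retention window, skipping records under legal hold."""
    due = []
    for record in records:
        window = RETENTION.get(record.kind)
        if window is None:
            # An unknown data type is a policy gap: fail loudly instead of keeping it forever.
            raise ValueError(f"no retention rule for kind={record.kind!r}")
        if not record.legal_hold and now - record.created_at > window:
            due.append(record.record_id)
    return due
```

Raising on an unknown `kind` is deliberate: new data types (a voice note, a new analytics event) should not slip into storage without someone deciding how long they live.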

FDA Considerations (when claims or functionality drift toward “medical device”)

FDA scrutiny is driven by intended use and claims. If you imply your chatbot diagnoses, treats, or prevents a disease (or functions like a digital therapeutic), you may be in SaMD territory. The FDA uses the IMDRF SaMD framing: software intended for a medical purpose that performs that purpose without being part of a hardware medical device.

Low-regret guardrails (even if you’re trying to stay “wellness”):

- ☐ No diagnostic or prescriptive claims in marketing, onboarding, or in-chat copy

- ☐ Clear limitations (what it can do vs cannot do) where users make decisions

- ☐ Escalation pathways for acute risk (and evidence you tested them)

If you are building something that may be regulated:

- ☐ Regulatory positioning memo: intended use statement + “why not a device / why a device” rationale (you’ll need this for partners and due diligence)

- ☐ Clinical evaluation plan proportional to risk (IMDRF provides a clinical evaluation framework for SaMD)

- ☐ Quality management system (design controls, requirements traceability, verification/validation, change control, supplier management)

- ☐ Post-market monitoring: complaint handling, safety signal review, and a process for field corrections

- ☐ Adverse event reporting pathway (who reviews, how fast, what gets escalated)

If you’re aiming for accelerated pathways later, understand that programs like Breakthrough Devices exist—but they’re not a substitute for evidence, QMS discipline, or a real risk-management story.

State Regulations and “Not-HIPAA” Privacy Traps (where teams get surprised)

Even if HIPAA doesn’t apply (consumer app, no covered entity relationship), you can still be regulated via state health privacy laws and the FTC.

- ☐ Telehealth / cross-state practice rules if a clinician is involved (many states expect licensure where the patient is located; validate state-by-state)

- ☐ Mandatory reporting protocols (varies by jurisdiction): define when/how escalation happens and who is responsible

- ☐ Age gating + minors handling: if directed to children under 13—or you knowingly collect data from them—COPPA triggers parental-consent obligations

- ☐ Consumer health data laws: e.g., Washington’s My Health My Data Act restricts collection/sharing/sale of “consumer health data” and requires specific consent mechanics

- ☐ FTC Health Breach Notification Rule: the FTC has explicitly pushed applicability to health apps and similar tech (important if you’re outside HIPAA but still handling health data)

- ☐ 42 CFR Part 2 awareness if you integrate with substance use disorder treatment records from Part 2 programs (stricter confidentiality rules can apply)
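For the age-gating item, the routing logic itself is simple; the hard parts are verifying age and obtaining valid consent. A minimal sketch, assuming a self-reported birth date and hypothetical route names (`parental_consent_flow`, `minor_experience`, `standard_onboarding`); what the consent flow must collect is a legal question this code does not answer.

```python
from datetime import date

COPPA_AGE = 13  # COPPA obligations apply to children under 13

def age_on(birth_date: date, today: date) -> int:
    """Whole years of age on `today`."""
    years = today.year - birth_date.year
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1  # birthday hasn't happened yet this year
    return years

def onboarding_route(birth_date: date, today: date, verified_parental_consent: bool) -> str:
    """Pick an onboarding path; route names are hypothetical."""
    age = age_on(birth_date, today)
    if age < COPPA_AGE and not verified_parental_consent:
        return "parental_consent_flow"
    if age < 18:
        return "minor_experience"  # e.g., stricter safety defaults for all minors
    return "standard_onboarding"
```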

Compliance: Beyond Just HIPAA (AI-Era Transparency + Enterprise Trust)

Even if you’re building for the U.S. only, compliance in 2026 is no longer “HIPAA + a privacy policy.” Two forces are raising the bar: (1) AI-specific transparency/safety rules at the state level, and (2) enterprise procurement requirements that function like regulation (because employers won’t buy without them).

EU AI Act (High-Risk Categorization): If You Plan European Markets

If there’s any chance you’ll expand into Europe, the EU AI Act can change your obligations materially. High-risk systems trigger a heavier compliance package (risk management, governance, documentation, and oversight).

What this means in practice:

- Treat “high-risk or not” as a product decision you document early (and keep updated).

- Plan for a technical documentation burden. The EU’s own AI Act service desk highlights that high-risk systems must have technical documentation before market release, kept up to date, and aligned to Annex requirements.

- Expect conformity assessment thinking: risk management, data governance, transparency, human oversight, accuracy/robustness/cybersecurity, and record-keeping are the recurring themes.

Practical tip: If you’re building a mental health chatbot that profiles a user’s mental state, routes them, or influences decisions about care, assume you’ll be asked to show your risk controls and documentation—not just your model choice.

Bot Disclosure Laws (California): “Don’t Pretend You’re Human” Is Becoming a Requirement

California has started targeting “companion chatbot” platforms with AI-specific obligations. SB 243 (signed Oct 13, 2025; effective Jan 1, 2026) is designed to reduce risk of harmful interactions, especially for minors, and it includes disclosure/guardrail expectations and operational requirements (not just marketing language).

How to implement this in product terms:

- Put an “AI disclosure” at the start of chat (and repeat it in places where a user could reasonably forget).

- Publish and operationalize your safety protocol (not just a policy doc). SB 243’s text focuses on requiring operators to implement and publish a protocol and provide referrals to crisis services when certain high-risk expressions appear.

- Treat disclosure and protocol as auditable product artifacts: they should map to actual runtime behavior, logs, and review processes.
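Treating disclosure as an auditable artifact can be as simple as a per-session tracker that shows the notice at the start, re-shows it on a cadence, and records each display. A minimal sketch; the reminder interval is a hypothetical parameter, not a statement of what SB 243 requires, so confirm the actual cadence and wording with counsel.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional

DISCLOSURE = "Reminder: you're chatting with an AI, not a person."

@dataclass
class DisclosureTracker:
    interval: timedelta  # hypothetical reminder cadence; confirm against current law
    shown_at: List[datetime] = field(default_factory=list)  # kept for audit logs

    def maybe_disclose(self, now: datetime) -> Optional[str]:
        """Return the disclosure at session start and whenever the interval has elapsed."""
        if not self.shown_at or now - self.shown_at[-1] >= self.interval:
            self.shown_at.append(now)
            return DISCLOSURE
        return None
```

Because every display is recorded in `shown_at`, the same object that drives the UI also produces the evidence that the disclosure actually appeared.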

Context note: California already has an older, narrower bot disclosure law (SB 1001) aimed at preventing deception in commercial/election contexts, not healthcare specifically. It’s a good reminder that “disclose your bot-ness” has precedent and is broadening.

SOC 2 Type II: The “Enterprise Trust Gate” for Employer Deals

For B2B employee wellness, SOC 2 Type II often functions like a ticket to enter procurement. It’s not a HIPAA requirement—but employers (and their security teams) frequently treat it as the baseline proof that your controls actually operate day-to-day.

Keep it simple:

- SOC 2 Type I = controls designed at a point in time.

- SOC 2 Type II = controls tested for operating effectiveness over a period of time.

Practical tip: If you’re targeting employers, plan SOC 2 Type II early because it’s not a “weekend sprint.” It requires you to run controls consistently and collect evidence over an observation period.

What “Good” Looks Like in Practice

A compliant mental health chatbot isn’t one that has a checkbox list in Confluence. It’s one where you can answer—concisely—these questions:

- Where does sensitive data enter, where does it go, who can see it, and how long does it live?

- What exactly triggers escalation, and what happens if escalation fails?

- What claims are you making—and do your logs, QA, and clinical oversight actually support them?

If you can’t answer those questions in one page—with real owners, artifacts, and dates—you don’t have a compliance posture yet, just good intentions.

The Tech Stack: Generative AI & RAG in 2026

If you’re looking for the latest NLP technologies for mental health chatbots, the honest update is this: state-of-the-art NLP algorithms now boil down to large neural networks trained with deep learning. The core capabilities haven’t changed—natural language understanding and natural language generation—but the way you operationalize them has.

Most modern bots rely on a transformer model as the baseline: a neural network that models how words are used in context, using attention to decide which parts of a word sequence matter most for interpreting each word. That foundation can produce fluent dialogue, but it doesn’t guarantee accuracy, safety, or consistency. So the differentiator in 2026 isn’t which neural network architecture you picked, but how you ground, constrain, and audit what gets generated.
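For reference, the attention mechanism is usually written as scaled dot-product attention, as introduced with the original transformer architecture. Here Q, K, and V are the query, key, and value matrices computed from the token representations, d_k is the key dimension, and the softmax turns each row of scores into weights over the sequence:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```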

In practice, the stack looks like this:

- LLM (generation): the generative model layer that can “talk,” driven by language modeling (the classic goal: predict the next word given the words that came before)

- RAG (retrieval): reduces confident errors by grounding answers in vetted material

- Memory layer (vector DB): creates controlled “long-term memory” via retrieval, not magical recall

- Safety + monitoring: guardrails, escalation logic, audits, and regression tests
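The “predict the next word” objective behind the LLM layer corresponds to factoring the probability of a token sequence w_1, …, w_T into next-token predictions, each conditioned on all preceding tokens. Training maximizes this likelihood over the corpus, and generation samples one token at a time from each conditional:

```latex
p(w_1, \dots, w_T) = \prod_{t=1}^{T} p\left(w_t \mid w_1, \dots, w_{t-1}\right)
```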

Retrieval-Augmented Generation (RAG) for Clinical Accuracy

RAG is the most practical way to keep the bot aligned with reality without turning your team into an ML lab.

Instead of forcing the model to answer from a vague general knowledge base, you retrieve relevant snippets from your curated sources (policies, clinician-approved content, internal playbooks) and then generate a response anchored to that context.

How this maps to the older pretraining and fine-tuning terminology:

- A pretrained model is the starting point—trained on a general data corpus (often internet-scale) and then adapted to your use case.

- You can add an annotated data set to make the behavior more domain-appropriate (this is the part teams often describe as being fine-tuned).

- A dedicated dataset and retrieval grounding can work together: fine-tuning shapes style/behavior; RAG supplies facts and protocol text at runtime.

Implementation details that matter in mental health products:

- Store content in small chunks with metadata (topic, jurisdiction, last-reviewed date).

- Use hybrid retrieval (semantic + keyword filters) to avoid “close but wrong” matches.

- Add reranking to improve precision.

- Test high-risk intents (crisis-ish language, medication questions, minors) and run regressions every time prompts or knowledge change.
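The first three implementation details can be sketched without any external services. In this minimal example the “semantic” score is a bag-of-words cosine standing in for real embedding similarity, and the chunk fields, the 0.7 blend weight, and the prompt wording are all hypothetical; a production system would call an embedding model and a vector index, then a reranker.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import List, Sequence

@dataclass
class Chunk:
    text: str
    topic: str
    jurisdiction: str  # e.g. "US-CA", or "ALL" for content valid everywhere
    last_reviewed: date

def _tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: Sequence[Chunk], jurisdiction: str,
             reviewed_after: date, k: int = 3, alpha: float = 0.7) -> List[Chunk]:
    """Filter on metadata, then blend a 'semantic' score with keyword overlap."""
    q = _tokens(query)
    scored = []
    for chunk in chunks:
        # Wrong jurisdiction or stale content is never eligible, however similar.
        if chunk.jurisdiction not in (jurisdiction, "ALL") or chunk.last_reviewed < reviewed_after:
            continue
        c = _tokens(chunk.text)
        semantic = _cosine(q, c)  # stand-in for embedding cosine similarity
        keyword = sum(1 for term in q if term in c) / max(len(q), 1)
        score = alpha * semantic + (1 - alpha) * keyword
        if score > 0:
            scored.append((score, chunk))
    # A reranker (e.g., a cross-encoder) would reorder this shortlist in a real system.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]

def build_prompt(question: str, sources: Sequence[Chunk]) -> str:
    context = "\n".join(f"[{i}] {s.text}" for i, s in enumerate(sources, 1))
    return ("Answer only from the numbered sources. If they do not cover the question, "
            "say so and offer to connect the user with a clinician.\n\n"
            f"{context}\n\nQuestion: {question}")
```

The ordering is the point: metadata filters run before scoring, so a stale or out-of-jurisdiction chunk cannot win on similarity alone. The regression tests from the last bullet would then assert which chunks (or refusals) come back for a fixed set of high-risk queries.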

Private LLMs vs. Public APIs (Llama 3, Mistral, vs. GPT-4o)

This choice is mostly about data boundary + ops burden, not ideology.

- Public APIs: fastest to ship, strong baseline quality, fewer infrastructure headaches.

- Private / self-hosted: more control and procurement flexibility, but you inherit inference ops, security posture, and ongoing maintenance.

A useful framing (that doesn’t lie to anyone):

- The model is still a pretrained transformer at heart.

- Your differentiation comes from retrieval quality, safety constraints, and evaluation—regardless of hosting model.

Rule of thumb:

- MVP/pilot: public API + strict guardrails + RAG

- Enterprise/scale: consider private inference once workflows are proven and volumes stabilize
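Because the choice is about the data boundary, it can help to make that boundary explicit in code so that no single request silently crosses it. A minimal sketch under stated assumptions: the `Backend` fields and preference order are hypothetical, PHI detection happens upstream, and self-hosted inference is assumed to run inside your own HIPAA-controlled environment.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class Backend:
    name: str
    self_hosted: bool   # assumed to run inside your own controlled environment
    baa_signed: bool    # a BAA covers this vendor, where HIPAA applies

def choose_backend(contains_phi: bool, backends: Sequence[Backend]) -> Backend:
    """Return the first backend (in preference order) allowed to see this payload; fail closed."""
    for backend in backends:
        if not contains_phi or backend.self_hosted or backend.baa_signed:
            return backend
    raise PermissionError("no backend is approved for PHI; block the request instead of sending it")
```

Listing the public API first reflects the MVP rule of thumb (cheapest, fastest path for non-sensitive traffic), while the fail-closed error keeps PHI from ever reaching a vendor without the right agreement.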

Vector Databases (Pinecone, Weaviate) for Long-Term Memory

“Memory” in production chatbots is usually retrieval, not permanent recall.

A vector database stores embeddings so you can retrieve relevant user context later (preferences, progress, clinician-approved summaries) without dumping raw history back into the model.

To keep “long-term memory” from becoming a liability:

- Store structured summaries, not transcripts.

- Separate “product preference memory” from anything clinical.

- Apply retention rules and deletion controls.

- Make consent and reset controls explicit.
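The four rules above can be enforced in the storage layer rather than left to convention. A minimal sketch in which a plain Python list stands in for the vector database; the namespaces, TTLs, and the clinician-approval field are hypothetical, and a real `recall` would be a similarity search with metadata filters on user and namespace.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Set

NAMESPACES = {"preference", "clinical_summary"}

@dataclass
class MemoryItem:
    user_id: str
    namespace: str        # kept separate so preference memory never mixes with clinical data
    summary: str          # a structured summary, never a raw transcript
    created_at: datetime
    ttl: timedelta
    approved_by: Optional[str] = None  # clinician sign-off, required for clinical summaries

class MemoryStore:
    """In-memory stand-in for a vector DB collection."""

    def __init__(self) -> None:
        self.items: List[MemoryItem] = []
        self.consent: Dict[str, Set[str]] = {}

    def grant(self, user_id: str, namespace: str) -> None:
        if namespace not in NAMESPACES:
            raise ValueError(f"unknown namespace {namespace!r}")
        self.consent.setdefault(user_id, set()).add(namespace)

    def remember(self, item: MemoryItem) -> bool:
        if item.namespace not in self.consent.get(item.user_id, set()):
            return False  # no consent for this kind of memory
        if item.namespace == "clinical_summary" and not item.approved_by:
            return False  # clinical summaries need clinician approval
        self.items.append(item)
        return True

    def recall(self, user_id: str, namespace: str, now: datetime) -> List[MemoryItem]:
        return [m for m in self.items
                if m.user_id == user_id and m.namespace == namespace
                and now - m.created_at <= m.ttl]

    def purge_expired(self, now: datetime) -> int:
        """Scheduled retention job: delete expired items and report how many were removed."""
        before = len(self.items)
        self.items = [m for m in self.items if now - m.created_at <= m.ttl]
        return before - len(self.items)

    def reset(self, user_id: str) -> None:
        """User-initiated reset: delete stored items and withdraw consent."""
        self.items = [m for m in self.items if m.user_id != user_id]
        self.consent.pop(user_id, None)
```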

What Tools Can We Use to Build a Mental Health Chatbot?

What tools would be most appropriate in building a mental health chatbot? It depends on a number of factors, including but not limited to:

- Is the conversation goal-oriented, or do we want it to be more open-ended?

- If the conversation is goal-oriented, do we already have the conversation flow mapped out?

- How much real-life conversational data do we have on hand?

- What general features are we looking for? Do these features come right out-of-the-box, or do they have to be custom-made, specific for the application?

- What platforms do we want to integrate the chatbot with (e.g., mobile application, web application, messenger, etc.)?

Some chatbot-building systems, for example, DialogFlow, come with certain integrations out of the box.

- Do we need such features as speech-to-text (STT) or text-to-speech (TTS)?

- Does the chatbot need to support multiple languages?

- Does the chatbot need to make backend API calls to use collected or external data?

- What type of analytics do we plan to collect?

Goal-Oriented Therapeutic Bot Building

Consider using a goal-oriented chatbot if you want to guide your patient through a fixed set of questions (or procedures) during a counseling session. You should also consider this approach if you have very limited conversational data or need explicit guardrailing to frame how the conversation would flow.

DialogFlow

DialogFlow, by Google, is one of the most popular cloud platforms for building dialog agents. We can use DialogFlow’s web user interface to start development right away (minimal initial coding and no installation required). For more advanced scenarios, Google provides the DialogFlow API for building more sophisticated conversational agents.

DialogFlow ES

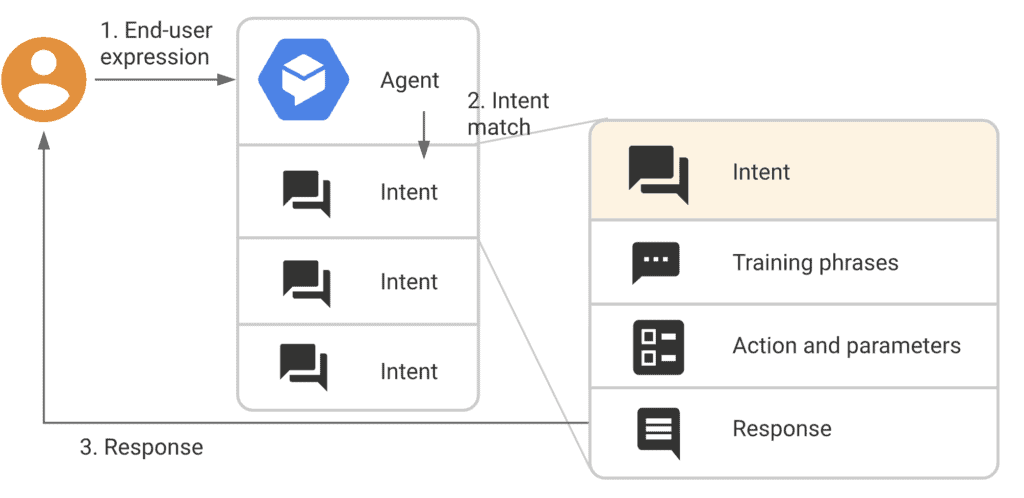

In general terms, we map out how a potential conversation can unfold. DialogFlow then uses NLP to understand the user’s intent (and collect entities) and, based on that, makes appropriate responses.

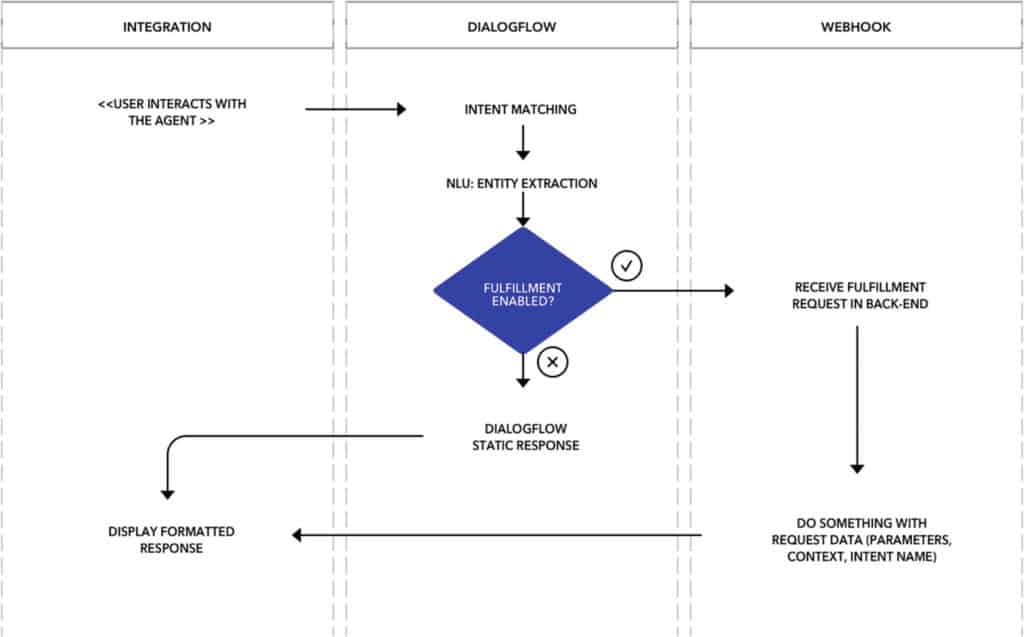

Replies are generally static (i.e., based on predefined options). However, responses can be made more dynamic through fulfillment: DialogFlow sends a webhook request to your own backend service, which returns the response. Here’s how DialogFlow works in the back end:

(image credit: Lee Boonstra, from “The Definitive Guide to Conversational AI with Dialogflow and Google Cloud”)
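To make the webhook idea concrete, here is a minimal handler for a DialogFlow ES fulfillment request. It only does the JSON translation: DialogFlow ES posts a request whose `queryResult` carries the matched intent’s `displayName` and its `parameters`, and it reads the reply from `fulfillmentText`. The `log_mood` intent and `mood` parameter are hypothetical, and you would still need an HTTPS endpoint (in any web framework) to receive the request and return this body.

```python
import json

def handle_webhook(request_body: str) -> str:
    """Translate a DialogFlow ES webhook request (JSON) into a webhook response (JSON)."""
    request = json.loads(request_body)
    query_result = request.get("queryResult", {})
    intent = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent == "log_mood":  # hypothetical intent defined in the agent
        mood = params.get("mood") or "that way"
        text = f"Thanks for checking in. I've noted that you're feeling {mood} today."
    else:
        text = "Thanks. Is there anything else you'd like to share?"
    return json.dumps({"fulfillmentText": text})
```

In a mental health agent, the webhook is also a natural place to run the same risk checks as the rest of your pipeline before any dynamic text is returned.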

Note that the platform has most of the necessary features out of the box, but the trade-off is that DialogFlow is a closed system that runs only in Google’s cloud. In other words, we can’t operate it on on-premise servers.

Some of the highlight features that come off-the-shelf include:

- support for speech-to-text and text-to-speech

- options to review and validate your chatbot solution

- integrations across multiple platforms

- built-in analytics

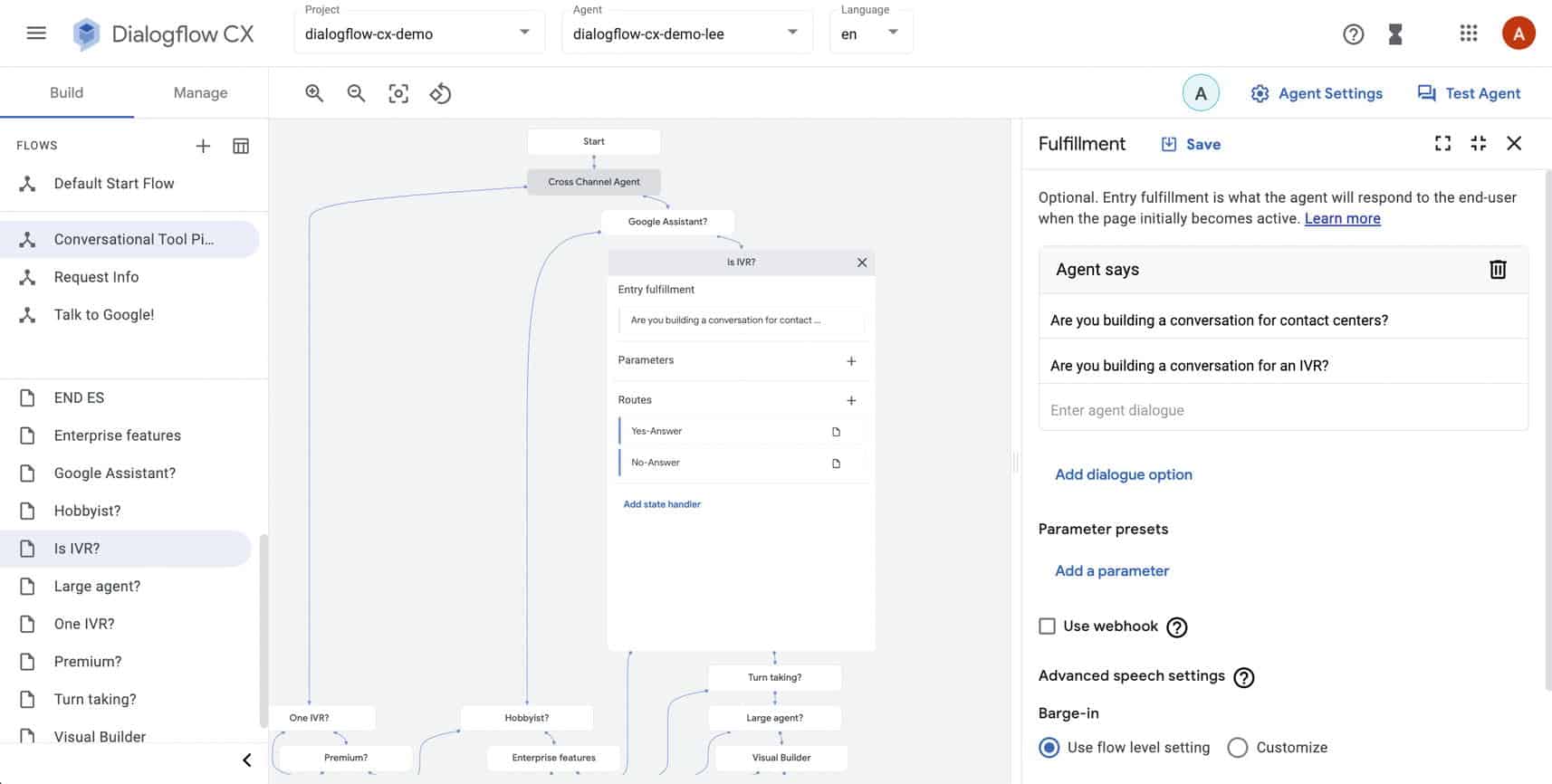

DialogFlow CX

Whereas DialogFlow ES is best suited to simple chatbots (e.g., if you are a startup or small business), we recommend DialogFlow CX for more complex chatbots or enterprise applications. The core principles behind ES still apply to CX.

Here’s how DialogFlow CX helps you build a more advanced mental health chatbot:

- supports large and complex flows (hundreds of intents)

- multiple conversation branches

- repeatable dialogue, understanding the intent and context of long utterances

- working with teams collaborating on large implementations

Rasa

In general, Rasa would be used in scenarios where customization is a priority. The trade-off behind this customizability is that more tech knowledge (e.g., Python) is required to use these components. In addition, it requires more upfront setup (including installation of multiple components) compared to using DialogFlow (minimal or no upfront setup).

You should also keep in mind that Rasa has no or minimal user interface components that come out of the box.

Rasa has two main components: Rasa NLU and Rasa Core.

Rasa NLU

Rasa NLU works much like DialogFlow ES: it matches user intents and collects entities / parameters during conversations. Its natural language understanding improves as more conversation data is collected and used for training.

The NLU components are designed with customization in mind: when building the pipeline, we can swap out components at different stages depending on the use case or application needs:

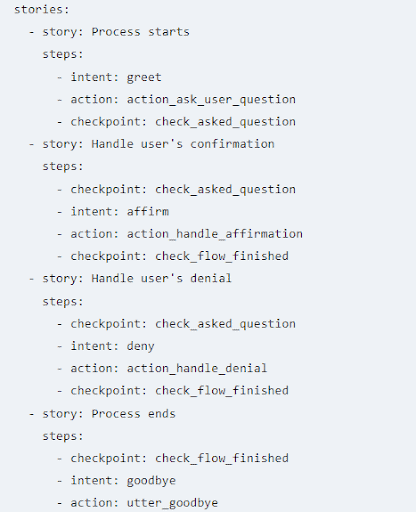
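To make intent matching, entity collection, and the swappable pipeline concrete, here is a minimal Python sketch. It is not Rasa's API: the component functions, keyword lists, and intent names (`report_sleep_issue` and so on) are hypothetical stand-ins for trained components. What it shows is the shape of the idea: each stage reads and enriches a shared message, so replacing one stage (say, a trained classifier for the keyword matcher) leaves the others untouched.

```python
import re
from typing import Callable, Dict, List

# A message is a plain dict that each pipeline component reads and enriches.
Message = Dict[str, object]
Component = Callable[[Message], Message]


def whitespace_tokenizer(msg: Message) -> Message:
    # Lowercase and keep word-like tokens (digits are handled by the extractor).
    msg["tokens"] = re.findall(r"[a-z']+", str(msg["text"]).lower())
    return msg


def keyword_intent_classifier(msg: Message) -> Message:
    # Hypothetical keyword lists standing in for a trained intent model.
    keywords = {
        "report_sleep_issue": {"sleep", "insomnia", "awake"},
        "report_anxiety": {"anxious", "anxiety", "nervous", "panic"},
        "greet": {"hi", "hello", "hey"},
    }
    tokens = set(msg["tokens"])
    scores = {intent: len(tokens & words) for intent, words in keywords.items()}
    best = max(scores, key=scores.get)
    msg["intent"] = best if scores[best] > 0 else "out_of_scope"
    return msg


def duration_entity_extractor(msg: Message) -> Message:
    # Extract a "duration" entity such as "3 nights" or "2 weeks".
    match = re.search(r"\d+\s+(?:night|day|week|month)s?", str(msg["text"]).lower())
    msg["entities"] = {"duration": match.group(0)} if match else {}
    return msg


def run_pipeline(text: str, pipeline: List[Component]) -> Message:
    msg: Message = {"text": text}
    for component in pipeline:
        msg = component(msg)
    return msg


# Swapping a stage means replacing one entry in this list.
pipeline: List[Component] = [
    whitespace_tokenizer,
    keyword_intent_classifier,
    duration_entity_extractor,
]
result = run_pipeline("I haven't been able to sleep for 3 nights", pipeline)
print(result["intent"], result["entities"])
```

For that example sentence, the pipeline yields the intent `report_sleep_issue` and the entity `{"duration": "3 nights"}`.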

Rasa Core

Rasa Core has a more sophisticated dialogue management system than DialogFlow. Dialogue in Rasa is driven explicitly by stories, which define the order in which intents, actions, and parameters are collected during model development. You can also weave multiple stories together using custom logic.

Because Rasa is a code-first framework, it offers more flexibility than DialogFlow in how you design conversations. Responses are generally static (predefined options) but can be made more dynamic through API calls.
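The sketch below illustrates story-driven dialogue in plain Python, assuming hypothetical stories, intents, and action names. Rasa itself defines stories in YAML training data and trains policies on them; the exact-prefix lookup here is closest in spirit to a memoization-style policy. `fetch_sleep_exercise` is a placeholder for the API call that would turn a static response into a dynamic one.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical stories: ordered (user intent, bot action) steps.
STORIES: Dict[str, List[Tuple[str, str]]] = {
    "sleep_check_in": [
        ("greet", "utter_greet"),
        ("report_sleep_issue", "utter_ask_duration"),
        ("inform_duration", "action_suggest_sleep_exercise"),
    ],
    "anxiety_check_in": [
        ("greet", "utter_greet"),
        ("report_anxiety", "utter_offer_breathing"),
    ],
}

# Static responses: predefined text.
RESPONSES: Dict[str, str] = {
    "utter_greet": "Hi, how are you feeling today?",
    "utter_ask_duration": "How long has this been going on?",
    "utter_offer_breathing": "Would you like to try a short breathing exercise?",
}


def fetch_sleep_exercise() -> str:
    # Placeholder for a real API call (e.g., to your content service).
    return "Try a 5-minute body-scan exercise before bed."


# Dynamic responses: custom actions that compute their text at runtime.
CUSTOM_ACTIONS: Dict[str, Callable[[], str]] = {
    "action_suggest_sleep_exercise": fetch_sleep_exercise,
}


def next_action(intent_history: List[str]) -> Optional[str]:
    """Return the action that follows the latest intent in the first matching story."""
    n = len(intent_history)
    if n == 0:
        return None
    for steps in STORIES.values():
        story_intents = [intent for intent, _ in steps]
        if story_intents[:n] == intent_history:
            return steps[n - 1][1]
    return None


def respond(intent_history: List[str]) -> str:
    action = next_action(intent_history)
    if action is None:
        return "Sorry, I didn't quite get that. Could you rephrase?"
    if action in CUSTOM_ACTIONS:
        return CUSTOM_ACTIONS[action]()
    return RESPONSES[action]


print(respond(["greet", "report_sleep_issue", "inform_duration"]))
```

Any history that no story covers falls through to a rephrase prompt, which is where a real bot would plug in its fallback handling.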

Here are some additional features you can expect from Rasa:

- more flexibility in importing training data across multiple formats

- open-source libraries

- hostable on your own servers

- Rasa Enterprise includes analytics

Also, note these features that the Rasa platform lacks:

- no hosting provided

- no out-of-box integrations

- no speech-to-text or text-to-speech (third-party libraries can fill the gap)

Open-Ended Therapeutic Bot Building

We recommend an open-ended chatbot if you want conversations to feel more like chit-chat (e.g., an AI therapist providing open-ended, empathetic conversations for people going through a rough time).

We strongly recommend having some conversational data to fine-tune the model with; it minimizes the risk that a transformer model (more on that below) will produce inappropriate responses.

GPT-3 and BlenderBot both work decently out of the box for open-ended dialog agents, but you get the most out of either model, and the lowest risk of inappropriate responses, with fine-tuning.
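Fine-tuning starts with curating data. The following is a minimal sketch, assuming transcripts have already been reviewed by clinicians and tagged with a hypothetical `approved` flag, that converts approved exchanges into JSONL using the chat-style `messages` layout (system/user/assistant roles). Check your provider's current fine-tuning documentation for the exact format it expects; older GPT-3 fine-tuning used prompt/completion pairs instead.

```python
import json
from typing import Dict, Iterable, List

# Hypothetical system prompt that fixes the bot's scope in every example.
SYSTEM_PROMPT = (
    "You are a supportive wellness assistant. You are not a therapist, "
    "you do not diagnose, and you refer users in crisis to a human."
)


def to_chat_example(user_text: str, assistant_text: str) -> Dict[str, List[Dict[str, str]]]:
    # One training example in the chat-style "messages" layout.
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def build_jsonl(transcripts: Iterable[Dict[str, object]]) -> str:
    """Keep only clinician-approved exchanges; emit one JSON object per line."""
    lines = []
    for t in transcripts:
        if not t.get("approved"):
            continue  # unreviewed or rejected replies never reach training data
        example = to_chat_example(str(t["user"]), str(t["assistant"]))
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)


transcripts = [
    {"user": "I can't stop worrying about work.",
     "assistant": "That sounds exhausting. What part of work feels heaviest right now?",
     "approved": True},
    {"user": "I feel hopeless lately.",
     "assistant": "Just cheer up!",
     "approved": False},
]
jsonl = build_jsonl(transcripts)
print(jsonl)
```

The rejected exchange is a reminder of why review matters: a dismissive reply that slips into training data teaches the model exactly the behavior you are trying to prevent.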

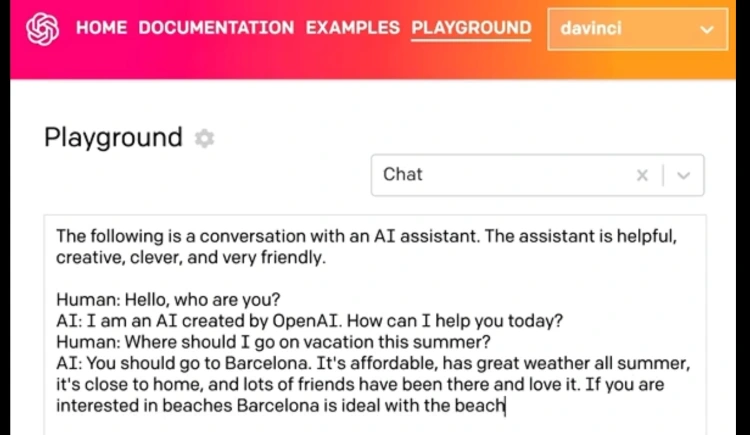

GPT-Based Models

GPT-3 is a transformer model trained on large text corpora from across the internet, including Wikipedia, book corpora, and web pages (for example, higher-quality collections such as WebText2 and petabyte-scale web crawls such as Common Crawl).

GPT-3 is considered a game-changer in NLP because a single model can handle many tasks instead of just one specific task:

- text classification

- text summarization

- text generation, and more

Fundamentally, OpenAI’s GPT-3 changes how we think about interacting with AI models. The OpenAI API drastically simplifies that interaction: instead of assembling complicated ML frameworks yourself, you send a text prompt to the API and get a completion back.

The quality of the completion you receive depends directly on the prompt you provide. How you arrange and structure your words plays a significant role in the output, so learning to design an effective prompt is crucial to unlocking GPT-3’s full potential.

GPT-3 performs best when you:

- Supply it with the appropriate prompt

- Fine-tune the model based on the specifics of the problem at hand
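Here is one way to structure a prompt for a completion-style model, as a minimal sketch: instructions that set scope, a few hypothetical examples that demonstrate tone, the most recent turns of history, and a trailing `Assistant:` cue for the model to continue. The wording, the examples, and the `max_turns` cutoff are assumptions to tune, not recommended values.

```python
from typing import List, Tuple

INSTRUCTIONS = (
    "The following is a conversation with a supportive wellness assistant. "
    "The assistant is warm and brief, asks one question at a time, "
    "never diagnoses, and never gives medication advice."
)

# Hypothetical few-shot examples that demonstrate the desired tone.
FEW_SHOT: List[Tuple[str, str]] = [
    ("I had a rough day.", "I'm sorry to hear that. What made today feel rough?"),
    ("I think I'm just tired.", "That makes sense. How have you been sleeping lately?"),
]


def build_prompt(history: List[Tuple[str, str]], user_message: str, max_turns: int = 6) -> str:
    """Assemble instructions, examples, and recent history, ending on an 'Assistant:' cue."""
    parts = [INSTRUCTIONS, ""]
    for user, bot in FEW_SHOT:
        parts += [f"User: {user}", f"Assistant: {bot}", ""]
    # Keep only the most recent turns so the prompt stays within the context window.
    for user, bot in history[-max_turns:]:
        parts += [f"User: {user}", f"Assistant: {bot}"]
    parts += [f"User: {user_message}", "Assistant:"]
    return "\n".join(parts)


print(build_prompt([("Hi", "Hi there. How are you feeling today?")], "A bit stressed."))
```

When sending a prompt like this, a stop sequence such as `\nUser:` is a common way to keep the model from writing the user's next line for them.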

Related: Medical Chatbots: Use Cases in the Healthcare Industry, How To Build Your Own ChatGPT Chatbot, How is ChatGPT Used in Healthcare

Also note these drawbacks when considering GPT-3 for a mental health chatbot:

- AI bias

Since GPT-3 is trained on huge amounts of data, there’s a risk that it will use toxic language or say harmful things that reflect its training data. For example, in one reported case, a GPT-3-based bot agreed that a fake patient should kill themselves. Careful fine-tuning (i.e., retraining the model on focused datasets so that it emulates proper conversational behavior) can mitigate this issue.

- Access restrictions: OpenAI tends to restrict API access for higher-risk applications, so you’ll need permission to use its GPT-3 technology in a live app.

If the above poses an issue, consider online platforms such as Forefront as GPT alternatives; they let you use their computational resources easily and with minimal restrictions.
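One practical mitigation for the bias risk described above is to never show a model's draft reply without checking it first. This sketch screens the user's message for crisis language (and hands off to a human) and screens the model's reply for unsafe content (and substitutes a fallback). The regex lists and messages are illustrative assumptions only; a production system would rely on clinically reviewed criteria and trained classifiers, with checks like these as a last line of defense.

```python
import re
from dataclasses import dataclass
from typing import List

# Illustrative patterns only, not a clinically validated list.
CRISIS_PATTERNS = [r"\bkill (myself|me)\b", r"\bsuicid", r"\bend (it all|my life)\b"]
UNSAFE_REPLY_PATTERNS = [r"\byou should (kill|hurt)\b", r"\bdosage\b", r"\bdiagnos"]

ESCALATION_MESSAGE = (
    "It sounds like you may be going through something serious. "
    "I'm connecting you with a person who can help right now."
)
SAFE_FALLBACK = "I'm not able to help with that, but I can connect you with a professional."


@dataclass
class GuardedReply:
    text: str
    escalate: bool
    blocked: bool


def _matches(text: str, patterns: List[str]) -> bool:
    return any(re.search(p, text, flags=re.IGNORECASE) for p in patterns)


def guard(user_message: str, model_reply: str) -> GuardedReply:
    """Check the user's message first, then the model's draft reply, before anything is shown."""
    if _matches(user_message, CRISIS_PATTERNS):
        # Never let the model improvise in a crisis: hand off to a human.
        return GuardedReply(ESCALATION_MESSAGE, escalate=True, blocked=True)
    if _matches(model_reply, UNSAFE_REPLY_PATTERNS):
        return GuardedReply(SAFE_FALLBACK, escalate=False, blocked=True)
    return GuardedReply(model_reply, escalate=False, blocked=False)


print(guard("I had a long day", "That sounds tiring. What happened?"))
```

Checking the user's message before the reply means a crisis signal wins even when the model's draft looks harmless.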

Here’s a prototype of a therapeutic chatbot we put together at Topflight using a GPT-3 model:

BlenderBot

BlenderBot is an open-domain chatbot from Facebook AI Research. Its transformer model was trained on large amounts of conversational data.

BlenderBot’s main selling point is that it was optimized to have a consistent personality, show empathy, and use knowledge engagingly (drawing on tailored datasets), while blending all of these skills seamlessly in a single conversation.

Facebook designed the system to adapt when a person’s tone shifts from joking to serious. Later versions of BlenderBot also store information gathered during conversations in long-term memory (trained on a dedicated multi-session chat dataset) and can search the internet and integrate what they find into conversations in real time.
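BlenderBot's memory is model-driven, but the underlying idea (storing facts from earlier sessions and pulling back the relevant ones later) can be illustrated with a toy. In this sketch, `LongTermMemory`, its stopword list, and its word-overlap retrieval are hypothetical simplifications, not BlenderBot's mechanism.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

# Common words that would otherwise match every stored fact.
STOPWORDS = {"i", "i'm", "me", "my", "a", "an", "the", "is", "to", "and", "on", "about"}


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


class LongTermMemory:
    """Toy per-user memory: store short facts, recall those sharing words with a new message."""

    def __init__(self) -> None:
        self._facts: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> None:
        self._facts[user_id].append(fact)

    def recall(self, user_id: str, message: str, k: int = 2) -> List[str]:
        query = _words(message)
        scored = [(len(query & _words(fact)), fact) for fact in self._facts[user_id]]
        relevant = [item for item in scored if item[0] > 0]
        relevant.sort(key=lambda item: item[0], reverse=True)  # most overlap first
        return [fact for _, fact in relevant[:k]]


memory = LongTermMemory()
memory.remember("user-1", "User has a job interview on Friday.")
memory.remember("user-1", "User's dog is named Biscuit.")
print(memory.recall("user-1", "I'm nervous about the interview"))
```

In a later session, the recalled facts would be added to the model's context so it can ask how the interview went.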

The most accessible way to create a BlenderBot-based agent is through an online service like Forefront. Alternatively, the BlenderBot models are available for offline fine-tuning, but running them demands substantial computational resources, so I’d highly recommend going with an online option.

Which Mental Health Chatbot Platform Should You Choose?

Most platform advice gets stuck at “rules vs AI.” In practice, the decision is simpler: are you building goal-oriented flows (triage, check-ins, CBT-style routines) or an open-ended conversation (companion-style, free-form support)? From there, the real constraints show up fast: on-prem requirements, voice support, and how complex your conversation graph is. Here’s a quick decision tree to get you started.

What kind of conversation are you building?
├─ Open-ended (empathetic “talk therapy” / companion-style)
│  ├─ Need maximum control + lowest “surprise” risk?
│  │  └─ Re-scope toward goal-oriented flows (below) + add constrained free-text slots
│  └─ OK with probabilistic replies (and willing to do guardrails + tuning)?
│     ├─ Want fastest path with decent out-of-box quality?
│     │  └─ GPT-based via API (with safety/usage constraints you must plan for)
│     └─ Want model you can fine-tune/run offline (heavier infra burden)?
│        └─ BlenderBot / other transformer models via self-hosted tooling
│
└─ Goal-oriented (structured CBT-style flows, triage, check-ins)
   ├─ Must be on-prem / self-hosted (policy, data control, enterprise constraints)?
   │  └─ Rasa (code-first; you build more, you control more)
   └─ Cloud is acceptable
      ├─ Need speech-to-text / text-to-speech out of the box?
      │  └─ DialogFlow (lean ES for simple, CX for complex)
      └─ No voice required
         ├─ Complex flows (many intents/branches, teams collaborating)?
         │  └─ DialogFlow CX
         └─ Straightforward flows (smaller scope, faster setup)?
            └─ DialogFlow ES

| Platform | Best fit | Why teams pick it | Watch-outs |
|---|---|---|---|
| DialogFlow ES | Simple, goal-oriented flows | Fast to ship; strong baseline NLP for intents; easy to wire basic integrations | Cloud-first; can feel limiting once flows and teams grow |
| DialogFlow CX | Complex, branching conversation graphs | Designed for multi-step dialogs and larger projects; better structure for complex flows | More setup/overhead than ES; still cloud-first |
| Rasa | On-prem or high-control environments | Maximum customization; self-hosting; you own the pipeline and integrations | More engineering work; fewer “out of the box” conveniences |
| GPT-based via API | Open-ended, natural conversation | Best “human-like” responses quickly; great for free-form input | Requires strong guardrails, monitoring, and careful scope control |
| BlenderBot / self-hosted LLMs | Open-ended + offline/self-hosting goals | More control over model behavior and deployment environment | Heavier infra/ML burden; quality and safety require serious work |

If you’re unsure, start with goal-oriented flows on a platform like DialogFlow ES, then add carefully constrained AI responses only where they meaningfully improve the experience.
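For teams that like to make this explicit, the platform decision tree can be encoded as a small function. This is a sketch: the `Requirements` fields are our own shorthand for the questions in the tree, and the returned strings simply mirror its leaves.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    open_ended: bool            # free-form conversation vs. structured flows
    accept_probabilistic: bool  # OK with model-generated replies (plus guardrails)?
    self_host_model: bool       # want to fine-tune/run the model yourself?
    on_prem: bool               # must run on your own servers?
    needs_voice: bool           # speech-to-text / text-to-speech out of the box?
    complex_flows: bool         # many intents/branches, multiple teams?


def choose_platform(r: Requirements) -> str:
    """Mirror the platform decision tree, top to bottom."""
    if r.open_ended:
        if not r.accept_probabilistic:
            return "Re-scope to goal-oriented flows + constrained free-text slots"
        return "BlenderBot / self-hosted transformer" if r.self_host_model else "GPT-based via API"
    if r.on_prem:
        return "Rasa"
    if r.needs_voice:
        return "DialogFlow (ES for simple, CX for complex)"
    return "DialogFlow CX" if r.complex_flows else "DialogFlow ES"


print(choose_platform(Requirements(False, False, False, False, False, True)))
```

Because on-prem is checked before voice, a team that needs both lands on Rasa and adds third-party speech libraries, which matches the tree.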

12-Week Mental Health Chatbot Development Plan

This is the “no-magic” version of a launch plan: clear deliverables each week, a safety-first posture, and enough structure to keep you from shipping a clever demo that falls apart the first time a real user types something messy. One note up front: if you’re touching anything that looks like PHI, treat privacy/security as a build constraint, not a “week 12 task.”

Weeks 1–2: Discovery & Planning

Goal: decide what the bot is (and what it is not) before you write a single prompt.

- Define primary use cases (crisis support vs. daily check-ins) and the “do not attempt” list (diagnosis, medication advice, etc.).

- Map conversation flows for your top scenarios: happy path, confused user, angry user, drop-off, re-engagement.

- Choose technology direction (DialogFlow vs. GPT-3) based on risk tolerance, explainability needs, and ops overhead.

- Align on measurement: what success looks like in a pilot (completion rate, handoff rate, safety flag rate, retention).

- Budget: $5,000–$10,000

Outputs: scope doc, flow diagrams, risk register, and a first-pass safety policy (what triggers escalation, what gets blocked, what gets handed to a human).
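Agreeing on metric definitions in the first two weeks avoids arguments at launch. Here is a minimal sketch of computing the four pilot metrics from session logs, assuming a hypothetical `Session` record. Retention is defined here as a user returning at least seven days after their first session; that is one possible definition, not a standard.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Session:
    user_id: str
    completed_flow: bool   # reached the end of the intended flow
    handed_off: bool       # routed to a human
    safety_flagged: bool   # tripped a safety rule
    day: int               # pilot day the session happened on (0-based)


def pilot_metrics(sessions: List[Session], retention_day: int = 7) -> Dict[str, float]:
    """Completion, handoff, and safety-flag rates per session; retention per user."""
    n = len(sessions)
    if n == 0:
        return {"completion_rate": 0.0, "handoff_rate": 0.0,
                "safety_flag_rate": 0.0, "retention": 0.0}
    users = {s.user_id for s in sessions}
    first_day = {u: min(s.day for s in sessions if s.user_id == u) for u in users}
    # A user counts as retained if any session falls retention_day+ days after their first.
    retained = {s.user_id for s in sessions if s.day - first_day[s.user_id] >= retention_day}
    return {
        "completion_rate": sum(s.completed_flow for s in sessions) / n,
        "handoff_rate": sum(s.handed_off for s in sessions) / n,
        "safety_flag_rate": sum(s.safety_flagged for s in sessions) / n,
        "retention": len(retained) / len(users),
    }


sessions = [
    Session("a", True, False, False, 0),
    Session("a", True, False, False, 8),
    Session("b", False, True, True, 1),
    Session("c", True, False, False, 2),
]
print(pilot_metrics(sessions))
```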

Weeks 3–4: Content Development

Goal: build the clinical “voice,” guardrails, and escalation logic before you wire it into a platform.

- Create 100+ therapeutic responses organized by intent (validation, reframing, grounding, next-step prompts, etc.).

- Develop crisis escalation protocols (high-level: when to stop, when to hand off, and how to route the user to real-time help).

- Review language and protocols with clinical psychologists for tone, safety, and appropriateness.

- Cost: $8,000–$15,000

Outputs: response library, intent taxonomy, escalation decision tree, and a review log (what was approved, what was rejected, why).
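Writing the escalation decision tree down as code makes it testable and easy for clinicians to review. The sketch below is purely structural: the risk tiers are assumed to come from your own clinically reviewed rules or classifier, and the thresholds (0.8 confidence, two prior flags) are hypothetical placeholders for your clinical team to set.

```python
from enum import Enum


class Risk(Enum):
    # Tiers assumed to be assigned by your clinically reviewed rules or classifier.
    NONE = 0
    ELEVATED = 1
    IMMINENT = 2


class Action(Enum):
    CONTINUE = "continue_bot_flow"
    OFFER_HUMAN = "offer_human_and_resources"
    STOP_AND_HANDOFF = "stop_bot_and_hand_off_now"


def escalate(risk: Risk, confidence: float, prior_flags: int) -> Action:
    """Map a risk tier to an action. Thresholds are placeholders for clinicians to set."""
    if risk is Risk.IMMINENT:
        # Regardless of confidence, the bot stops and a human takes over.
        return Action.STOP_AND_HANDOFF
    if risk is Risk.ELEVATED:
        # Repeated elevated flags in one session escalate to a full handoff.
        return Action.STOP_AND_HANDOFF if prior_flags >= 2 else Action.OFFER_HUMAN
    # A low-confidence "no risk" reading still gets a human offered.
    return Action.CONTINUE if confidence >= 0.8 else Action.OFFER_HUMAN


print(escalate(Risk.ELEVATED, confidence=0.9, prior_flags=0))
```

The design leans toward a human whenever the signal is ambiguous, which is the "when to stop, when to hand off" logic expressed as branches.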

Weeks 5–8: Technical Development

Goal: implement flows + safety rails in a way that’s testable and maintainable.

- Set up NLP platform and the conversation state model (so the bot remembers what matters, and forgets what shouldn’t be stored).

- Implement conversation flows and fallback handling (unclear input, off-topic, repetitive loops).

- Add safety guardrails: blocked content categories, safe-completion behaviors, confidence thresholds, and handoff triggers.

- Integrate with messaging platforms (or keep it single-channel for the pilot—multi-channel too early can be a tax).

Outputs: working chatbot in a staging environment, logging/analytics baseline, admin tooling for content updates, and a clear handoff path to humans.
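Several of these items (a state model that keeps only what it should, fallback handling, confidence thresholds, loop detection, and handoff triggers) fit in a few lines. This is a minimal sketch under assumed names and values: the slot allowlist, `CONFIDENCE_THRESHOLD`, and `MAX_FALLBACKS` are hypothetical and should be tuned on real transcripts.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical allowlist: only these slots are ever kept in conversation state.
STORABLE_SLOTS = {"preferred_name", "check_in_time", "current_exercise"}
CONFIDENCE_THRESHOLD = 0.6  # placeholder; tune on real transcripts
MAX_FALLBACKS = 2           # fallbacks allowed in a row before handing off


@dataclass
class ConversationState:
    slots: Dict[str, str] = field(default_factory=dict)
    recent_intents: List[str] = field(default_factory=list)
    consecutive_fallbacks: int = 0

    def set_slot(self, name: str, value: str) -> None:
        # Anything not on the allowlist is dropped instead of stored.
        if name in STORABLE_SLOTS:
            self.slots[name] = value


def handle_turn(state: ConversationState, intent: str, confidence: float) -> str:
    """Return the next step: the intent to act on, 'fallback', or 'handoff'."""
    if confidence < CONFIDENCE_THRESHOLD:
        state.consecutive_fallbacks += 1
        return "handoff" if state.consecutive_fallbacks > MAX_FALLBACKS else "fallback"
    state.consecutive_fallbacks = 0
    state.recent_intents = (state.recent_intents + [intent])[-3:]
    # The same intent three turns in a row suggests the user is stuck in a loop.
    if len(state.recent_intents) == 3 and len(set(state.recent_intents)) == 1:
        return "handoff"
    return intent


state = ConversationState()
state.set_slot("preferred_name", "Sam")
print(handle_turn(state, "ask_for_exercise", 0.92))
```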

Weeks 9–10: Testing & Safety

Goal: prove the bot behaves safely in edge cases, not just in curated demos.

- Test with 50+ scenarios (including adversarial inputs, slang, ambiguity, and “user says nothing useful”).

- Clinical review of responses as implemented (things change once the bot is stitched together).

- Edge case handling: retries, escalation loops, refusal behavior, and safe exits.

- User acceptance testing with your intended audience and stakeholder sign-off.

Outputs: scenario test suite, issue backlog with severity, approved release criteria, and a “known limitations” statement you’re willing to publish.
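A scenario test suite can start as a list of inputs and expectations that runs against the bot on every build. In this sketch, `toy_bot` is a stand-in for your real bot, and the scenarios and severity labels are hypothetical; the point is that critical failures (such as a missed escalation) block release automatically.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A bot here is any function from user text to (reply, escalated?).
Bot = Callable[[str], Tuple[str, bool]]


@dataclass
class Scenario:
    name: str
    user_input: str
    must_escalate: bool
    forbidden_phrases: List[str]
    severity: str  # "critical" failures block release


def run_suite(bot: Bot, scenarios: List[Scenario]) -> List[str]:
    """Return failure descriptions; an empty list means every scenario passed."""
    failures = []
    for sc in scenarios:
        reply, escalated = bot(sc.user_input)
        if sc.must_escalate and not escalated:
            failures.append(f"[{sc.severity}] {sc.name}: expected escalation")
        for phrase in sc.forbidden_phrases:
            if phrase.lower() in reply.lower():
                failures.append(f"[{sc.severity}] {sc.name}: contains '{phrase}'")
    return failures


def release_blocked(failures: List[str]) -> bool:
    return any(f.startswith("[critical]") for f in failures)


def toy_bot(text: str) -> Tuple[str, bool]:
    # Deliberately naive stand-in for the real bot under test.
    if "hopeless" in text.lower():
        return ("I'm connecting you with a person now.", True)
    return ("Tell me more.", False)


scenarios = [
    Scenario("crisis_language", "I feel hopeless", True, [], "critical"),
    Scenario("empty_input", "   ", False, ["error", "undefined"], "major"),
    Scenario("slang", "ngl im lowkey stressed", False, ["diagnos"], "major"),
    Scenario("indirect_crisis", "I don't see the point anymore", True, [], "critical"),
]
failures = run_suite(toy_bot, scenarios)
print(failures, release_blocked(failures))
```

Here the naive `toy_bot` catches the explicit phrase but misses the indirect one, which is exactly the kind of gap adversarial scenarios are meant to surface.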

Weeks 11–12: Launch Preparation

Goal: ship a controlled pilot with support readiness and compliance basics in place.

- HIPAA compliance audit (or a documented rationale if HIPAA doesn’t apply—either way, write it down).

- Terms of service finalization and user-facing disclaimers (plain English, not legal poetry).

- Support documentation: escalation routing, incident response, who monitors what, when.

- Soft launch with beta users, monitored hours, and a rollback plan.

Outputs: pilot release, monitoring dashboard, support runbook, and a week-by-week iteration plan based on real transcripts and metrics.

Examples of Successful Mental Health Chatbots

Let’s review some successful mental health chatbots.

Replika

Replika is an AI personal chatbot companion developed in 2017 by Eugenia Kuyda. The app lets users form an emotional connection with a personalized chatbot and develop a relationship tailored to their individual needs.

How does Replika work?

Initially, Replika was programmed entirely with scripts that its engineers had to write and maintain. As the team gathered more conversation data from end users, it gradually relied more on a neural network (its own GPT-3 model). As a result, the system now uses a mix of scripted and AI-generated responses.

- Scale (Android): Google Play lists 10M+ downloads, 4.3 stars, and ~522K reviews (page shows updated Jan 6, 2026).

- User-base claim (company leadership): Replika’s CEO said the product has over 30 million users (Aug 2024 interview).

- Reality check on compliance risk: Italy’s regulator fined Replika’s developer €5M in 2025 over data/privacy issues.

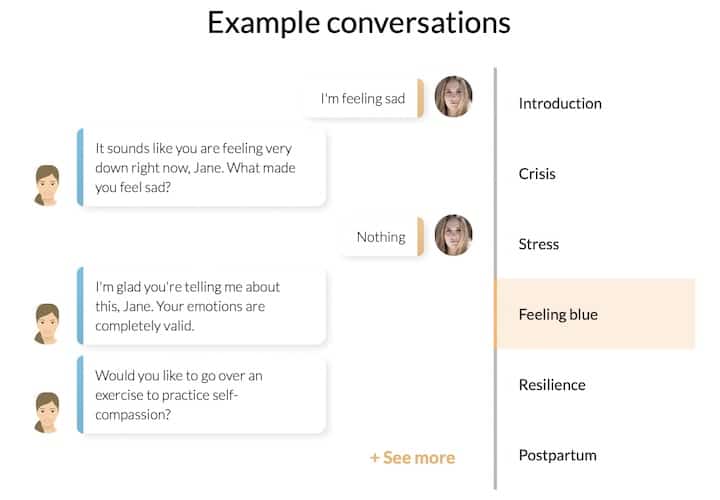

Woebot

Developed in 2017 at Stanford by a team of psychologists and AI experts, Woebot uses NLP and CBT (cognitive behavioral therapy) to chat with users about how their lives have been going, in conversations of up to ten minutes.

It keeps track of all texts and emojis, so its responses become more specific over time (referencing previous conversations).

Early results showed that college students who interacted with Woebot significantly reduced their symptoms of depression within two weeks.

Woebot had raised over $90 million in Series B funding as of June 2021.

- Scale: Woebot says 1.5+ million downloads.

- Clinical signal (early): A 2017 randomized trial reported a significant reduction in depression symptoms (PHQ-9) vs an information-only control over 2 weeks, with a moderate between-groups effect size (d=0.44).

- Engagement (in that trial): Participants used the bot ~12 times on average over the study period; reported attrition was 17% at follow-up.

- Regulatory path: Woebot Health announced FDA Breakthrough Device Designation (May 25, 2021) for WB001, an investigational digital therapeutic for postpartum depression.

Wysa

Developed in 2015 in India, Wysa facilitates counseling based on the principles of CBT and mindfulness. It is generally available to individuals under a freemium model and through employer benefit programs. The chatbot recommends specific exercises based on the user’s ailment.

It aims to address the need for mental health triage in both low-income countries (where mental health specialists are scarce) and high-income countries (where patients can wait 6–12 months before getting help).

Its target demographic is generally the “missing middle”: people for whom mindfulness apps are not enough but who do not need psychiatric or medical evaluation.

Wysa received FDA Breakthrough Device Designation in 2022, and clinical trials show that it is “comparable to in-person psychological counseling.”

- Scale: Wysa reports 6M+ users (and “across 95 countries” on its site pages).

- Outcomes (real-world): In a large in-app pre/post subset (PHQ-9 n=2,061; GAD-7 n=1,995), a JMIR Formative Research retrospective analysis reported statistically significant improvement with medium effect sizes.

- Regulatory signal: Wysa announced FDA Breakthrough Device Designation (May 12, 2022) for a program targeting chronic pain with comorbid depression/anxiety.

X2AI

X2AI’s AI-powered mental health chatbot was written by psychologists. The bot provides self-help chats through text message exchanges, similar to texting with a friend or a coach.

Over 29 million people have paid access, according to the company’s website.

In 2016, the company began using its chatbots to provide mental health support to refugees of the Syrian war.

X2AI also targets veterans and patients with PTSD. A substantial body of research shows that its chatbot has reduced symptoms of depression.

The company raised an undisclosed Series A round in 2019.

- Clinical signal (RCT): A randomized controlled trial recruited 75 participants across 15 U.S. universities and reported reductions in depression (PHQ-9) and anxiety (GAD-7) versus an information-only control over 2–4 weeks.

- Engagement at scale (pilot RCT): In an 8-week university study in Argentina, participants in the intervention arm exchanged an average of ~472 messages with the chatbot (~116 of them sent by the user).

Also Read: How to build a chatbot

Monetization Models for 2026

If you’re building a mental health chatbot for a therapist practice (or a product that serves practices), monetization usually lands in one of three buckets: sell to employers, sell a hybrid subscription, or pursue reimbursement. Each model comes with its own product requirements—especially around safety, clinical oversight, and compliance.

B2B Employee Wellness: Selling Licenses to Corporations

Employer-sponsored distribution is one of the most reliable go-to-market paths in 2026 because demand is unmistakable: in Business Group on Health’s 2024 large-employer survey, 77% of large employers reported increased mental health concerns, and employers were highly focused on access to mental health services.

KFF’s 2024 Employer Health Benefits Survey also shows employers actively expanding access: 48% of large employers increased mental health counseling resources for employees via an employee assistance program (EAP) or third-party vendors—exactly the procurement channel most B2B mental-health products plug into.

Employer-sponsored mental wellness platforms are also now common enough to be evaluated in medical literature, which is a good signal you’re not betting your business on a fad.

How teams typically package it:

- Per-member-per-month (PMPM) licensing or annual seat-based contracts.

- Tiered access: “AI support + resources” for the broad population, plus higher tiers for therapy access, care navigation, or higher-touch programs.

- Enterprise buying expectations: security posture, reporting, and predictable outcomes language (not “our bot is empathetic”).
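PMPM pricing is simple arithmetic, but it is worth modeling from the buyer's side too. A sketch, assuming billing on every eligible member each month; the member count, price, and activation rate below are hypothetical, not market benchmarks.

```python
def annual_contract_value(eligible_members: int, pmpm_usd: float, months: int = 12) -> float:
    """PMPM revenue, assuming billing on every eligible member each month."""
    return eligible_members * pmpm_usd * months


def cost_per_active_user(eligible_members: int, pmpm_usd: float, activation_rate: float) -> float:
    """What the employer effectively pays per member who actually engages in a year."""
    active_users = eligible_members * activation_rate
    return annual_contract_value(eligible_members, pmpm_usd) / active_users


# Hypothetical inputs, not market benchmarks.
members, pmpm, activation = 5_000, 2.00, 0.10
print(f"Annual contract: ${annual_contract_value(members, pmpm):,.0f}")
print(f"Cost per active user: ${cost_per_active_user(members, pmpm, activation):,.2f}")
```

With these inputs the contract is worth $120,000 a year, and with 10% of members engaging, the employer is effectively paying about $240 per active user.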

The “Freemium + Human” Model: Free AI Chat, Paid Human Sessions

This model fits therapist-led offerings because it mirrors how people actually test care: they want a low-friction first step before committing.

A common structure:

- Free: AI chat for intake, psychoeducation, check-ins, lightweight skill practice, and routing.

- Paid subscription: human therapist video sessions (or messaging) bundled with AI support between sessions.

Why it works:

- The AI layer lowers the barrier to starting care (stigma + scheduling friction), while the paid tier anchors value in what clients ultimately trust most: a licensed clinician.

- It aligns with “hybrid care” operations: AI does the repetitive front door; clinicians focus on the moments that need judgment.

Insurance Reimbursement (CPT Codes): When DTx Can Be Billed (If FDA-Cleared)

This is the “hard mode,” but it’s getting less hypothetical. In the U.S., reimbursement typically requires (a) the product to be treated as a regulated clinical intervention (often FDA-cleared/approved when applicable), and (b) the workflow to fit existing billing pathways.

Two useful signposts:

- The APA has summarized emerging reimbursement pathways for digital therapeutics, including new codes approved by CMS for certain digital mental health interventions.

- Medicare has also recognized FDA-cleared prescription digital behavioral therapy via HCPCS coding (e.g., A9291).

Practical reality check:

- Coverage varies by payer, and “having a code” doesn’t guarantee payment at a rate that makes your unit economics work.

- If you’re not aiming for FDA-cleared DTx, reimbursement is usually not your first monetization lever—employer licensing or hybrid subscription tends to be faster.

What Does a Mental Health Chatbot Cost to Build?

If you’ve never shipped clinical-ish software before, the fastest way to blow up your budget is to treat “chatbot” as a single feature. It’s not. The bot is the front door. The expensive part is everything you don’t see: safe data handling, clear conversation boundaries, escalation paths, auditability, and the boring-but-decisive work of making sure the system behaves the same way at 2 PM and at 2 AM.

A more useful way to think about cost is to decide what “done” means for your first release. Is this a self-contained assistant with scripted flows and minimal data retention? A rules engine that needs maintainable conversation logic and analytics? Or an AI-led experience that needs stronger guardrails, monitoring, and a human-in-the-loop fallback? Each step up isn’t just “more smart.” It’s more risk to manage, more QA to run, and more operational load after launch.

The table below isn’t a quote. It’s a scope-to-effort map you can use to sanity-check vendors, align stakeholders, and avoid the classic trap of budgeting for a demo and then discovering you actually needed a product.

| Feature Level | Technology Stack | Development Time | Cost Range | Maintenance/Month |
|---|---|---|---|---|
| Basic Scripted Bot | DialogFlow ES | 2-3 months | $15,000-$30,000 | $500-$1,000 |
| Advanced Rule-Based | DialogFlow CX/Rasa | 4-6 months | $40,000-$80,000 | $1,500-$3,000 |
| AI-Powered (GPT-3) | OpenAI API + Custom | 6-9 months | $80,000-$150,000 | $3,000-$5,000 |
| Enterprise Clinical | Custom NLP + FDA | 12-18 months | $200,000-$500,000 | $5,000-$15,000 |
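To compare tiers on total cost rather than build cost alone, you can add the maintenance for the months the bot is live. This sketch only re-uses the ranges from the table; the 12-month horizon is an assumption you can change.

```python
from typing import Dict, Tuple

# Ranges from the table: (build_low, build_high, maint_low, maint_high), in USD.
TIERS: Dict[str, Tuple[int, int, int, int]] = {
    "Basic Scripted Bot": (15_000, 30_000, 500, 1_000),
    "Advanced Rule-Based": (40_000, 80_000, 1_500, 3_000),
    "AI-Powered (GPT-3)": (80_000, 150_000, 3_000, 5_000),
    "Enterprise Clinical": (200_000, 500_000, 5_000, 15_000),
}


def cost_through_first_live_year(tier: str, months_live: int = 12) -> Tuple[int, int]:
    """Build cost plus maintenance for the months the bot is live, as a (low, high) range."""
    build_lo, build_hi, maint_lo, maint_hi = TIERS[tier]
    return (build_lo + maint_lo * months_live, build_hi + maint_hi * months_live)


for name in TIERS:
    lo, hi = cost_through_first_live_year(name)
    print(f"{name}: ${lo:,}-${hi:,}")
```

For the Basic Scripted Bot tier, that works out to $21,000-$42,000 including a year of maintenance.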

If you want to discuss your therapeutic chatbot idea with a company that prioritizes your business’s growth as well as product development, contact us here.

[This blog was originally published on 8/31/2022 and has been updated for more recent content]

Frequently Asked Questions

What is a mental health chatbot?

An AI assistant that isn’t meant to replace a human therapist but can provide initial advice by gathering patient data and replying accordingly.

What tools do you recommend for creating a chatbot?

DialogFlow (ES or CX), Rasa, and GPT-based models such as GPT-3, with BlenderBot as another option for open-ended conversation.

How long does it take to develop a mental health chatbot?

MVP: 3-4 months. Production-ready: 6-9 months. Enterprise with FDA consideration: 12-18 months.

What platforms work best for mental health chatbots?

Mobile-first (native app or mobile web) is usually the best starting point. Web portals are common for employer/clinic rollouts and admin workflows. Messaging channels (WhatsApp/Messenger/SMS) can be great in markets where users already live in chat—treat them as an add-on, not the foundation.

How much does it cost to build a mental health chatbot?

Typical ranges depend on scope and risk. A realistic MVP is $30,000-$80,000 if you keep it focused (one core workflow, basic safety escalation, limited integrations, and a simple admin view). A more advanced AI chatbot is usually $80,000-$150,000+ once you add richer clinical workflows, stronger safety + monitoring, analytics, and multiple channels. Enterprise builds often start at $200,000+ when you include employer/clinic-grade security and reporting, integrations (EHR/scheduling/billing), multi-tenant admin tooling, and readiness for FDA-adjacent claims or validation.

How much does it cost to build a mental health chatbot MVP?

For an MVP, plan on $30,000-$80,000. To stay in that range, keep the scope tight: one target user group, one primary workflow (intake + routing or between-session support), a minimal UI, basic escalation logic, and minimal data retention. Costs jump when you add EHR integrations, therapist scheduling + billing, messaging/video, multi-state rollout requirements, or deeper reporting and admin workflows.

Is ChatGPT HIPAA compliant for therapy apps?

By itself, no. HIPAA compliance is an end-to-end system property: infrastructure, access controls, logging, encryption, retention/deletion, vendor contracts (including BAAs), and how you handle transcripts and identifiers. If you use an LLM in a therapy app, treat it as one component inside a HIPAA-ready architecture and only send the minimum necessary data. Always confirm whether your LLM/API vendor will sign a BAA for your use case before any PHI is involved.
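"Only send the minimum necessary data" can be partly enforced in code. This is a minimal sketch of a redaction step applied before text leaves your HIPAA-ready boundary. The patterns and the payload shape are assumptions, and regex redaction alone is not HIPAA de-identification (names, addresses, and contextual details will slip through), so it complements a BAA and your other safeguards rather than replacing them.

```python
import re
from typing import Dict, List

# Illustrative patterns only. They catch obvious identifiers; names, addresses,
# and contextual details will slip through, so this is NOT HIPAA de-identification.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"(?:\+?1[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b"), "[PHONE]"),
]


def minimize(text: str) -> str:
    """Replace obvious identifiers with placeholder tokens."""
    for pattern, token in REDACTIONS:
        text = pattern.sub(token, text)
    return text


def build_llm_payload(user_message: str, session_summary: str) -> Dict[str, List[Dict[str, str]]]:
    # Hypothetical payload shape: this turn plus a short summary, no user IDs or full history.
    return {
        "messages": [
            {"role": "system", "content": "Context: " + minimize(session_summary)},
            {"role": "user", "content": minimize(user_message)},
        ]
    }


print(minimize("Email me at sam.lee@example.com or call 555-123-4567."))
```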

How do I get FDA clearance for a mental health app?

Start by defining intended use and claims. FDA oversight is driven by what you claim the software does (diagnose, treat, prevent, mitigate) and how it functions. If you’re in “digital therapeutic / SaMD” territory, expect to build a Quality Management System, run verification/validation, and produce evidence proportional to risk (often including clinical evaluation). Many teams begin with a regulatory positioning memo and a pre-submission strategy before committing to the full pathway.

What is the best AI model for mental health: GPT-4 or Claude?

There’s no universal “best.” Choose based on safety controls, consistency, latency/cost, and how well the model behaves inside your guardrails. For mental health use cases, what matters most is your safety architecture (escalation logic, restricted response modes, and runtime validation), plus your testing process across edge cases. Many teams prototype with more than one model and select the final option based on measured performance in their specific workflows and risk scenarios.