So, you’ve got a great idea for a healthcare app. You’ve identified a need, recruited a rockstar healthcare app development company, and maybe even built a prototype. But now you’re starting to build the infrastructure for the veritable fire hose of data that you’re sure to be collecting from your users, and a critical question halts your progress: “Can I use Machine Learning to make this better?”

The answer is undoubtedly: “Yes!”

But how? How can Machine Learning be used in healthcare?

The vast space of Machine Learning and its associated techniques is expanding every day, so knowing how to choose between them all can feel like a Sisyphean task. Do you need NLP or Computer Vision? Deep Learning or Random Forests? Should you use an off-the-shelf API or hand-rolled models? The list goes on.

Luckily, knowing what type of data you’re collecting and what particular need you have for machine learning can make your decision (almost) a no-brainer. To get a better idea of the kinds of technologies that burgeoning healthcare apps are relying on, let’s take a look at the different areas of study within the Machine Learning space, as well as examples of their successful application in medical research.

Key Takeaways:

- If your app’s business logic fits within a set of rules, you don’t necessarily need machine learning capabilities in your app.

- One of the biggest challenges with developing an ML app is deploying it to production. That involves coordination between DevOps engineers and data scientists to set up seamless transition mechanisms from staging to production.

- NLP and convolutional neural networks are the most promising applications of AI in healthcare.

- The go-to frameworks that support the straightforward implementation of ML capabilities in mobile apps include TensorFlow Lite, Core ML, and Caffe2Go.

Table of Contents:

- Difference in Use Between AI, Deep Learning, and Machine Learning in the Medical Field

- Advantages of Machine Learning and Deep Learning in Healthcare

- The Ethics of Machine Learning Algorithms Used in Healthcare

- The Challenges of the Healthcare Projects Using Machine Learning

- Top 3 Examples of Machine Learning in Healthcare

- Our Experience in Developing Machine Learning Healthcare Applications

Difference in Use Between AI, Deep Learning, and Machine Learning in the Medical Field

AI, machine learning, deep learning, neural networks — there’s a lot to wrap your head around before you form a solid understanding of how computer brains advance modern medicine.

How about we start by explaining these terms?

AI stands for artificial intelligence and broadly describes any instance of a computer emulating human brain activity, whether recognizing and making sense of images, text, voice, etc.

Machine learning is a subset of AI that introduces the concept of teaching machines by having them analyze large amounts of data. At its core, any ML product is based on an algorithm that helps predict different outcomes based on the analysis of historical data.

Deep learning is a subset of ML with a more complex architecture. Deep learning solutions are built on neural networks: layers of simple computational units stacked in a hierarchy, loosely inspired by neural connections in the human brain. To imagine a deep learning application in medicine, think about a patient scheduling app that predicts no-shows from incoming data and keeps improving as it is retrained on the cases it previously got wrong.

In a nutshell, AI, ML, and DL are nested: deep learning is a subset of machine learning, which is itself a subset of AI. Each describes the same idea at a different level of abstraction, which is why the terms are so often used interchangeably.

Advantages of Machine Learning and Deep Learning in Healthcare

Natural language processing: making sense from text

Natural Language Processing lies at the intersection of “computer science, information engineering, and artificial intelligence.” As the name might imply, it deals with language interpretation by computers, either via text or voice analysis.

In a nutshell, NLP is used primarily to identify key elements (entities, intent, relationships) in free-form text and make that data usable by computer systems in various ways. Digital personal assistants like Siri, Cortana, and Alexa make heavy use of concepts in NLP to do what they do.

Check out our article about NLP app development

Similar to the recent explosion of personal assistant AI in the consumer market, the healthcare industry is seeing a particularly remarkable surge in apps that use NLP to give our devices and physicians a better understanding of our health.

One popular example in recent years? Healthcare chatbots.

By using the previously mentioned entity recognition to extract structured data from a piece of text, and applying supervised learning classification algorithms to interpret the data’s meaning, chatbots can “understand” what the user wants to do while also collecting and storing the information they need to do it.
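To make the two steps above concrete, here is a minimal sketch in plain Python: a tiny naive Bayes classifier guesses the user’s intent, and a couple of regular expressions pull out entities. The training phrases, intent names, and entity patterns are all hypothetical, and a production chatbot would lean on a framework rather than hand-rolled code like this.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled utterances (illustrative only, not real user data).
TRAINING = [
    ("i want to book an appointment", "book_appointment"),
    ("schedule a visit with my doctor", "book_appointment"),
    ("can i see the doctor on friday", "book_appointment"),
    ("i feel anxious and stressed", "log_mood"),
    ("feeling sad today", "log_mood"),
    ("i am stressed about work", "log_mood"),
]

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def train(examples):
    """Count words per intent: the sufficient statistics for naive Bayes."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    for text, intent in examples:
        intent_counts[intent] += 1
        word_counts[intent].update(tokenize(text))
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, intent_counts, vocab

def classify(text, model):
    """Return the intent with the highest log-probability (Laplace smoothing)."""
    word_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())
    best_intent, best_score = None, float("-inf")
    for intent, count in intent_counts.items():
        score = math.log(count / total)
        denominator = sum(word_counts[intent].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[intent][word] + 1) / denominator)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent

DAYS = r"monday|tuesday|wednesday|thursday|friday|saturday|sunday"

def extract_entities(text):
    """Rule-based entity extraction: a weekday and a clock time, if present."""
    entities = {}
    lowered = text.lower()
    day = re.search(rf"\b({DAYS})\b", lowered)
    if day:
        entities["day"] = day.group(1)
    time = re.search(r"\b(\d{1,2})\s*(am|pm)\b", lowered)
    if time:
        entities["time"] = f"{time.group(1)}{time.group(2)}"
    return entities

MODEL = train(TRAINING)
message = "please book me a visit on monday at 3 pm"
print(classify(message, MODEL), extract_entities(message))
```

The intent tells the app which flow to start, and the entities fill in the form fields the user would otherwise have typed by hand.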

In doing so, chatbots allow users to use the app by simply having a conversation instead of filling out forms and selecting from dropdown menus. It’s interesting to note that there has been a particularly striking uptick in use for the management of mental health, perhaps because of this more intimate interaction model.

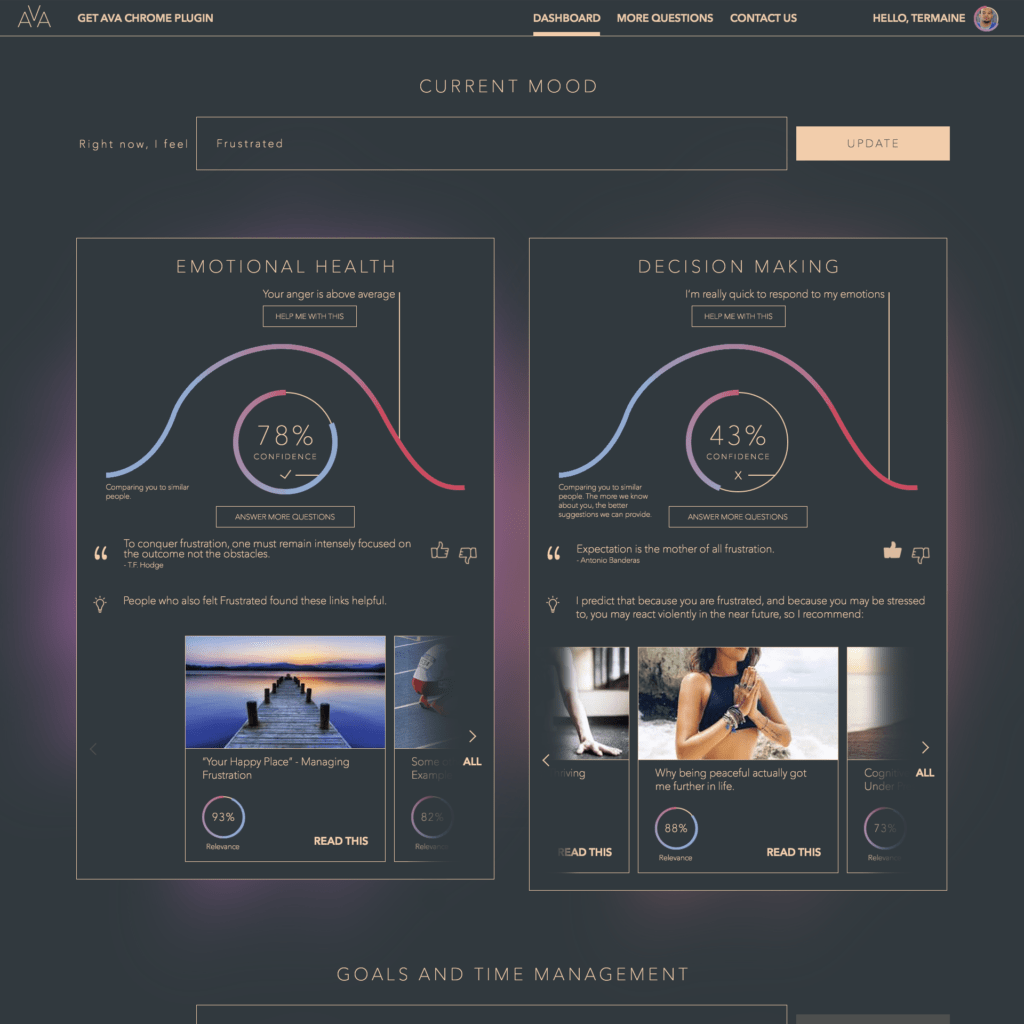

XZEVN–a “well-being self-management” app–wants to give professionals a better understanding of their overall well-being and identify when and how to intervene. Topflight helped them do just that by developing a chatbot with them. The chatbot uses information about a user’s mental state to recommend content from around the web to help manage their stress and bolster their decision-making. By taking into account the user’s own stated goals, the chatbot also allows them to improve their mental health according to their own needs.

But that’s just one example. Another is Tess from X2, which can have natural-sounding conversations with patients about their mental health, give out surveys to patients on behalf of their psychiatrist, and help patients meet clinical goals alongside healthcare professionals. It acts as a friendlier way to populate a patient’s EHR by simply having a conversation, decreasing patient levels of depression and anxiety along the way.

Once a labor-intensive job necessitating complicated code and advanced domain knowledge, building chatbots can now be easily accomplished with one of the half a dozen frameworks available from big players like Microsoft, Facebook, Google, and Amazon. But if free and extensible is your jam instead, Rasa is an open-source chatbot framework that does just as well as the big names and with more customization to boot.

Chatbots may be capable of having natural-sounding conversations, but they still require the user to be guided down pre-written conversational paths. So what if the structured nature of chatbot conversations isn’t what you need? What if you need an open-ended AI that can respond to any question and give answers to them like an expert?

Well, first of all, you’ll need a fair amount of already labeled data for your domain. But what you need is called a Question Answering System. Yes, that’s really what they’re called.

The unimaginative name aside, they can be fabulously complex, involving deep bi-directional autoencoders requiring 4 days of training time on the latest hardware built for neural networks, or they can be relatively simple. One fairly inspirational example of a simple but effective QA system is Jill Watson, a teaching assistant who answers course questions with a 97% accuracy rating by drawing on years’ worth of previous questions and answers in an online forum.
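At the simple end of that spectrum, a QA system can be little more than a lookup over past questions. The sketch below is an assumption about what such a baseline might look like, not a description of how Jill Watson works: it matches a new question against a hypothetical archive using keyword overlap (Jaccard similarity) and declines to answer when nothing is close enough.

```python
import re

# Hypothetical archive of previously answered forum questions.
ARCHIVE = [
    ("When is the first assignment due?", "Assignment 1 is due at the end of week 3."),
    ("Can we work in groups on the project?", "Yes, groups of up to three are allowed."),
    ("Which Python version does the course use?", "The course uses Python 3."),
]

# Very small stopword list, chosen by hand for this example.
STOPWORDS = {"the", "is", "a", "an", "we", "on", "in", "of", "to",
             "does", "can", "do", "when", "which", "what"}

def keywords(text):
    """Lowercased word set with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def jaccard(a, b):
    """Overlap between two keyword sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def answer(question, archive=ARCHIVE, threshold=0.3):
    """Return the stored answer for the most similar past question,
    or None when the best match falls below the confidence threshold."""
    query = keywords(question)
    best_score, best_answer = max(
        (jaccard(query, keywords(past_q)), past_a) for past_q, past_a in archive
    )
    return best_answer if best_score >= threshold else None

print(answer("When is assignment 1 due?"))
print(answer("Is there a final exam?"))
```

The threshold is the important design choice here: refusing to answer and handing off to a human is usually safer than a confident wrong reply, especially in a medical setting.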

The real testament to how far NLP has come is that Jill managed to fool her class of students studying AI into thinking she was a human TA, at least for a while.

Convolutional neural nets: images, diagnoses, and time-series. Oh my!

Okay, NLP is cool and all, but what if your medical data isn’t text-based? What if you have loads and loads of MRI, x-ray, or other diagnostic images to process and draw insights from?

Well, as I’m sure you’re aware, the field of image recognition (and segmentation, and even generation) has exploded in recent years. And rest assured, the recent advances in this field aren’t all labeling traffic signs and slapping Steve Buscemi’s face on a video of Jennifer Lawrence.

No, in fact, deep convolutional neural networks for image classification have shown almost magical levels of diagnostic accuracy in medicine. From breast cancer to retinopathy to live labeling of polyps during colonoscopies, deep learning proves that human-level understanding of images may not be the Strong-AI problem that it was once thought to be.

But it’s not all x-rays and mammograms in the medical deep-CNN space. Companies like Face2Gene are using facial recognition and images taken by smartphones to classify phenotypes and accurately diagnose developmental syndromes. Chances are, if you have image data to analyze, CNNs are the way to go to reach state-of-the-art performance.

However, keep in mind that like all deep learning methods, deep convolutional networks require loads of training data to reach the levels of diagnostic certainty seen in the above examples. Thankfully, using Google’s repository of pre-trained deep convolutional networks alongside your own data can drastically cut down on the need for training samples in your chosen domain. Hurray for transfer learning!

On top of image recognition–with the modern ubiquity of sensors in phones and smartwatches tracking us every day–the analysis of time-series data is becoming increasingly relevant. Because convnets, by their very design, seek to take advantage of the structure of data, they are perfect for the task. Though perhaps not as flashy in the media, time-series forecasting and classification is another field that is seeing state-of-the-art results from Deep CNNs.
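To see what “taking advantage of structure” means for a time series, here is a small sketch of the building blocks of a 1D convolutional layer written in plain Python. The heart-rate readings and the hand-picked kernel are hypothetical; in a real CNN the kernel values are learned during training rather than chosen by hand.

```python
def conv1d(signal, kernel):
    """Valid-mode 1D cross-correlation: slide the kernel over the signal
    and take a weighted sum at every position."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def relu(values):
    """Keep positive responses, zero out the rest."""
    return [max(0.0, v) for v in values]

def max_pool(values, size):
    """Downsample by keeping the strongest response in each window."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]

# Hypothetical smartwatch heart-rate readings with a sudden jump in the middle.
heart_rate = [62, 63, 62, 64, 63, 95, 97, 96, 64, 63, 62, 63]

# This kernel computes signal[i + 2] - signal[i], so it responds to sharp rises.
rise_kernel = [-1.0, 0.0, 1.0]

features = relu(conv1d(heart_rate, rise_kernel))
pooled = max_pool(features, 5)
print(features)
print(pooled)
```

The same small kernel is reused at every time step, which is why a convolutional layer needs far fewer parameters than a fully connected one and can spot a pattern wherever it occurs in the recording.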

Topflight even has experience in doing just that. For one of our clients, we took EEG data containing the Delta, Theta, Alpha, Beta, and Gamma waves from a number of EEG electrodes — totaling 17430 data points each for a number of patients over time — and used that medical data to predict what conditions a patient might have. Once trained, our model could predict what condition a new patient coming into the system might have and help reduce the time to diagnose the patient.

Beyond deep learning

Auto-encoders, entity-recognition, image recognition, pre-trained deep convolutional neural networks, it’s all too much! Despite what popular trends might have you believe, Machine Learning is more than just deep neural networks.

In fact, some of the simplest machine learning algorithms can be used to great effect to do things like categorize patients based on their behavior, predict healthcare costs, and even determine whether patients will skip their next appointment. Even Watson, the AI that managed to beat Jeopardy champion Ken Jennings, ultimately uses a logistic regression model (and some fancy NLP and hard-coded rules) to make its predictions.
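As a rough illustration of how little machinery a simple model needs, here is a logistic regression trained with plain gradient descent to predict appointment no-shows. The features (booking lead time in weeks, number of prior no-shows) and the eight training rows are made up for the example, and this is in no way Watson’s model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability that the patient misses the appointment."""
    return sigmoid(bias + sum(w * f for w, f in zip(weights, features)))

def train_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log-loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(X, y):
            error = predict_proba(features, weights, bias) - label
            for j, f in enumerate(features):
                grad_w[j] += error * f
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

# Hypothetical rows: [lead time in weeks, prior no-shows]; label 1 = missed.
X = [[0.5, 0], [1, 0], [1, 1], [2, 0], [3, 1], [4, 2], [5, 1], [6, 3]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

WEIGHTS, BIAS = train_logistic(X, y)
print("weights:", WEIGHTS, "bias:", BIAS)
print("booked 6 weeks out, 3 prior no-shows:", predict_proba([6, 3], WEIGHTS, BIAS))
print("booked this week, no prior no-shows:", predict_proba([0.5, 0], WEIGHTS, BIAS))
```

The learned weights can be read directly: a positive weight on lead time means that, in this toy data, appointments booked further ahead are more likely to be missed.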

Some of these less complex machine learning algorithms may be far better suited to the custom mobile app development space. Simpler algorithms tend to have smaller profiles, quicker execution times, and better explainability, which is especially important for the healthcare space, and which deep neural nets struggle with in particular. Whether these factors affect your decisions at the end of the day depends on how you decide to integrate your algorithm of choice into your app: by serving the model on the mobile device itself or server-side in the cloud.

If you’d like to serve your model on the user’s mobile device itself, a smaller model with faster execution times would be ideal. Decision trees, small neural networks (also called multilayer perceptrons), linear or logistic regressions are the way to go here. Not only are these algorithms eminently simple to explain, once trained, but they also tend to have a much quicker execution time and smaller memory profile.
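Here is a sketch of why a decision tree is so easy to ship and to explain. The tree below is written by hand to stand in for a trained one, and its features and thresholds are hypothetical; the point is that prediction is a handful of comparisons, and the path taken doubles as the explanation.

```python
# A hand-written tree standing in for a trained model (hypothetical thresholds).
TREE = {
    "feature": "prior_no_shows",
    "threshold": 1,
    "left": {"label": "likely to attend"},
    "right": {
        "feature": "lead_time_days",
        "threshold": 14,
        "left": {"label": "likely to attend"},
        "right": {"label": "at risk of no-show"},
    },
}

def predict(node, patient, path=None):
    """Walk the tree and return (label, list of decisions taken)."""
    path = [] if path is None else path
    if "label" in node:  # reached a leaf
        return node["label"], path
    value = patient[node["feature"]]
    if value <= node["threshold"]:
        path.append(f"{node['feature']} = {value} <= {node['threshold']}")
        return predict(node["left"], patient, path)
    path.append(f"{node['feature']} = {value} > {node['threshold']}")
    return predict(node["right"], patient, path)

label, reasons = predict(TREE, {"prior_no_shows": 2, "lead_time_days": 30})
print(label)
for reason in reasons:
    print(" because", reason)
```

A structure like this serializes to a few hundred bytes of JSON and runs without any ML runtime on the device, which is a large part of its appeal for mobile.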

Of course, you can always serve your trained models as a batch process or from an API call. Tutorials on how to do that are everywhere. However, as anyone who has deployed machine learning at any kind of scale can tell you: training models is a piece of cake compared to the complexity of deploying trained models in the cloud.

So if your use case allows you to implement your trained models on a user’s mobile device, despite the potential pitfalls, that means less infrastructure to manage, fewer network hops for your users, and a faster time to prediction leading to a better user experience.

All hope is not lost for those that wish to deploy deep learning models on mobile devices, either. Many of the popular deep learning frameworks have turned their eye to the mobile space in recent years. TensorFlow Lite, Core ML, and Caffe2Go are just some of the frameworks allowing you to easily get your deep learning model onto a mobile device. They each make use of techniques like model compression, reducing input size, and constraints on network size to make it happen.
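One common form of model compression is weight quantization: storing each weight as a small integer plus a shared scale instead of a 32-bit float. The sketch below shows the idea in plain Python with symmetric 8-bit quantization; it is a simplified illustration under that assumption, not the exact scheme any of the frameworks above implement.

```python
def quantize(weights, bits=8):
    """Symmetric quantization: map floats to signed integers with one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights at inference time."""
    return [q * scale for q in quantized]

# Hypothetical layer weights.
weights = [0.5, -1.27, 0.0, 1.27]

quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)    # integers in the range [-127, 127]
print(worst_error)  # never more than half a quantization step
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error that is bounded by half the scale.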

The Ethics of Machine Learning Algorithms Used in Healthcare

Besides the technical complexity, building a healthcare ML application also involves tackling several ethical challenges:

- privacy

- fairness

- transparency and conflicts of interest

The privacy challenge should be effectively addressed on the regulatory level by complying with the HIPAA rules and securing patients’ PHI (protected health information).

The fairness aspect of ML development has to do with supplying representative data for adequate machine analysis. We need to acknowledge that some algorithms may work better or worse for specific groups of populations.
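One hedged way to check for this in practice is to compute the same metric separately for each group and compare. The sketch below uses recall (the share of truly positive cases the model catches) on a made-up set of predictions; the group labels and records are hypothetical.

```python
from collections import defaultdict

def recall_by_group(records):
    """records: iterable of (group, actual, predicted), where 1 = condition present.
    Returns the fraction of actual positives the model caught, per group."""
    caught = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            caught[group] += int(predicted == 1)
    return {group: caught[group] / positives[group] for group in positives}

# Hypothetical evaluation results: (group, actual, predicted).
RECORDS = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 1), ("B", 1, 0), ("B", 0, 0),
]

recalls = recall_by_group(RECORDS)
gap = max(recalls.values()) - min(recalls.values())
print(recalls, "gap:", gap)
```

A large gap between groups is a signal that the training data may under-represent one of them, and that the overall accuracy figure is hiding the problem.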

Transparency and conflict-of-interest challenges call for appropriate recording and reporting of machine learning model performance metrics. Potential bottlenecks here include the “black-box” nature of ML algorithms, which makes them hard to interpret, and their total dependence on the size and features of a dataset and the type of available variables.

The Challenges of the Healthcare Projects Using Machine Learning

Deep learning projects in healthcare need to solve quite a few issues before AI can fully displace traditional technologies.

- Retrospective versus prospective model training

Machine learning applications usually train their models based on historical data. When switched to real-world data, they tend to output worse results simply because the training and real-world data differ. Wearable technology, when applicable, can be the tool that helps ML algorithms adjust to production settings.

Also check out our article about machine learning app development.

- Metrics don’t translate into clinical applicability

As no single measure fully describes all the properties of a machine learning model, we must rely on several measures to summarise a model’s performance. However, none of these measures point out what’s most important to patients, namely whether using the model leads to a positive change in patient care.

- Dataset shift

Predictive algorithms applied in a healthcare practice don’t exist in a vacuum. They need to account for changes in the settings (shifting patient populations and evolving operational practices) and the changes introduced by the ML algorithms themselves.

- Logistical issues in implementing AI solutions

This challenge exists due to the siloed and inconsistent nature of health data that’s often distributed among several standalone platforms. Therefore, aggregating and cleansing health data for machine learning is a must-have attribute of any medical ML project.

- Limited algorithmic interpretability

One of the pain points of implementing deep learning in the medical field is that the model’s decision-making process cannot be presented in a way healthcare professionals can readily understand.

For example, machine learning algorithms used in healthcare data analytics may highlight certain trends but can’t explicitly trace how they arrived at these conclusions.
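Of the challenges above, dataset shift is one you can at least monitor for. A minimal sketch, assuming you keep a sample of a feature from training time and compare it with what production sees: the Population Stability Index (PSI) below scores how far the two distributions have drifted apart. The ages, bin edges, and the 0.25 alert level (a commonly quoted rule of thumb, not a clinical standard) are all assumptions for the example.

```python
import math

def bin_shares(values, edges):
    """Share of values falling in each bin defined by consecutive edges.
    Values outside the edges are not counted in any bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    # A small floor keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), 1e-6) for c in counts]

def psi(expected, actual, edges):
    """Population Stability Index between a reference and a current sample."""
    p = bin_shares(expected, edges)
    q = bin_shares(actual, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(p, q))

# Hypothetical patient ages at training time and in production.
training_ages = [25, 32, 41, 38, 29, 55, 47, 36]
production_ages = [61, 58, 72, 66, 49, 70, 64, 59]
EDGES = [20, 40, 60, 80]

score = psi(training_ages, production_ages, EDGES)
print("PSI:", score, "shift detected:", score > 0.25)
```

Running a check like this on a schedule gives an early warning that the patient population has moved away from what the model was trained on, before accuracy visibly degrades.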

Top 3 Examples of Machine Learning in Healthcare

Despite all the challenges we’ve just mentioned, machine learning and the healthcare industry seem to be a match made in heaven. As we already said, the most promising opportunities in machine learning for healthcare technologies lie in the areas of natural language processing and computer vision.

Let’s review three examples of machine learning in healthcare projects.

PathAI

Founded: 2015.

Headquarters: Cambridge, Massachusetts.

ML application: PathAI applies machine learning to help pathologists make faster, more accurate diagnoses and to identify patients who may benefit from new therapies.

KenSci

Founded: 2015.

Headquarters: Seattle, Washington.

ML application: KenSci develops an AI-powered risk prediction platform helping healthcare providers and payers identify clinical, financial, and operational risks to save costs and lives.

Quantitative Insights

Founded: 2010.

Headquarters: Chicago, Illinois.

ML application: The company works on improving the accuracy and speed of breast cancer diagnosis by using its AI-assisted breast MRI product Quantx.

Hopefully, these deep learning applications in healthcare can spur your imagination as to what is feasible with AI and ML in healthcare.

Our Experience in Developing Machine Learning Healthcare Applications

One of the exciting ML healthcare applications we’ve built at Topflight was an AI-assisted mental health chatbot. Our role in the project was to build a web and mobile app that could offer AI-driven assistance and recommend learning materials based on user input.

The user can enter how they are feeling through multiple channels, and the app uses a combination of a Restricted Boltzmann Machine algorithm and a K-Nearest Neighbors algorithm to make predictions on what content would help the user improve their mental state.
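To give a feel for the K-Nearest Neighbors half of that combination, here is a minimal sketch in plain Python. It is not the project’s actual implementation: the mood vector (stress, energy, sadness), the history of what helped past users, and the content names are all hypothetical, and the Restricted Boltzmann Machine part is left out entirely.

```python
import math
from collections import Counter

# Hypothetical history: (stress, energy, sadness) scores in [0, 1]
# paired with the content that helped a user in that state.
HISTORY = [
    ((0.9, 0.2, 0.3), "breathing exercise"),
    ((0.8, 0.3, 0.2), "breathing exercise"),
    ((0.2, 0.1, 0.9), "gratitude journal"),
    ((0.3, 0.2, 0.8), "gratitude journal"),
    ((0.1, 0.9, 0.1), "goal-setting guide"),
]

def recommend(mood, history=HISTORY, k=3):
    """Find the k past mood states closest to the current one (Euclidean
    distance) and return the content most of them found helpful."""
    nearest = sorted(history, key=lambda item: math.dist(mood, item[0]))[:k]
    votes = Counter(content for _, content in nearest)
    return votes.most_common(1)[0][0]

print(recommend((0.85, 0.25, 0.25)))
print(recommend((0.25, 0.15, 0.85)))
```

Because KNN simply looks up similar past cases, recommendations improve as more history accumulates, with no retraining step.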

Conclusion

Whether you’re building a chatbot to augment your users’ therapy sessions, analyzing EEG data from their peripheral devices, or screening their medical or brain scans for anomalies, the healthcare industry has a deep need for machine learning solutions. More opportunities are opening up for an enterprising app developer every day, from the clinic to the app store.

When it all comes down to it, though, the machine learning algorithm you choose for your killer healthcare app depends on the data you collect, what functionality you want your app to have, and what the limitations of your architecture are going to be. Topflight can help you navigate these complexities with ease. We’ll narrow down the space of possibilities and get you from idea to execution faster than a decision tree can spit out a prediction.

Okay, maybe not THAT fast. But just take a look at our previous work, and when you’re suitably convinced, you can get started with a proposal right away on our website. We look forward to hearing from you!

Related Articles:

- Healthcare mobile app design guide

- Build a HIPAA Compliant Application

- Doctor Appointment App Development

- How to build a Mental Health Application

- Python in Healthcare App Development

- How to create a telehealth application

- A Guide to Collecting Healthcare Data

- Healthcare App Development Guide

[This post was originally posted in February 2019, and has been updated in July 2021 with more recent content]

Frequently Asked Questions

Do you recommend keeping ML processing in the cloud or on-device?

Modern smartphones feature hardware chips specifically optimized for neural network-based computations. Therefore, if you plan to output prediction results in a mobile application, it makes sense to run ML operations on a smartphone.

Who do I need on a team to build a successful AI-powered application?

Besides the traditional roles of software developers, QA engineers, and UX/UI designers, you will also need data scientists, who work with data to create machine learning models.

What are the best big-data healthcare use cases for machine learning today?

Everything that has to do with automatic recognition and manipulation of medical imagery, OCR, and natural language processing.